Integrating DMBOK®2 & DCAM for Enhanced Data Management in AI for Data Professionals

Executive Summary

This webinar highlights key themes from the third episode of "Integrating DMBOK & DCAM for Enhanced Data Management," focusing on the critical intersection of data governance and AI governance. Howard Diesel and Andrew Andrews underscore the importance of interoperability between DCAM and DMBoK, emphasising the need for robust stakeholder engagement and for navigating the complex landscape of software engineering and data management regulations.

Howard covers key challenges in data modelling, the importance of lifecycle management, and the structure of maturity assessments in driving effective data governance within organisations. Additionally, the webinar explores the implementation of quality control measures, maturity assessment complexities, and the evolving strategies necessary for data management in the digital age, including accountability, risk mitigation, and project prioritisation.

Webinar Details:

Title: Integrating DMBOK®2 & DCAM for Enhanced Data Management in AI for Data Professionals

Date: 03/04/2025

Presenter: Howard Diesel & Andrew Andrews

Meetup Group: African Data Management Community Forum

Write-up Author: Howard Diesel

Contents

Third episode of Integrating DMBOK & DCAM for Enhanced Data Management

Importance of Unity in Data Governance and AI Governance

The Interoperability of DCAM and EDM

Data Management and Stakeholder Engagement

Navigating the Complexity of Software Engineering and Data Management Regulations

Data Governance and AI Regulation

Differences and Challenges in Data Modelling

Data Life Cycle and Data Governance Approaches

The Purpose and Structure of Data Management Maturity Assessment

Data Governance in the Organisation

Implementing Quality Control and Governance in Data Management

Challenging Aspects of Maturity Assessments

Data Management and Assessment Strategies in the Digital Age

Data Maturity and Accountability in Organisations

Maturity Assessment and Data Management Strategies

Understanding Effective Data Modelling and Compliance

Data Management and Strategy in Project Management

Navigating Business Risks and Project Prioritisation

Data Management and Risk Management Strategies

Third episode of Integrating DMBOK & DCAM for Enhanced Data Management

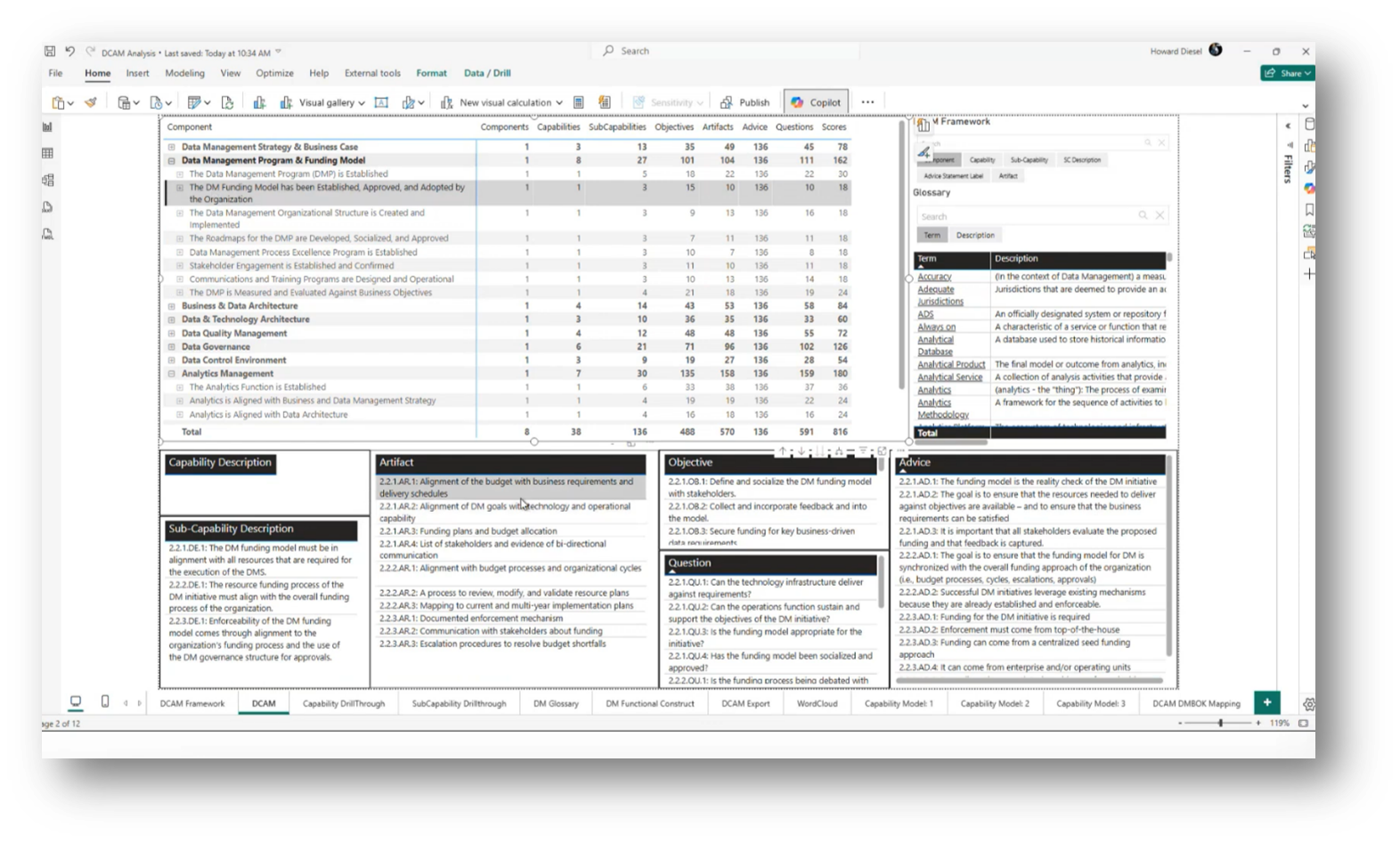

Howard Diesel opens the webinar and notes that the series is in its third week, with the final week approaching. The focus will be on creating a concise presentation tailored for a senior executive audience, reducing the current 160 slides to around 15 core slides, with additional material included in appendices. Howard Diesel and Andrew Andrews will co-present, highlighting the differences in operating models between the Enterprise Data Management (EDM) Council and DAMA International, with additional slides on this topic expected next week.

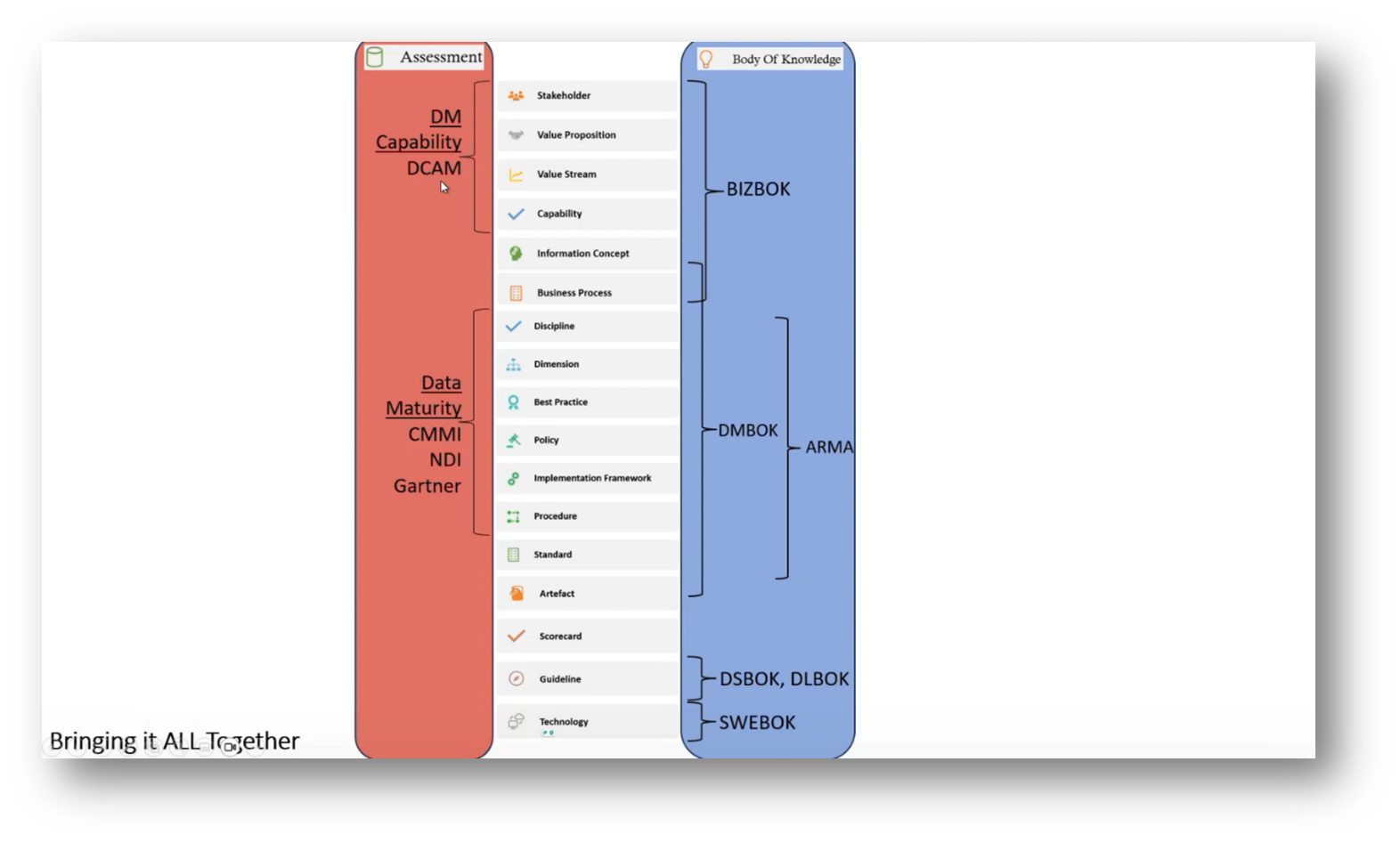

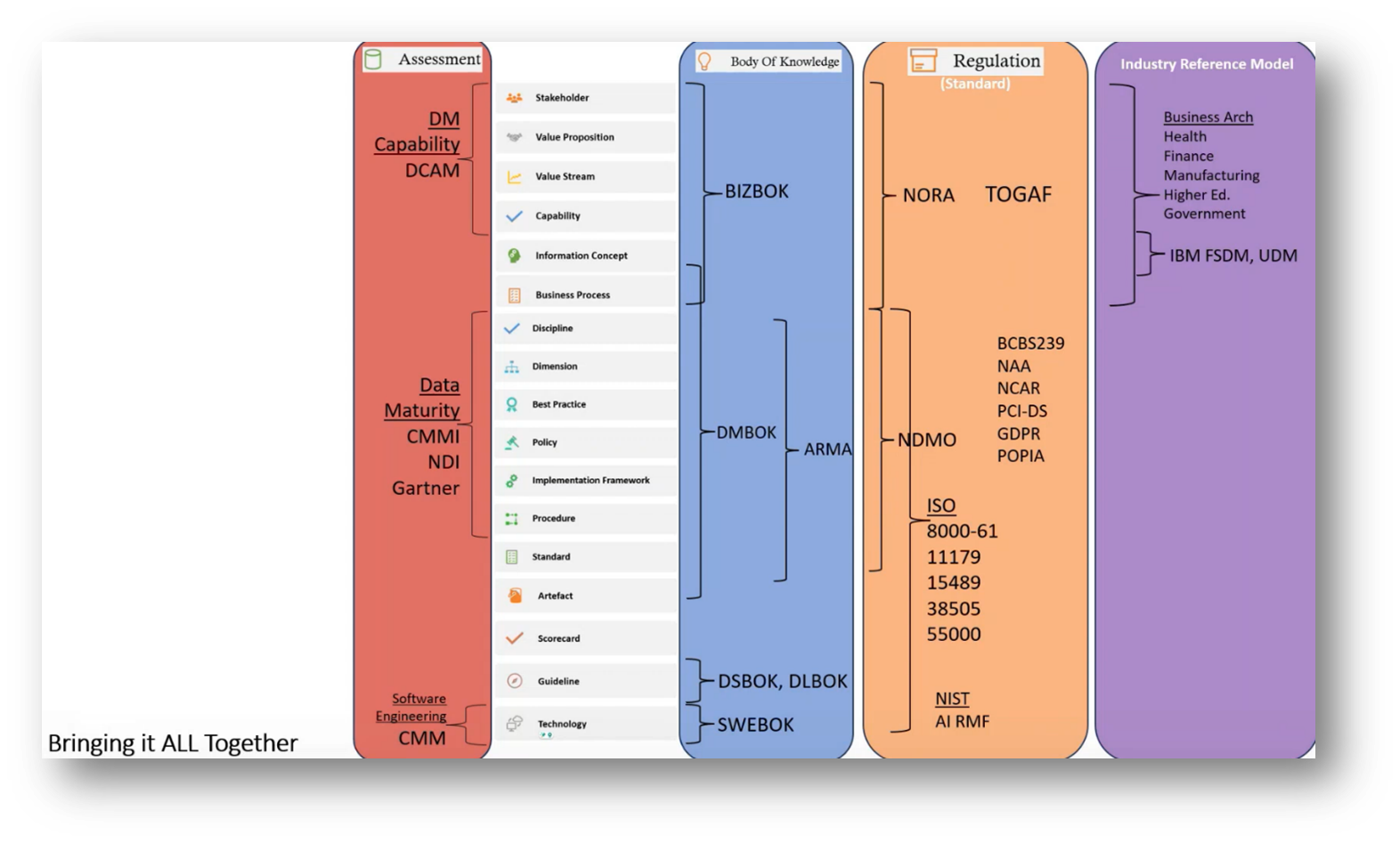

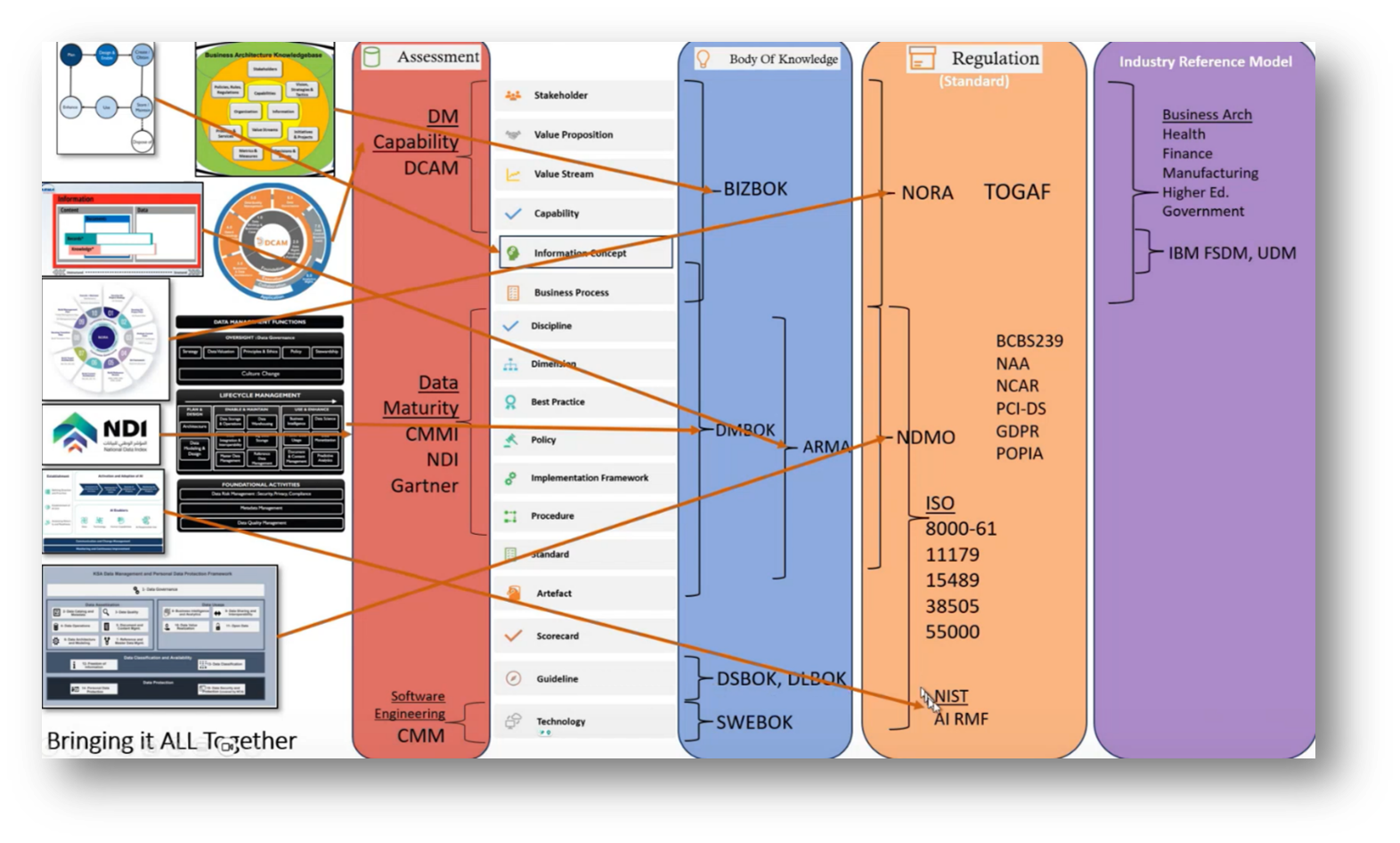

Figure 1 DM Framework Mappings

Importance of Unity in Data Governance and AI Governance

When dealing with data governance, AI governance, and data privacy governance, it’s crucial to avoid overcomplicating the framework with separate, conflicting compliance efforts. Howard shares John Bottega’s emphasis on a unified approach to governance that simplifies, focuses on essential areas, and fosters a common vision. The current trend of fragmented governance has led to confusion and inefficiency, making it imperative to streamline efforts and clearly define the issues at hand. Andrew then provides further insight into the challenges faced in this context.

Figure 2 Introduction

The Interoperability of DCAM and EDM

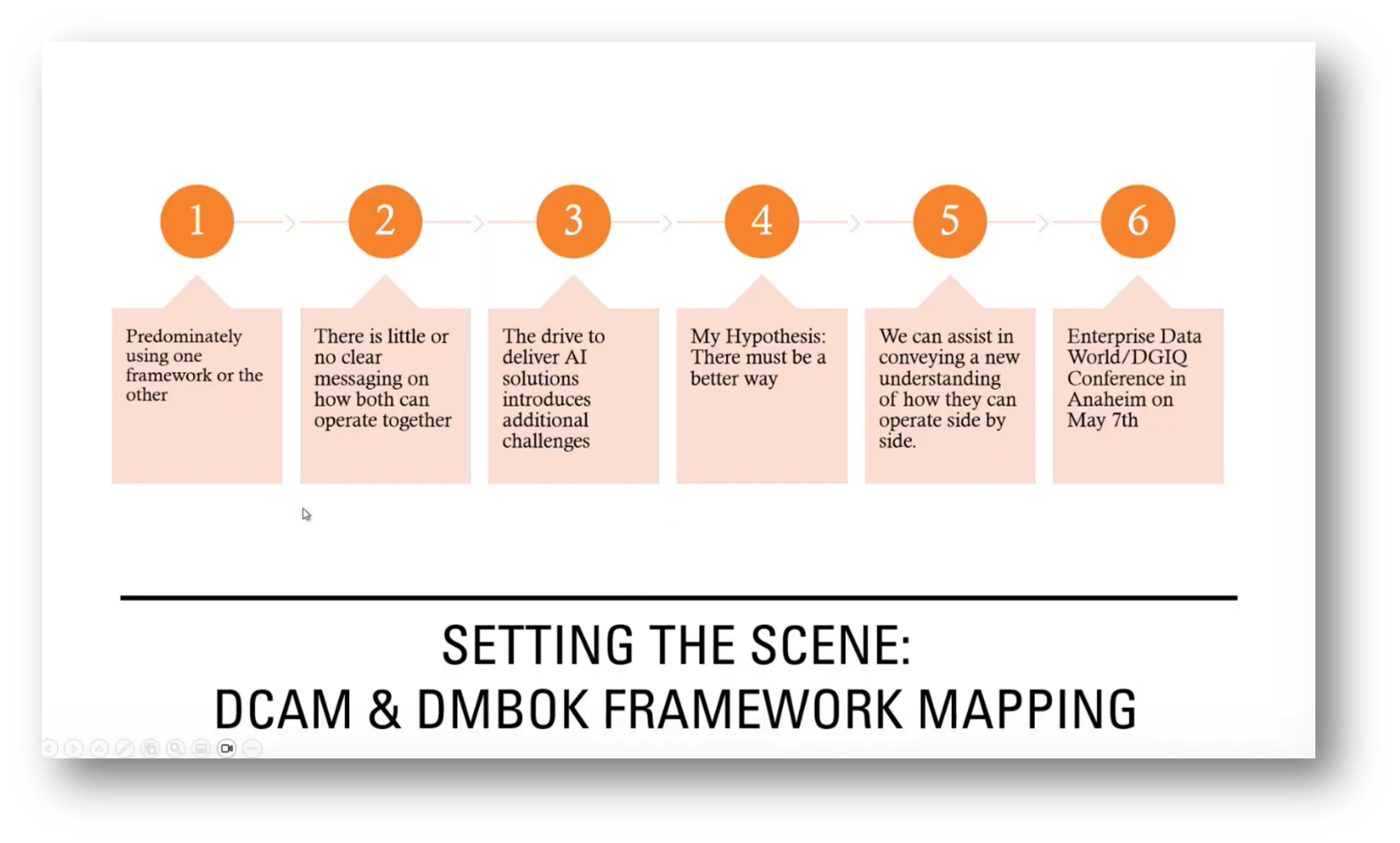

Andrew Andrews reflects on his five-year journey in data governance. He notes that, in his previous enterprise role, there was little visible interaction between the Data Management Body of Knowledge (DMBoK) and the Data Management Capability Assessment Model (DCAM). This observation sparked his interest in how widely DCAM was understood within the DAMA network. Despite being deeply involved in DAMA Australia and the international board, Andrew found limited understanding of DCAM's relevance.

After joining the EDM Council, Andrew encountered a mindset where clients identified solely as DMBoK or DCAM practitioners, often excluding the other framework. This experience inspired them to create a presentation highlighting the interoperability of DMBoK and DCAM, leading to the acceptance of their pitch by the editorial board for the upcoming WDGIQ conference.

Andrew points out the collaborative effort to integrate DCAM and DMBoK into everyday practice, aiming to change mindsets and normalise their presence on practitioners' bookshelves. He proposes that, much like a doctor prescribing complementary medications, these tools can be used together to enhance outcomes.

By applying an AI lens to this integration, the initiative hopes to deliver effective AI projects that benefit both the DCAM community and the broader Data Management and DAMA communities, which are influential in the field of data. The goal is to promote collaboration among practitioners while remaining faithful to their communities and to encourage a reevaluation of the role of data practitioners in the world.

Figure 3 Setting the Scene: DCAM & DMBoK Framework Mapping

Data Management and Stakeholder Engagement

Howard takes over and outlines the importance of aligning business architecture with stakeholder value, emphasising that all initiatives should focus on enhancing the value delivered to customers. He discusses the essential components of a business strategy, starting with stakeholder identification and the value proposition, which directs the organisation's capabilities, particularly in data management.

This framework extends down to business processes and the integration of data governance and quality practices that support better decision-making. Additionally, the assessment of artifacts produced during projects is highlighted, with a focus on maintaining quality through scorecards to evaluate work products. Howard then concludes by stating the necessity for guidelines, tips, and technology implementation as part of the body of knowledge needed for effective business and data management practices.

Key frameworks in the data management landscape are outlined: the Business Architecture Body of Knowledge (BIZBOK) for business architecture, DMBoK for data architecture, ARMA for records management, the Data Science Body of Knowledge (DS-BoK) for data stewardship and literacy, and SWEBOK for software engineering. Howard emphasises the distinct roles of DMBOK and DCAM, noting that DCAM serves as a model for capability assessments, unlike other maturity models such as CMMI, NDI, Gartner’s, and TDWI, which focus on different areas and are not centred on capabilities. Notably, DCAM and the Data Management Maturity Assessment (DMMA) are identified as separate entities, with DCAM providing a framework for effectively communicating the practices necessary for successful data management within an organisation.

Figure 4 DM Framework Mappings

Figure 5 Framework Mappings

Figure 6 Framework Mappings Pt.2

Navigating the Complexity of Software Engineering and Data Management Regulations

The complexities of software engineering are amplified by the need to navigate multiple regulations and frameworks, such as Saudi Arabia's NORA and NDMO standards, various ISO standards, BCBS, and PCI. As a result, professionals must consider multiple compliance requirements simultaneously while managing their projects.

Additionally, industry reference models and data management practices introduce further challenges. The need to adhere to data privacy regulations such as the GDPR and local laws such as Saudi Arabia's Personal Data Protection Law (PDPL) adds to the confusion, making it crucial to integrate best practices across all frameworks rather than addressing each in isolation. The overarching challenge lies in efficiently managing these diverse requirements to produce the evidence needed for compliance while maintaining effective governance.

Figure 7 Framework Mappings Pt.3

Figure 8 Framework Mappings Pt.4

Data Governance and AI Regulation

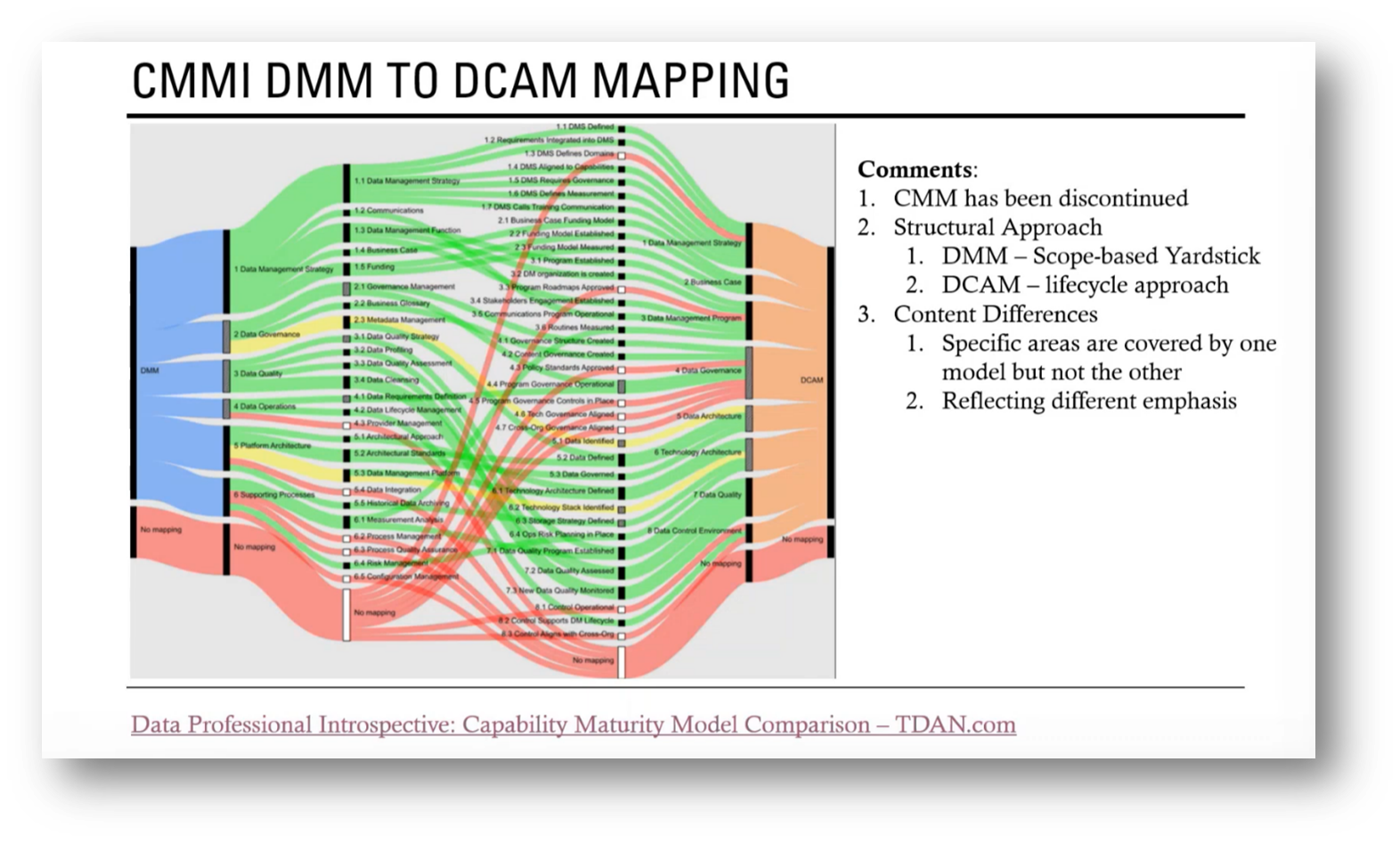

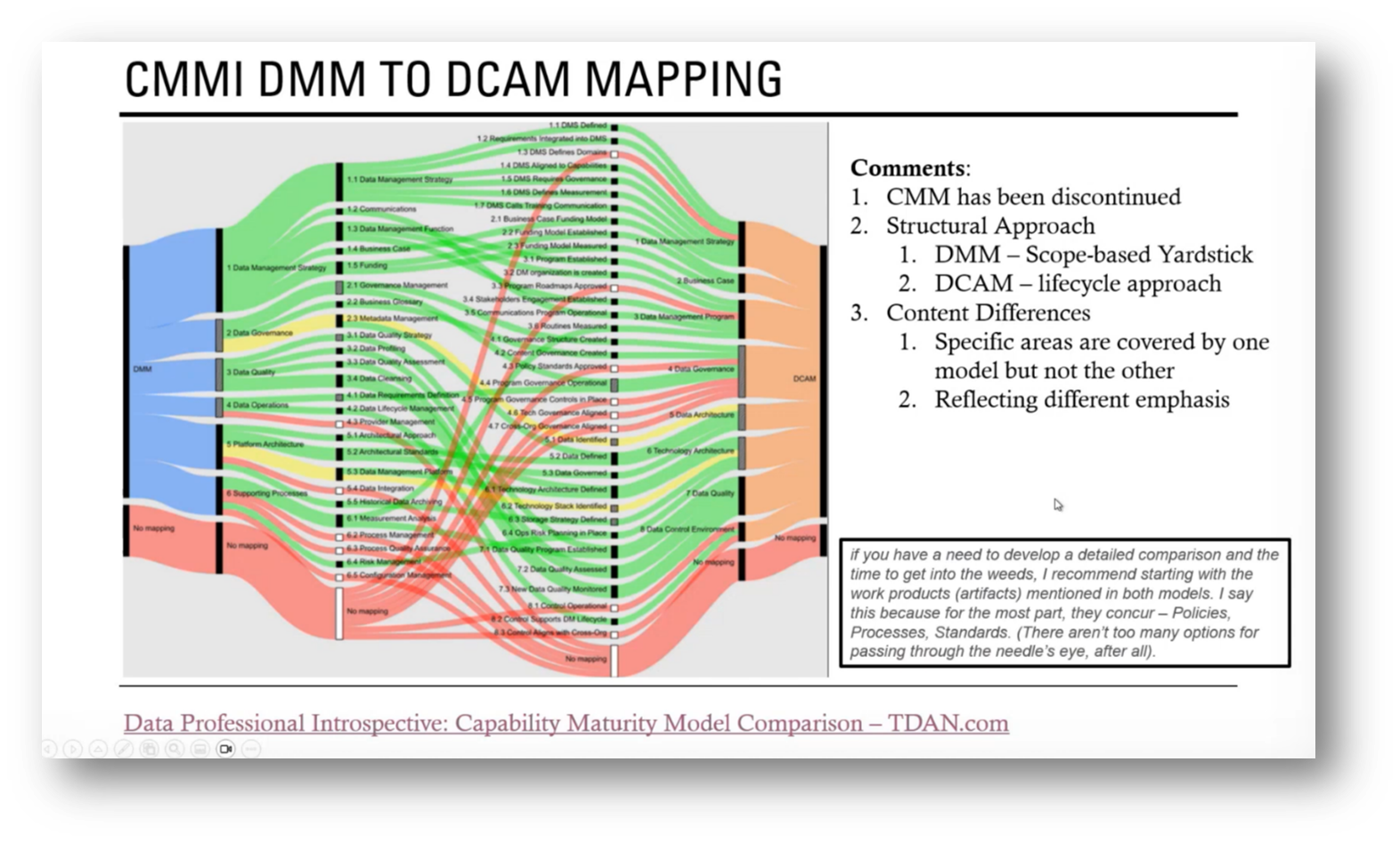

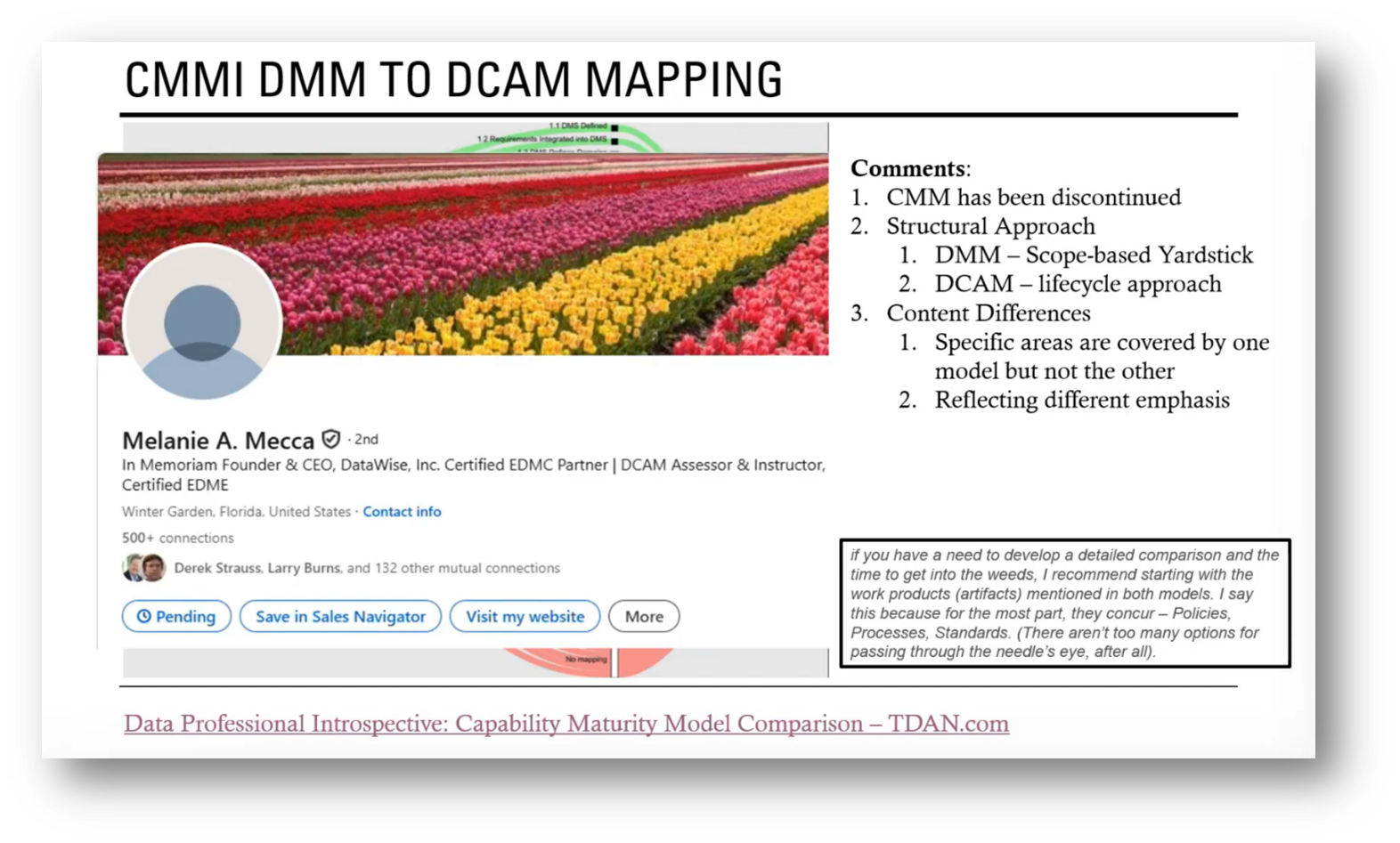

Last week, the focus was on data citizens and their use of AI, while this week emphasises the role of data professionals responsible for governance, maturity assessments, and compliance within organisations. Additionally, Howard discusses the challenges faced in reconciling frameworks such as the Data Management Maturity (DMM) model from CMMI and DCAM.

Melanie Mecca’s article highlights issues such as inconsistencies between tasks and deliverables and the difficulty of mapping scores between assessment models. While the DMM takes a scope-based approach addressing areas like data quality and strategy, DCAM emphasises a lifecycle perspective. The complexity of differentiating these frameworks and their purposes was particularly evident during a recent DCAM course, where distinctions between data governance, data management strategy, and related elements proved challenging to navigate.

Howard highlights the content differences between two data management models, specifically addressing the absence of AI considerations in the DCAM framework compared to others. He emphasises the importance of understanding the fundamental aspects of the data life cycle, which includes managing master data through data quality, governance, and integration.

The necessity of a solid business case for creating master data is also underlined, raising the question of whether existing data should be shared rather than new data created without justification. Notably, Melanie's article recommends focusing on the artifacts defined in each framework, such as policies, processes, and standards, as they are more universally understood than complex concepts like business case funding models. This approach enables more effective collaboration between the frameworks at the work-product level.

Figure 9 Data Professionals

Figure 10 CMMI DMM to DCAM Mapping

Figure 11 CMMI DMM to DCAM Mapping Pt.2

Figure 12 CMMI DMM to DCAM Mapping Pt.3

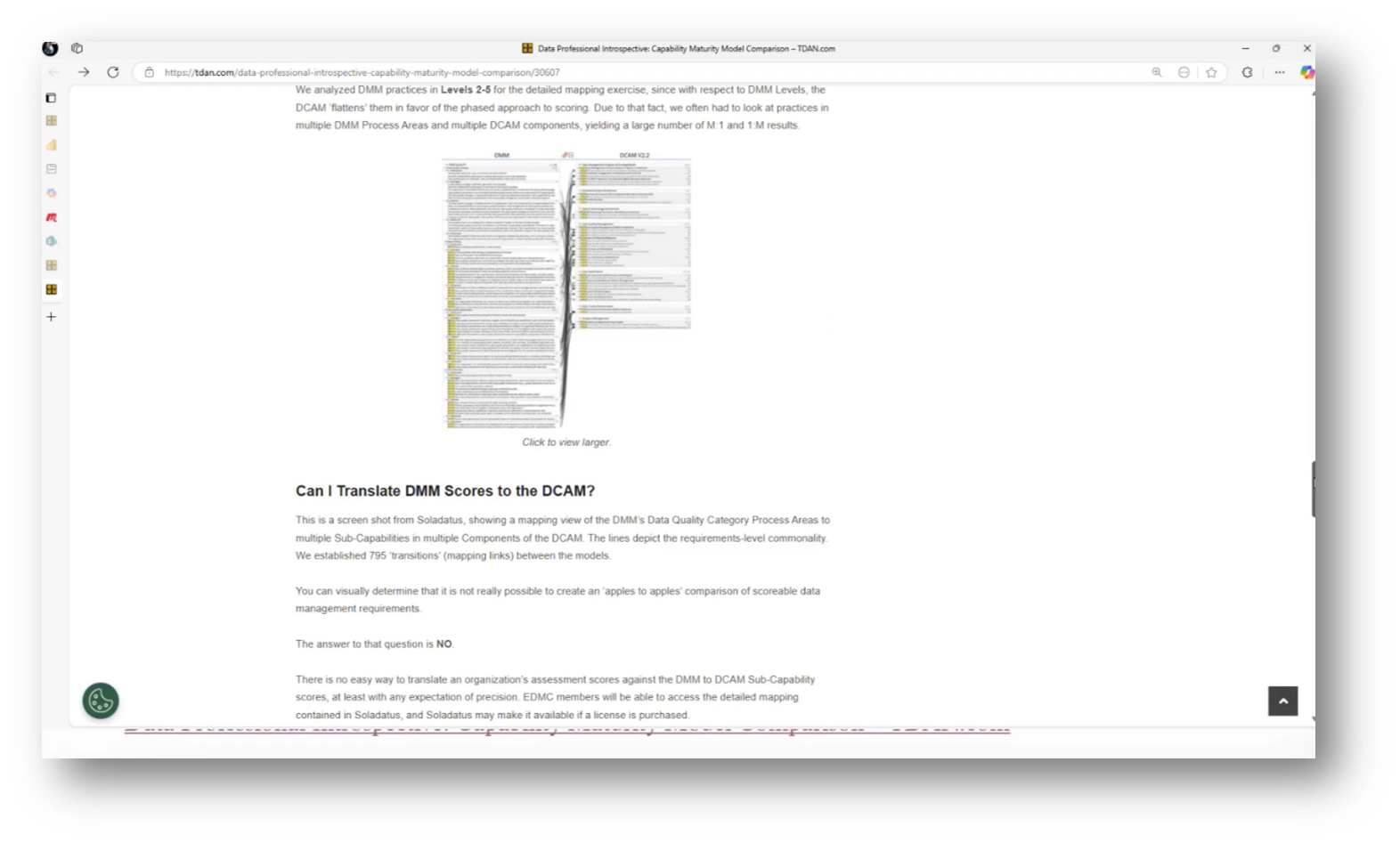

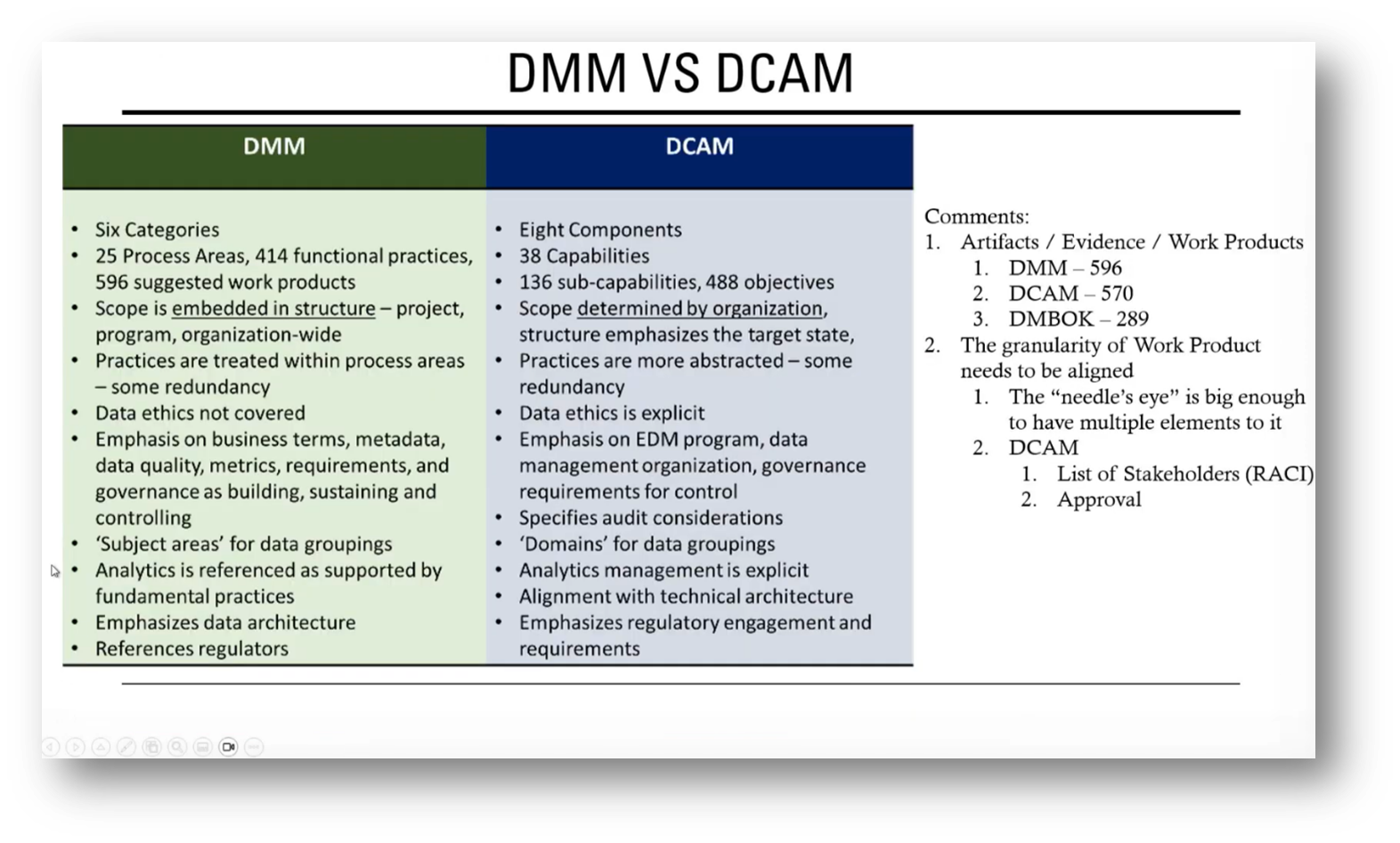

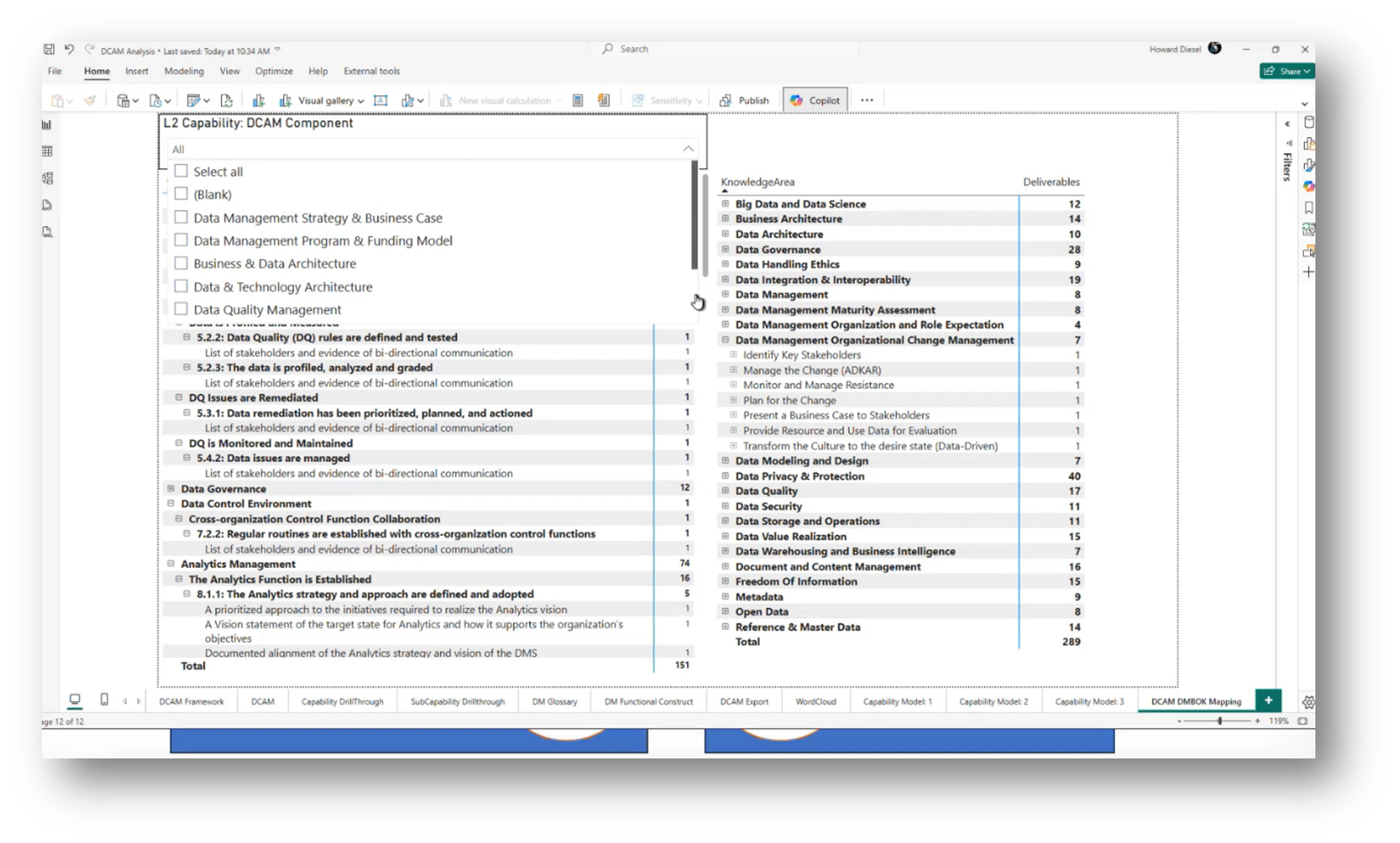

Differences and Challenges in Data Modelling

The recent passing of Melanie Mecca, a pioneering figure in data management, was felt deeply in the community, as highlighted in an April 2023 article on TDAN. Recognised as a certified EDM Council partner and the founder of the DMM project, which later evolved into DCAM, Melanie was instrumental in advancing data management methodologies. Her work included analysing the differences between the DMM and DCAM frameworks, using artifact-level mapping to bridge them, although she concluded that a direct translation was not feasible. Andrew notes that the DMM encompasses 596 work products, DCAM 570, and the DMBoK 289, reflecting the complexity of aligning these data management approaches.

The challenge of understanding the granularity of work products within DCAM involves recognising the various components that contribute to a comprehensive data strategy. Key artifacts such as budget alignment, stakeholder identification, escalation procedures, and communication processes all play a role in shaping an effective data strategy. However, DCAM requires a deeper examination of these elements and their alignment with specific evidence criteria.

This level of detail is crucial during assessments, as any missing components can result in unfavourable evaluations, which may be particularly sensitive for organisations, such as banks, that aspire to demonstrate their data management capabilities against industry standards. Hence, accurately aligning all elements is essential to ensure compliance and to uphold a strong reputation.

Figure 13 CMMI DMM to DCAM Mapping Pt.2

Figure 14 Melanie Mecca's Blog

Figure 15 DMM Vs DCAM

Figure 16 DCAM Framework

Figure 17 DCAM Framework Pt.2

Data Life Cycle and Data Governance Approaches

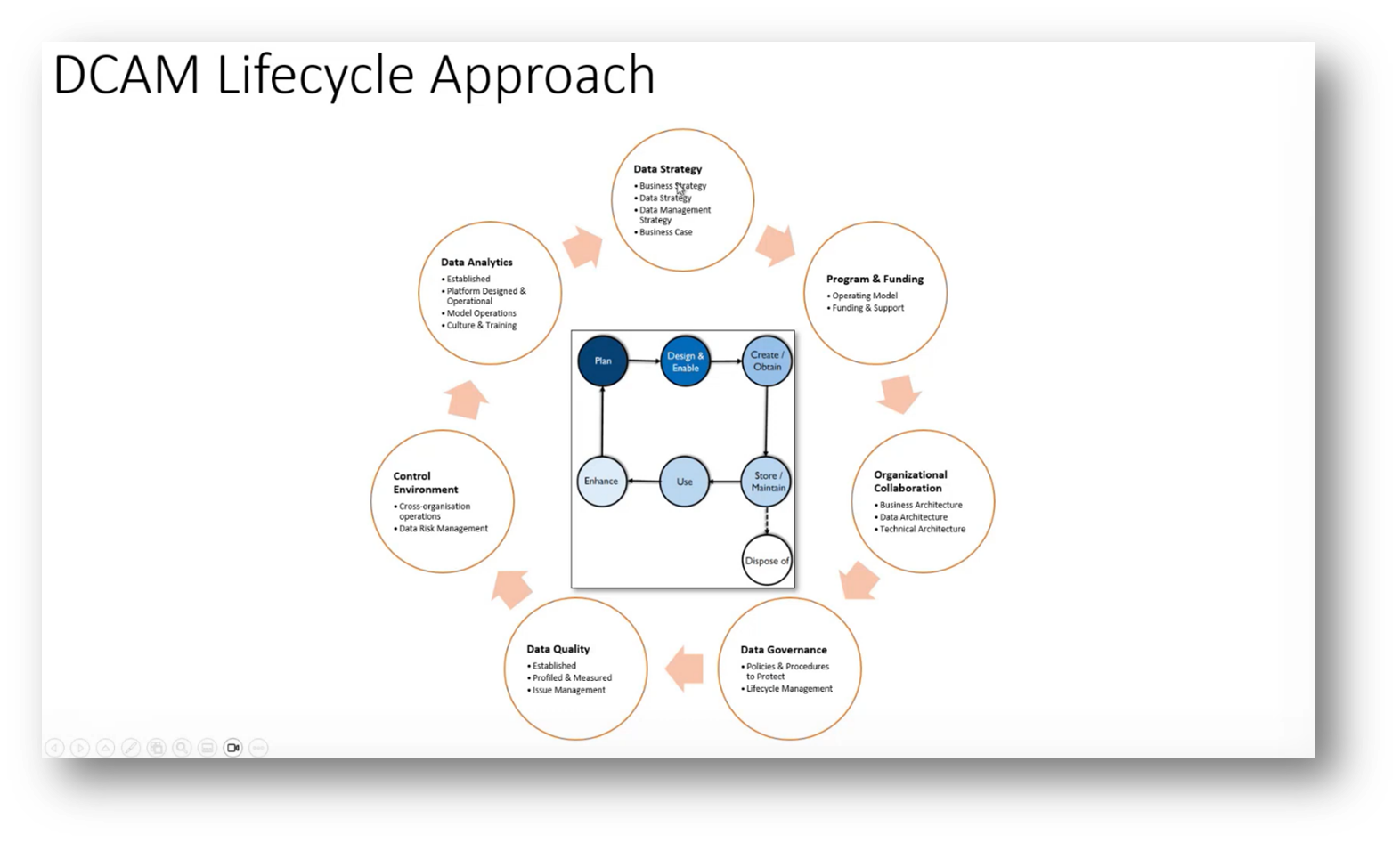

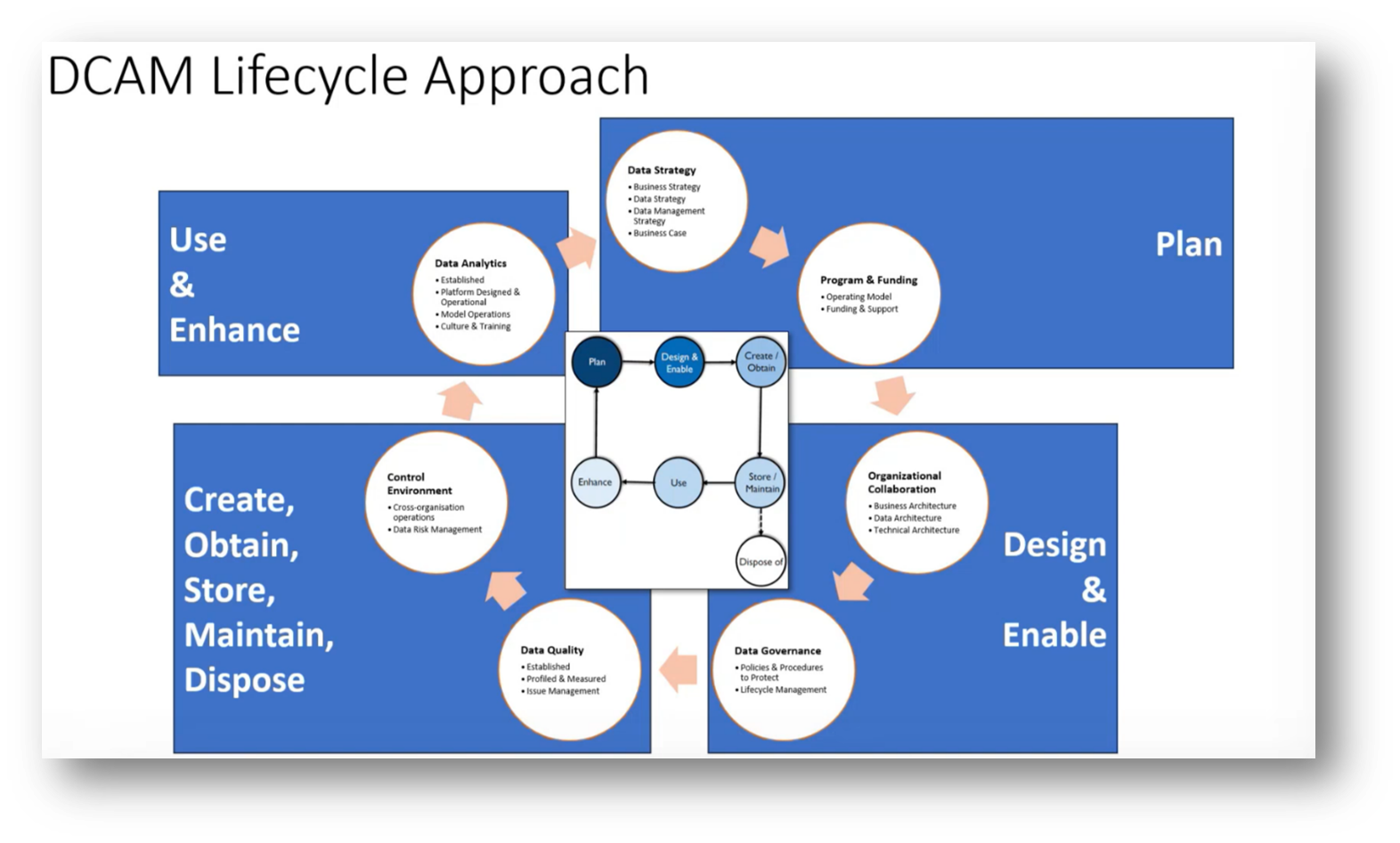

Howard explores the mapping and comparison of data life cycle management with the DCAM framework. He begins with an overview of the data life cycle, which encompasses stages from planning and designing to storing, using, enhancing, and disposing of data, while addressing the differences between data life cycle and data lineage.

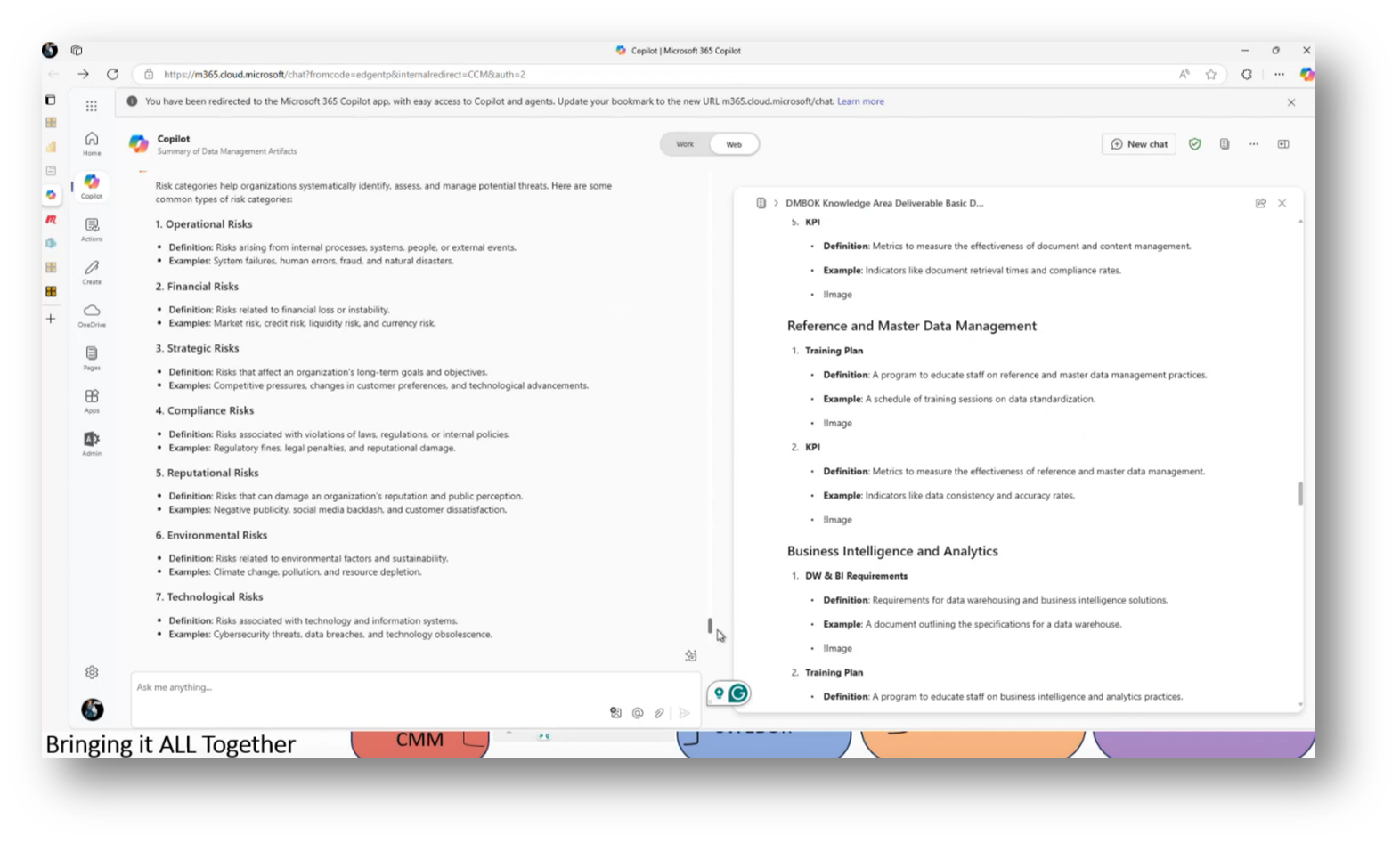

The DCAM framework consists of eight key components: data management strategy and business case, data management program and funding model, business and data architecture, data and technology architecture, data quality management, data governance, the data control environment (for managing risk and data movement), and analytics management. These components are categorised into planning, design, governance, and quality control, illustrating a structured approach to managing data throughout its life cycle.

The importance of quality data management is emphasised, encompassing data acquisition, storage, maintenance, and disposal, as foundational to effective data strategies and analytics. An attendee highlights the alignment between DCAM and The Open Group Architecture Framework (TOGAF), noting the need for data governance to permeate every phase of the data life cycle rather than being overly concentrated in the analytics phase. They point out concerns regarding the overlap of analytics management with broader knowledge areas, suggesting that many considerations should be addressed in the planning phase. The importance of a cohesive strategy, operating model, and governance practices is reiterated as critical for enhancing data analytics while ensuring that capabilities are integrated throughout the entire process, not just isolated in the analytical stage.

Figure 18 DCAM Lifecycle Approach

Figure 19 DCAM Lifecycle Approach Pt.2

Figure 20 DCAM DMBoK Mapping

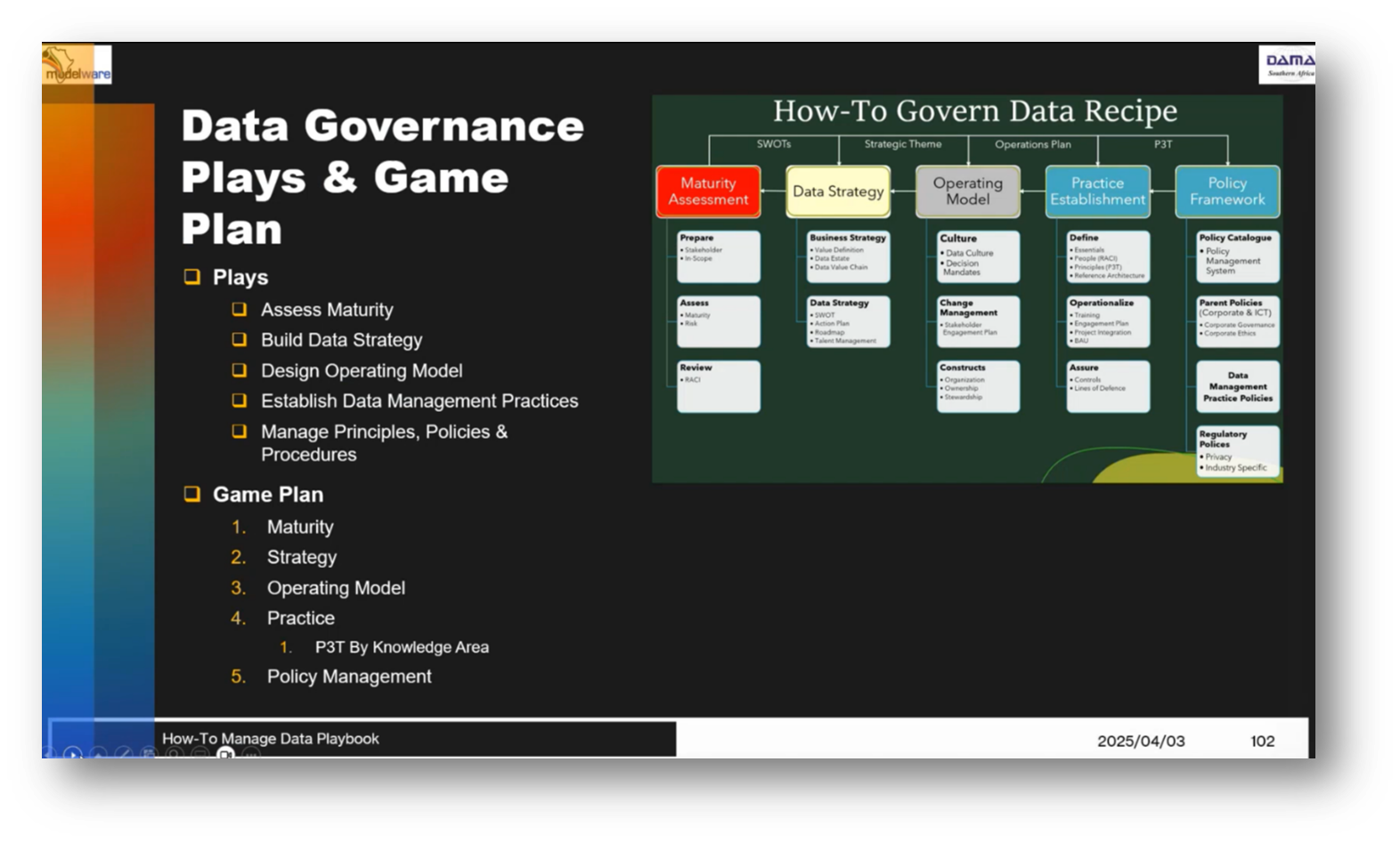

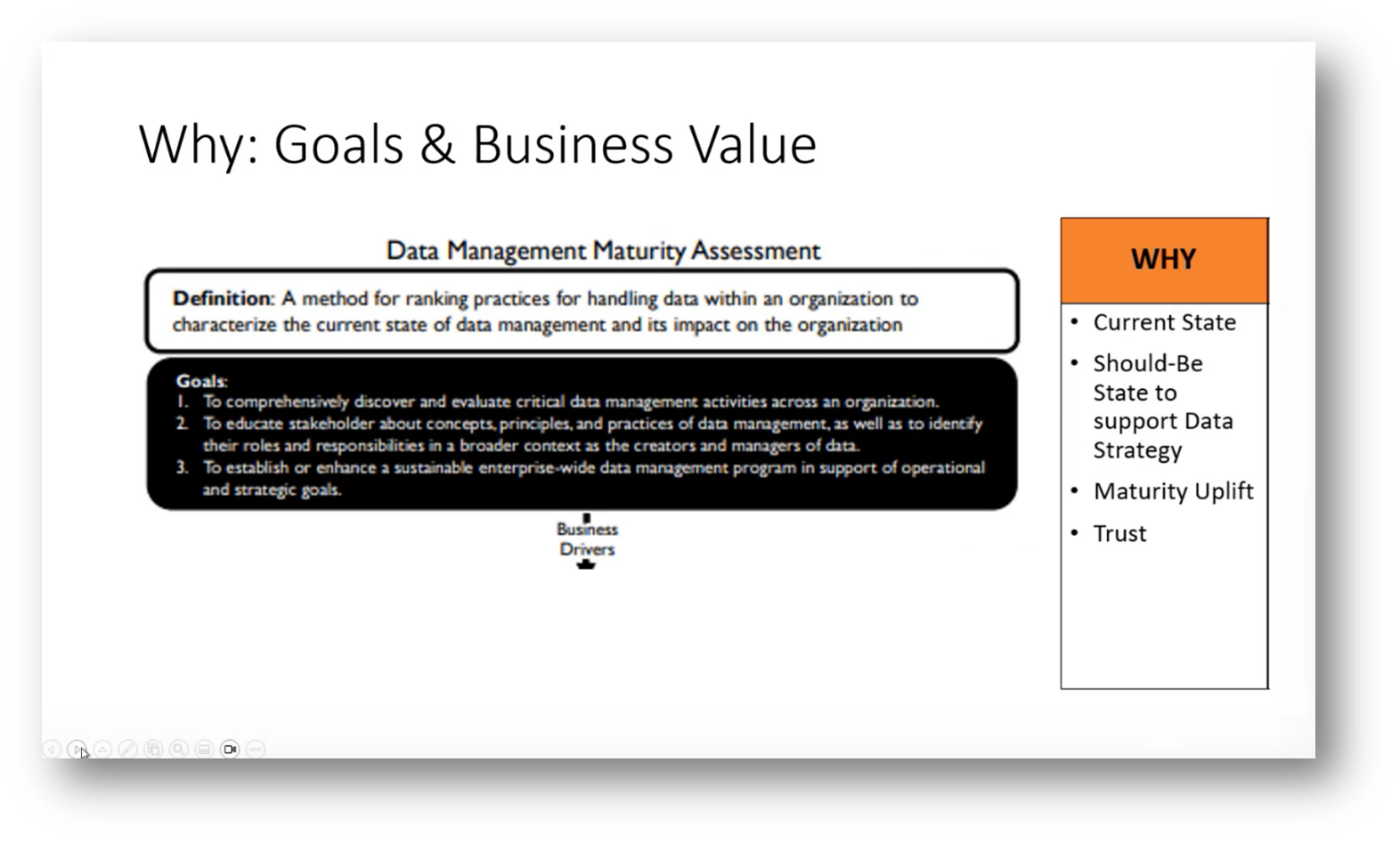

The Purpose and Structure of Data Management Maturity Assessment

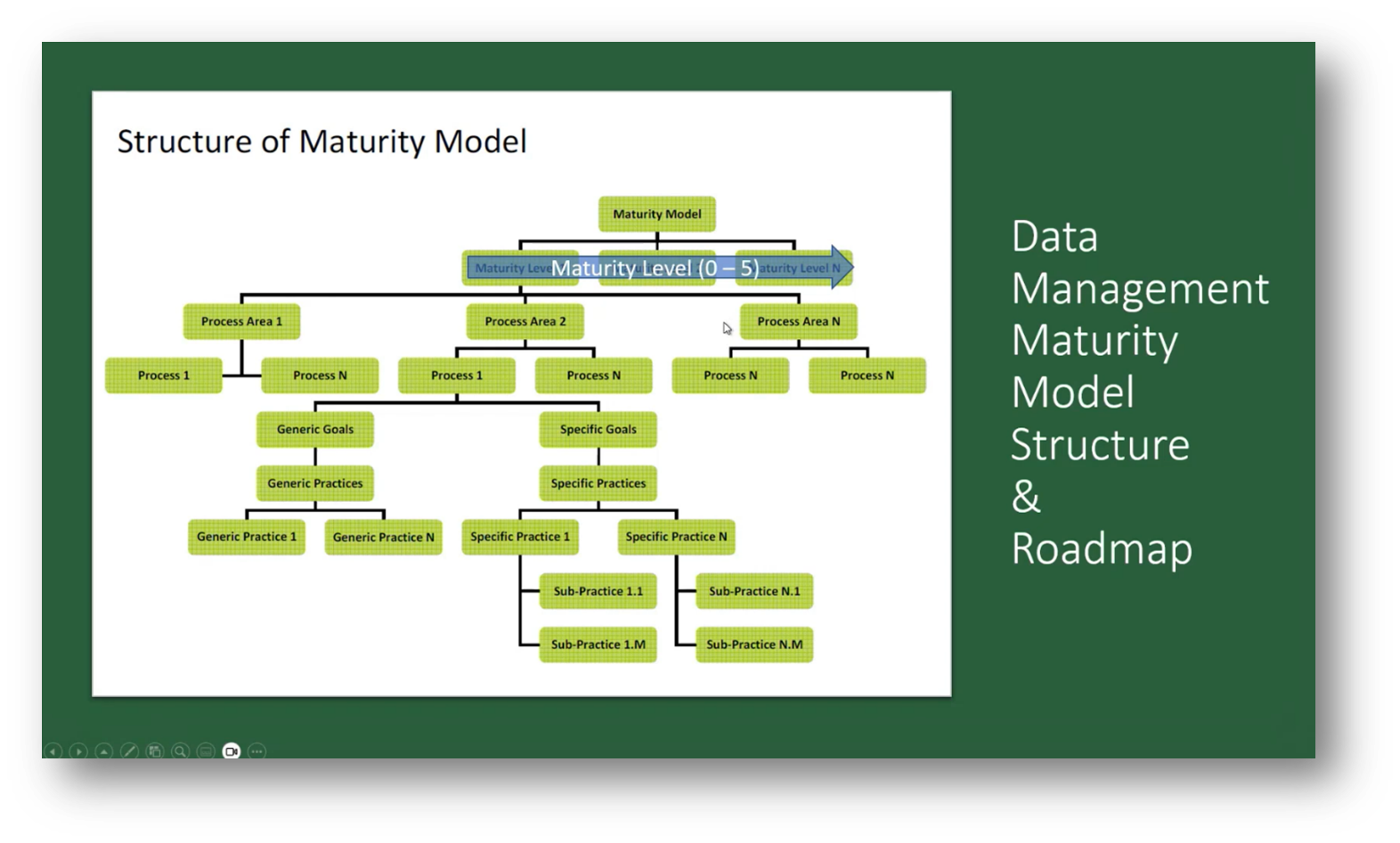

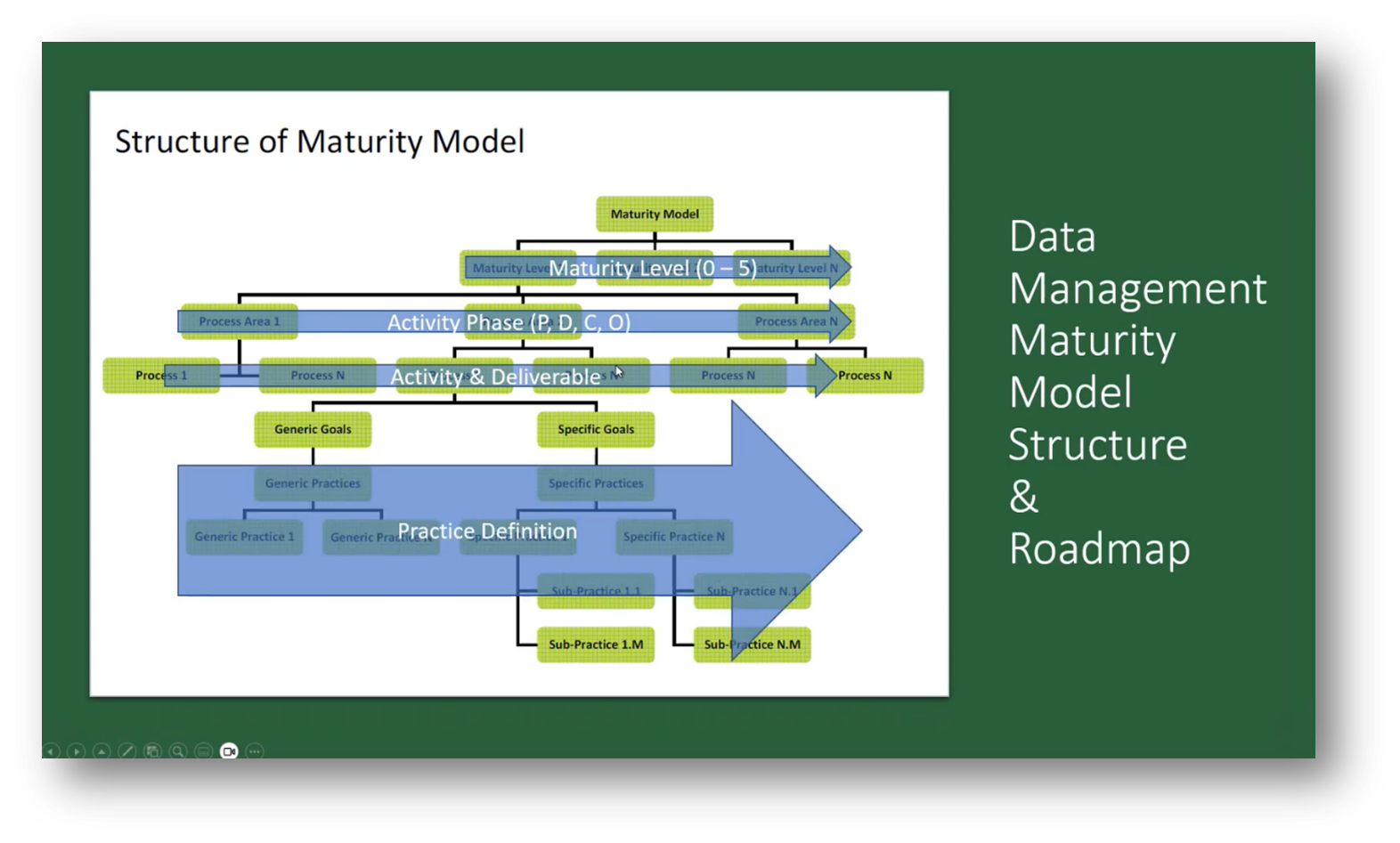

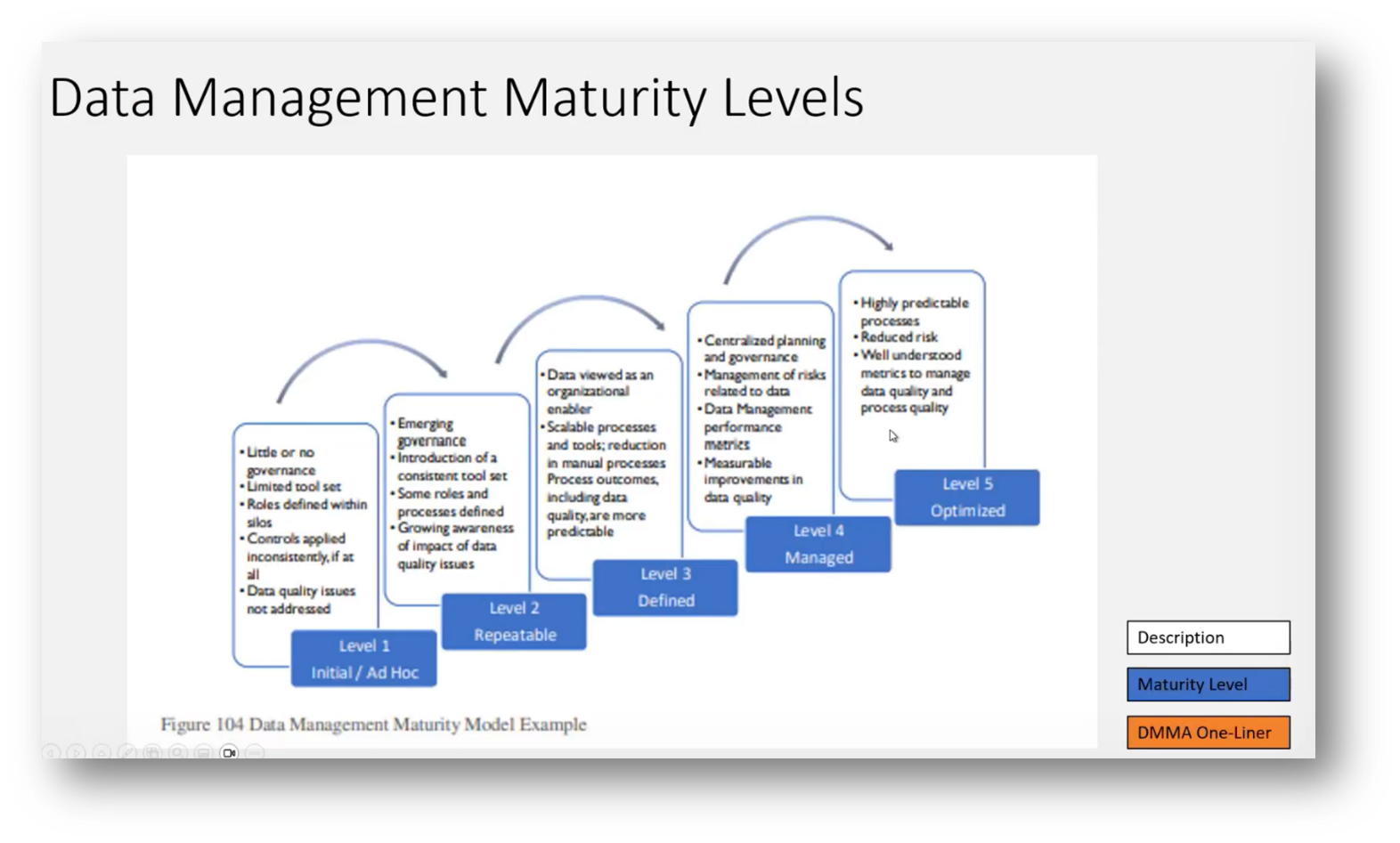

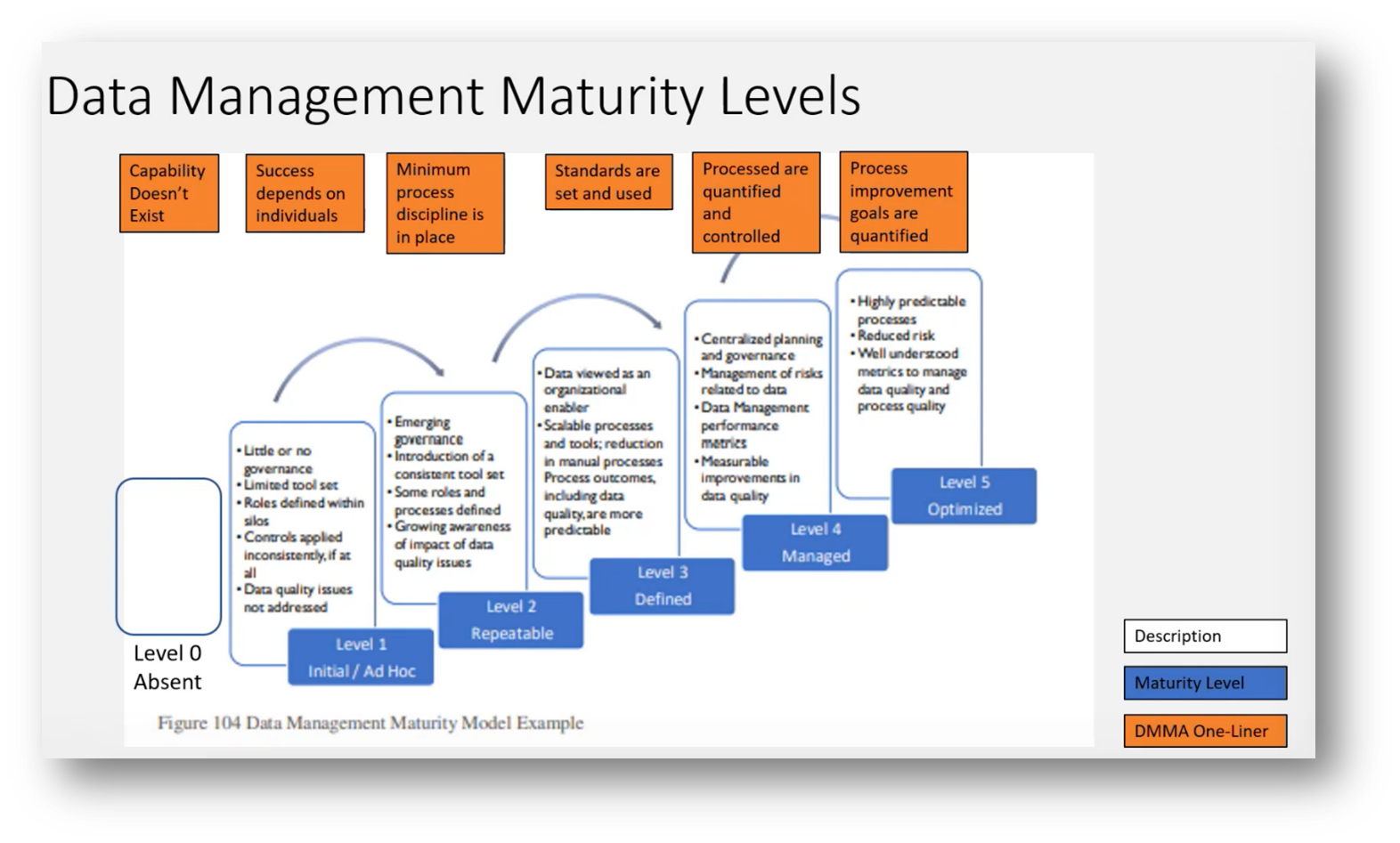

A data management maturity assessment, as defined by the DMBoK, evaluates an organisation's current data management practices rather than its capabilities. It serves as a method for ranking these practices to identify the necessary maturity uplift required to align with data strategies and compliance standards, such as BCBS, while fostering organisational trust.

The maturity model categorises processes into levels, emphasising the procedures, policies, and delivery mechanisms needed for effective data management. Notably, the model has no "level 0" denoting a complete absence of data practices: even at the lowest level, practices exist, although the organisation may rely heavily on individual expertise rather than defined processes. A score of one out of five therefore reflects a dependency on key individuals rather than a lack of potential, highlighting the need for improved knowledge management and process controls.
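Identifying the "maturity uplift" can be sketched as a simple gap calculation. This is a minimal illustration, assuming each capability has a current assessed level and a target level on the 1-to-5 scale; the capability names, levels and the `maturity_uplift` helper are hypothetical and are not taken from the DMBoK or the webinar.

```python
from dataclasses import dataclass


@dataclass
class CapabilityScore:
    name: str
    current: int  # assessed level today (hypothetical 1-5 scale, no level 0)
    target: int   # level required by the data strategy or a compliance standard


def maturity_uplift(scores: list[CapabilityScore]) -> list[tuple[str, int]]:
    """Return (capability, levels of uplift needed), largest gap first.

    Capabilities already at or above their target are omitted.
    """
    for s in scores:
        if not (1 <= s.current <= 5 and 1 <= s.target <= 5):
            raise ValueError(f"{s.name}: levels must be between 1 and 5")
    gaps = [(s.name, s.target - s.current) for s in scores if s.target > s.current]
    # Largest gap first; ties broken alphabetically for a stable ordering.
    return sorted(gaps, key=lambda g: (-g[1], g[0]))


scores = [
    CapabilityScore("Data governance", current=1, target=3),
    CapabilityScore("Data quality", current=2, target=3),
    CapabilityScore("Metadata management", current=3, target=3),
]
print(maturity_uplift(scores))  # [('Data governance', 2), ('Data quality', 1)]
```

Ranking by gap size is only one way to prioritise; in practice the uplift would also be weighed against the business risk of each capability.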

Figure 21 Data Governance Plays & Game Plan

Figure 22 Why: Goals & Business Value

Figure 23 Data Management Maturity Model Structure & Roadmap

Figure 24 Data Management Maturity Model Structure & Roadmap Pt.2

Figure 25 Data Management Maturity Levels

Figure 26 Data Management Maturity Levels Pt.2

Data Governance in the Organisation

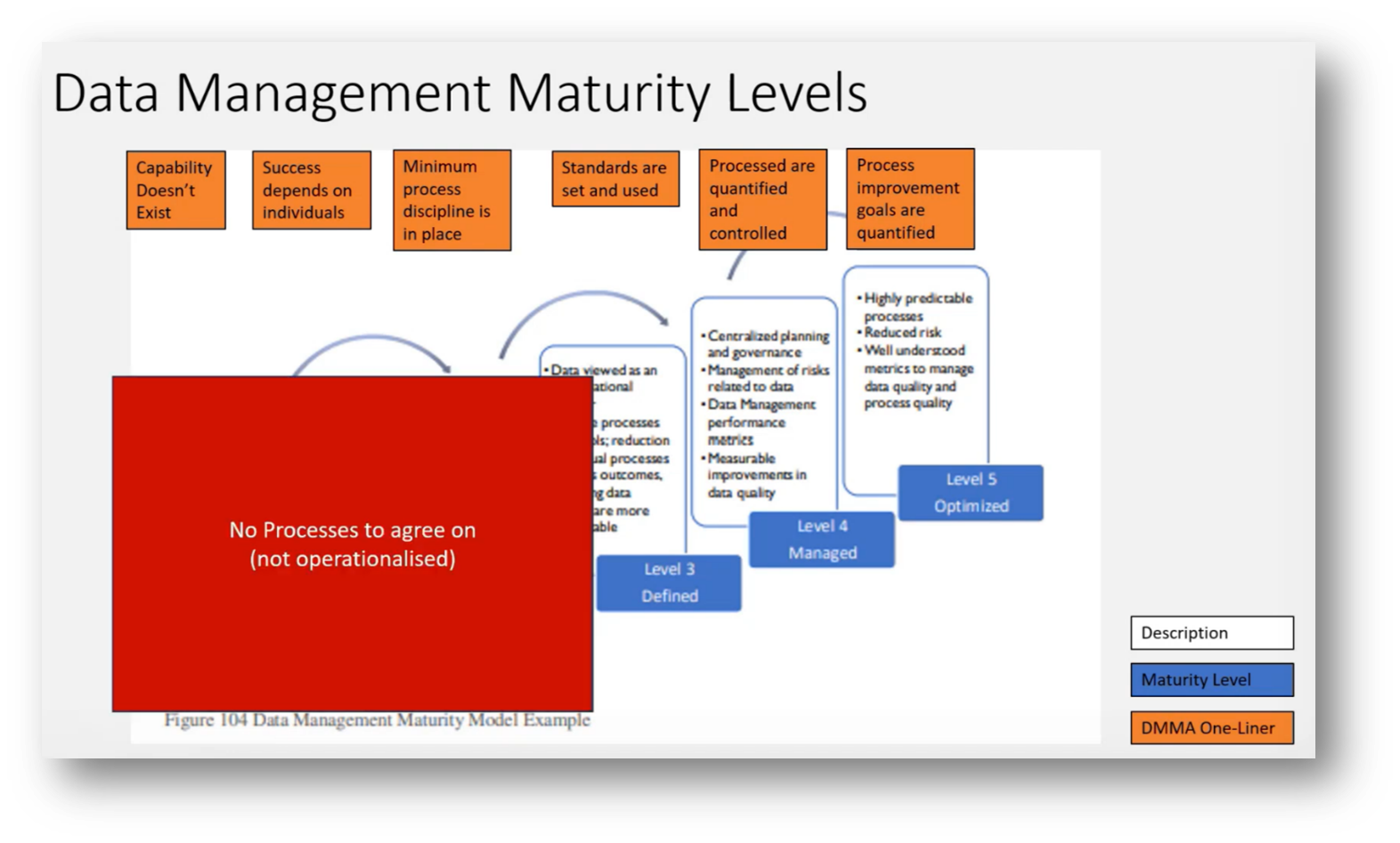

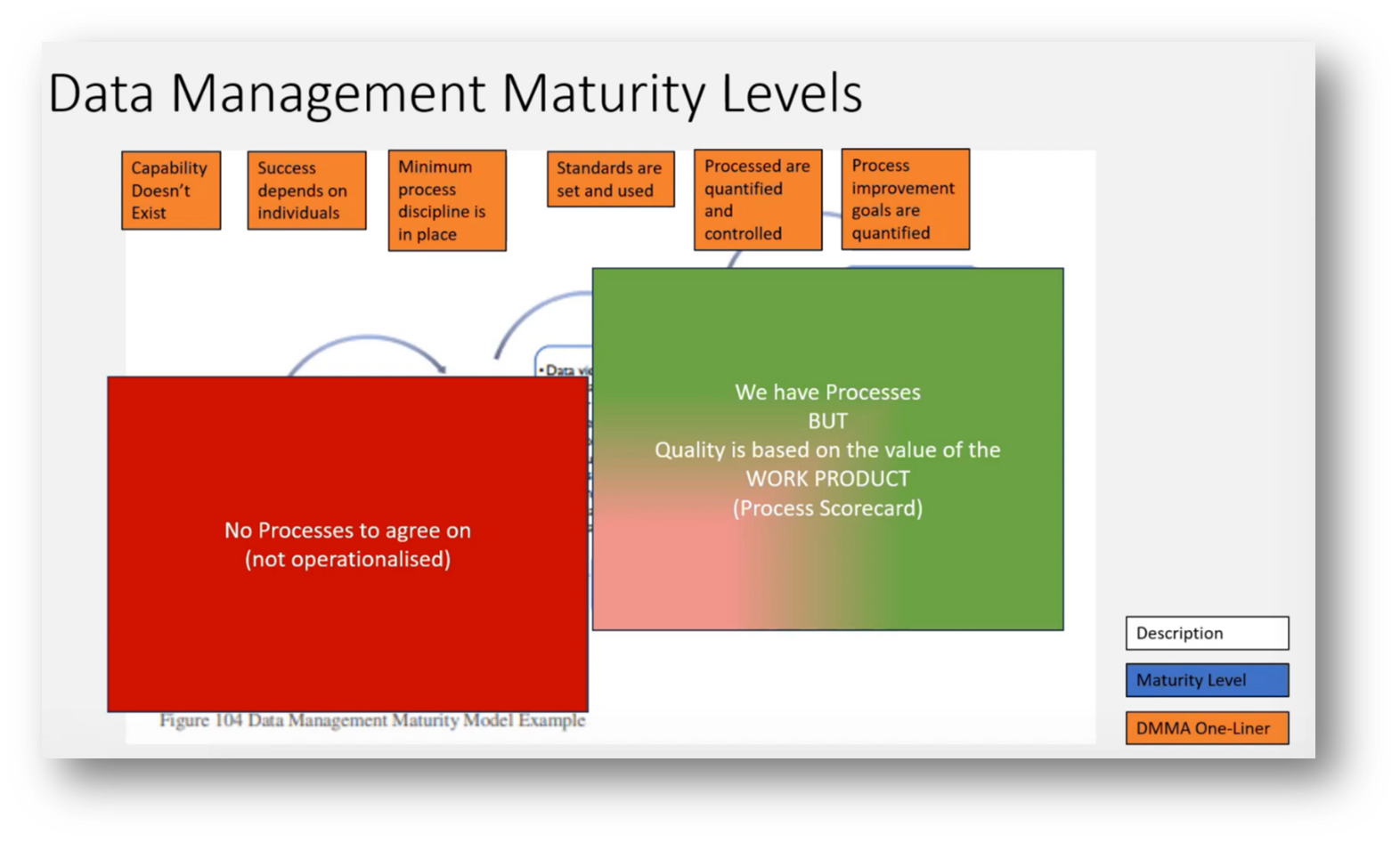

Howard highlights the challenges organisations face in establishing effective data governance, particularly in the context of master data and process standardisation. He emphasises that, despite having governance policies and procedures, many business units lack a clear understanding of concepts such as master data, controls, and the role of data stewards, often resulting in a classification at levels 1 or 2 of data governance maturity.

Additionally, Howard points out that although processes may exist, they are not operationalised or standardised, leading to confusion and a lack of agreement on best practices. Moving towards a level 3 maturity, where standards are set and recognised, is crucial for improving data processes. Comprehensive training and engagement with stakeholders are essential to bridge these knowledge gaps and enhance overall data management effectiveness.

Figure 27 Data Management Maturity Levels Pt.3

Implementing Quality Control and Governance in Data Management

The importance of quality control and quantification in evaluating data work products through a structured process, such as a data modelling scorecard, is emphasised. This scorecard assesses compliance with standards, indicating areas for improvement as identified by data governance and architects. The conversation also highlights the challenges faced by professionals unfamiliar with data management frameworks like DMBoK, CMM, and TDWI.
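A data modelling scorecard of this kind can be approximated as a weighted sum of per-criterion compliance ratings. The sketch below assumes hypothetical criteria, weights and a 0.7 improvement threshold; an organisation would substitute its own modelling standards and the weightings agreed with data governance and the architects.

```python
# Hypothetical criteria and weights (summing to 1.0); not a published scorecard.
CRITERIA_WEIGHTS = {
    "Meets business requirements": 0.4,
    "Follows naming standards": 0.2,
    "Definitions are complete": 0.2,
    "Metadata captured": 0.2,
}


def score_model(ratings: dict[str, float], threshold: float = 0.7):
    """Combine per-criterion ratings (0.0-1.0) into a weighted score.

    Returns (overall score, criteria rated below the threshold).
    """
    missing = set(CRITERIA_WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    overall = sum(CRITERIA_WEIGHTS[c] * ratings[c] for c in CRITERIA_WEIGHTS)
    # Criteria below the threshold are the areas flagged for improvement.
    improve = [c for c in CRITERIA_WEIGHTS if ratings[c] < threshold]
    return round(overall, 2), improve


overall, improve = score_model({
    "Meets business requirements": 0.9,
    "Follows naming standards": 0.5,
    "Definitions are complete": 0.8,
    "Metadata captured": 0.6,
})
print(overall, improve)
```

Keeping the improvement list alongside the overall score matters: a respectable total can still hide specific standards that are not being met.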

A critical point made is the misconception surrounding Master Data Management (MDM) tools; organisations at lower maturity levels may prematurely invest in these systems without a solid understanding of their data, leading to long-term issues. It is recommended that robust data management processes be established before implementing MDM tools, ideally once organisations reach Level 3 maturity.

Figure 28 Data Management Maturity Levels Pt.4

Challenging Aspects of Maturity Assessments

Howard reflects on past experiences with maturity assessments in the banking sector, highlighting the emotional challenges associated with such evaluations. He recalls his engagement with banks, utilising various frameworks and recognising the difficulties faced, particularly from leadership perspectives.

An attendee expresses frustrations over the assessments, feeling they often lead to negative feedback rather than constructive solutions, suggesting that management consultants focus more on highlighting deficiencies to justify their services. Overall, the conversation underscores the importance of approaching maturity assessments with caution due to their potential emotional impact.

Data Management and Assessment Strategies in the Digital Age

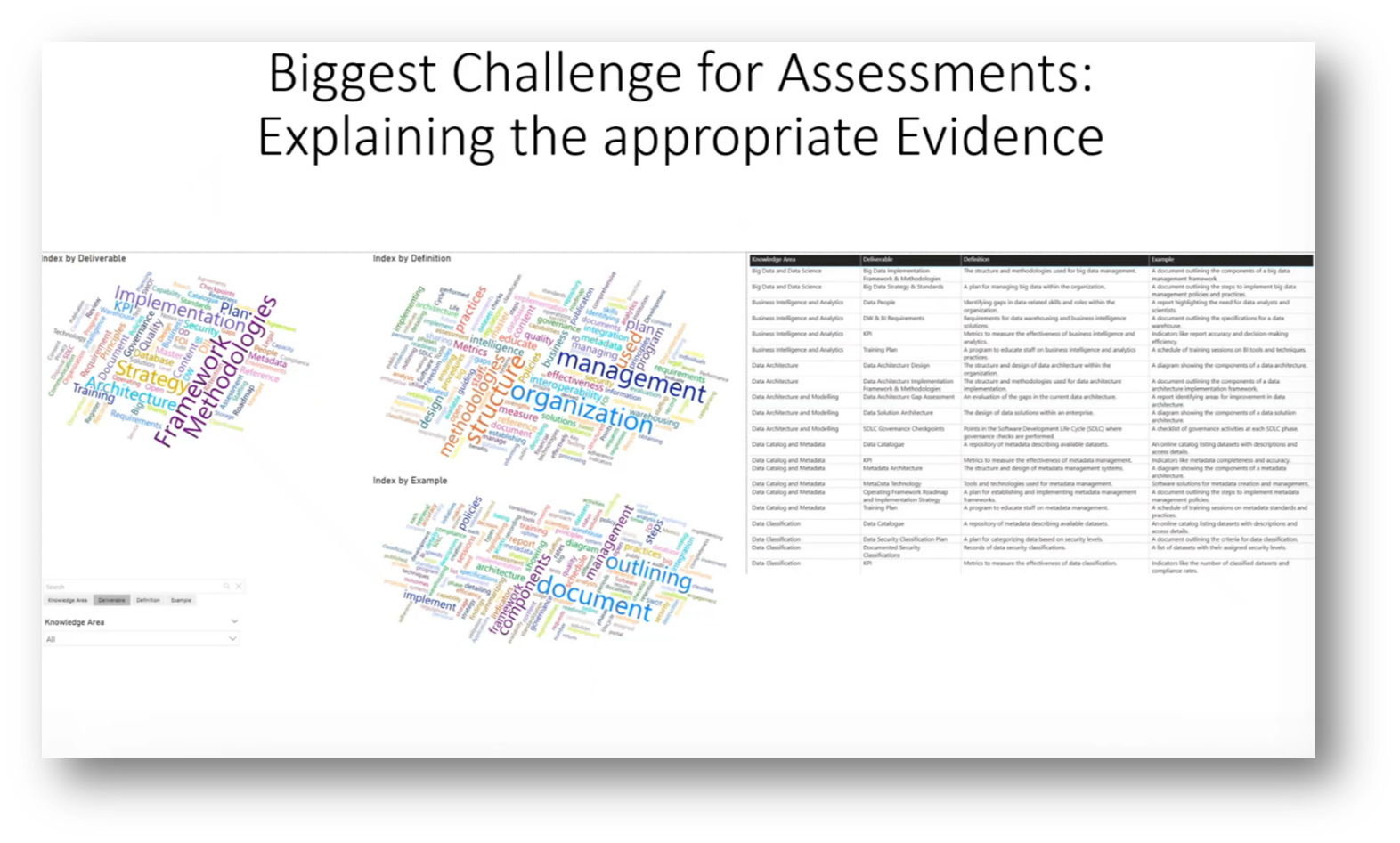

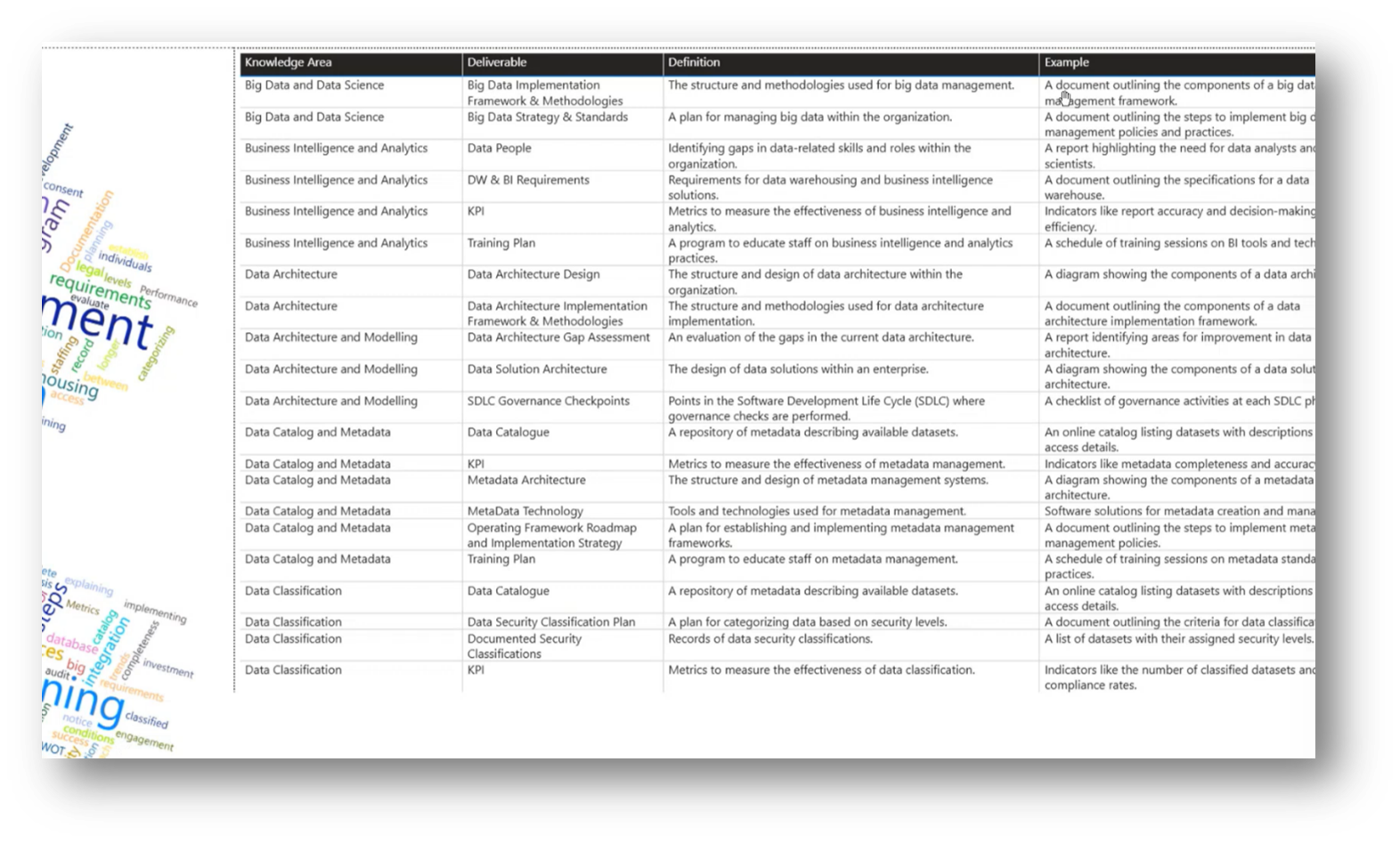

Concerns are highlighted regarding the lengthy process of conducting maturity assessments. Howard emphasises that in today’s fast-paced digital environment, these assessments should ideally not exceed one month. An attendee expresses the need for a well-prepared approach, ensuring participants are equipped with the necessary understanding and materials in advance. To facilitate this, a library of required evidence, including implementation frameworks and strategies for big data and data science, will be developed, providing clear definitions, examples, and templates to streamline the process.

The process of conducting assessments and collecting data should be streamlined and efficient, allowing stakeholders to understand the purpose without requiring lengthy procedures. Utilising self-assessment tools, such as Excel spreadsheets, enables participants to complete their own evaluations, after which evidence is requested if there's a significant discrepancy in self-scores compared to others. The urgency in providing evidence—within a day—encourages accountability. Lessons learned from experiences in the payment card industry emphasise the importance of upfront clarity in requirements to enhance efficiency and reduce the duration of audits. Implementing quarterly self-assessments for data management maturity, with evidence reviews as needed, can significantly improve the assessment process.
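The evidence-request rule described above (ask for evidence when a self-score is out of line with everyone else's) can be expressed in a few lines. This sketch assumes that a "significant discrepancy" means a gap of more than one maturity level from the median of the other respondents; both the threshold and the `flag_for_evidence` helper are illustrative assumptions rather than part of any framework.

```python
from statistics import median


def flag_for_evidence(self_scores: dict[str, float], max_gap: float = 1.0) -> list[str]:
    """Return respondents whose self-score differs from the median of the
    other respondents by more than max_gap levels; these are asked for evidence."""
    if len(self_scores) < 2:
        return []  # nothing to compare against
    flagged = []
    for person, score in self_scores.items():
        others = [s for p, s in self_scores.items() if p != person]
        if abs(score - median(others)) > max_gap:
            flagged.append(person)
    return flagged


scores = {"Business": 4, "IT": 2, "Audit": 1, "Data office": 2}
print(flag_for_evidence(scores))  # ['Business']
```

Using the median of the others, rather than the mean, keeps a single very harsh or very generous respondent from shifting the baseline for everyone else.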

Figure 29 Biggest Challenge for Assessments

Figure 30 Biggest Challenge for Assessments Pt.2

Data Maturity and Accountability in Organisations

The idea revolves around creating an app that allows users to generate self-assessments related to their organisation's maturity levels; however, discrepancies often arise in scoring, as evaluations can vary significantly among individuals—some may rate a process as a Level 3 while others view it as a zero.

This inconsistency can be influenced by varying perspectives, with some people being overly critical, like internal auditors, while others may offer more favourable scores to portray a positive image. To address these challenges, it is essential to engage regularly with business, IT, and audit teams to establish a clear glossary of definitions and standards specific to the organisation. Effective assessment depends on comprehensive documentation, such as policies, procedures, evidence of approval processes, and relationships with architects, to ensure that the maturity levels accurately reflect the organisation's capabilities.

An attendee highlights the importance of effectively challenging stakeholders to ensure accurate reporting on data maturity levels. They emphasise the value of utilising different frameworks, such as the PDMA and SFIA skill levels, to provide clarity and establish a shared understanding of maturity levels among team members. The attendee also notes the significance of clear communication regarding the objectives of data collection, as misunderstandings can lead to resistance from business personnel who may question the necessity of their involvement. They share another experience where discrepancies in data maturity levels were addressed rigorously, ultimately reinforcing the need for consistent evidence to support claims. The conversation underscored the continual learning process involved in managing stakeholder engagement and aligning perceptions of data maturity.

Maturity Assessment and Data Management Strategies

Howard highlights the importance of establishing a clear scope with the business when conducting maturity assessments, as businesses often compare results across departments, leading to discomfort with ratings. He notes that some organisations prefer a gap assessment approach, evaluating the effectiveness of controls based on defined policies rather than adopting a maturity level framework, which can generate more debate. Additionally, the shift towards a risk-based approach requires focusing on the risks and impacts of current practices. Howard emphasises the value of DCAM, which encourages organisations to manage data throughout its entire lifecycle, ensuring comprehensive oversight and management rather than focusing solely on specific phases.

Understanding Effective Data Modelling and Compliance

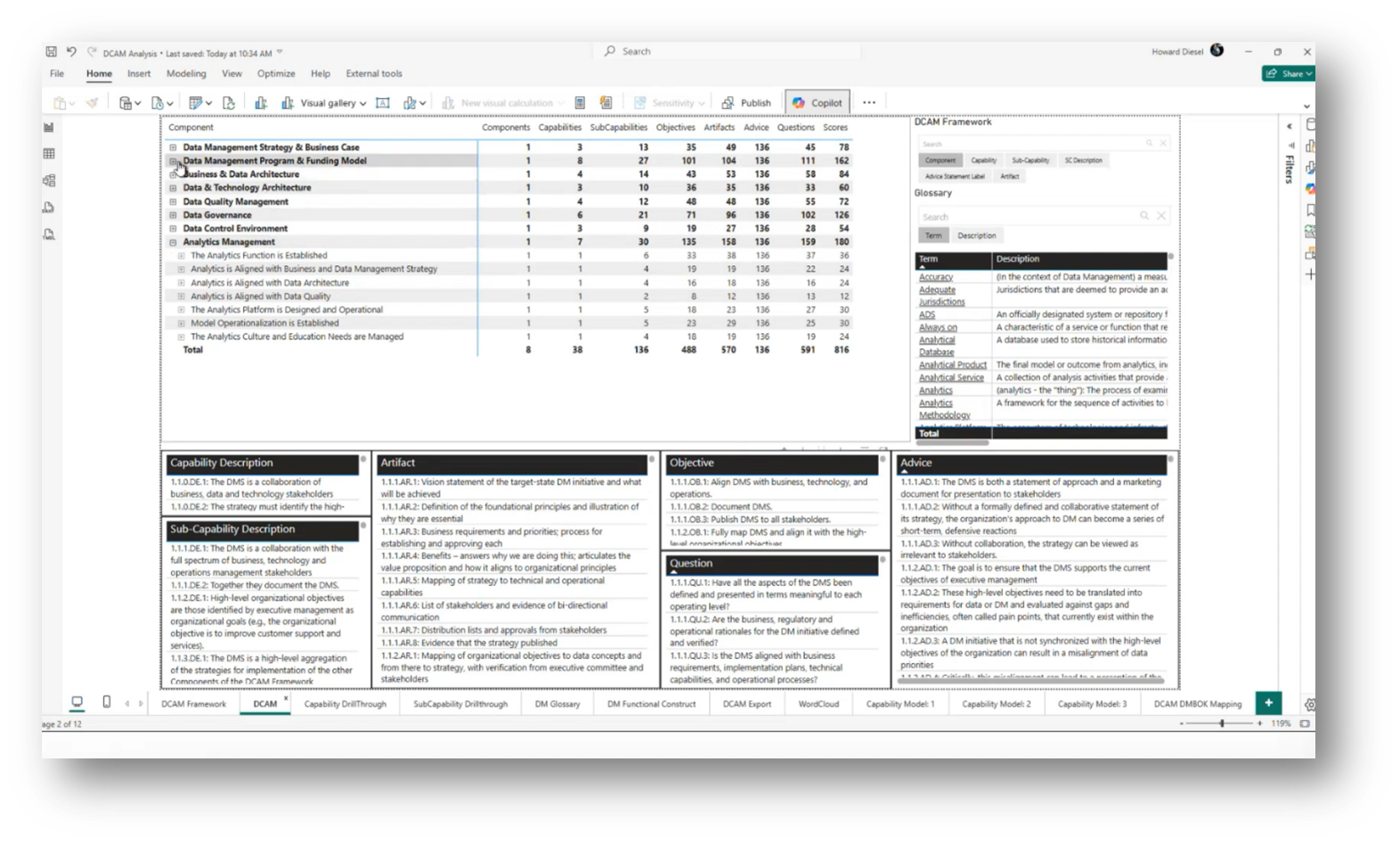

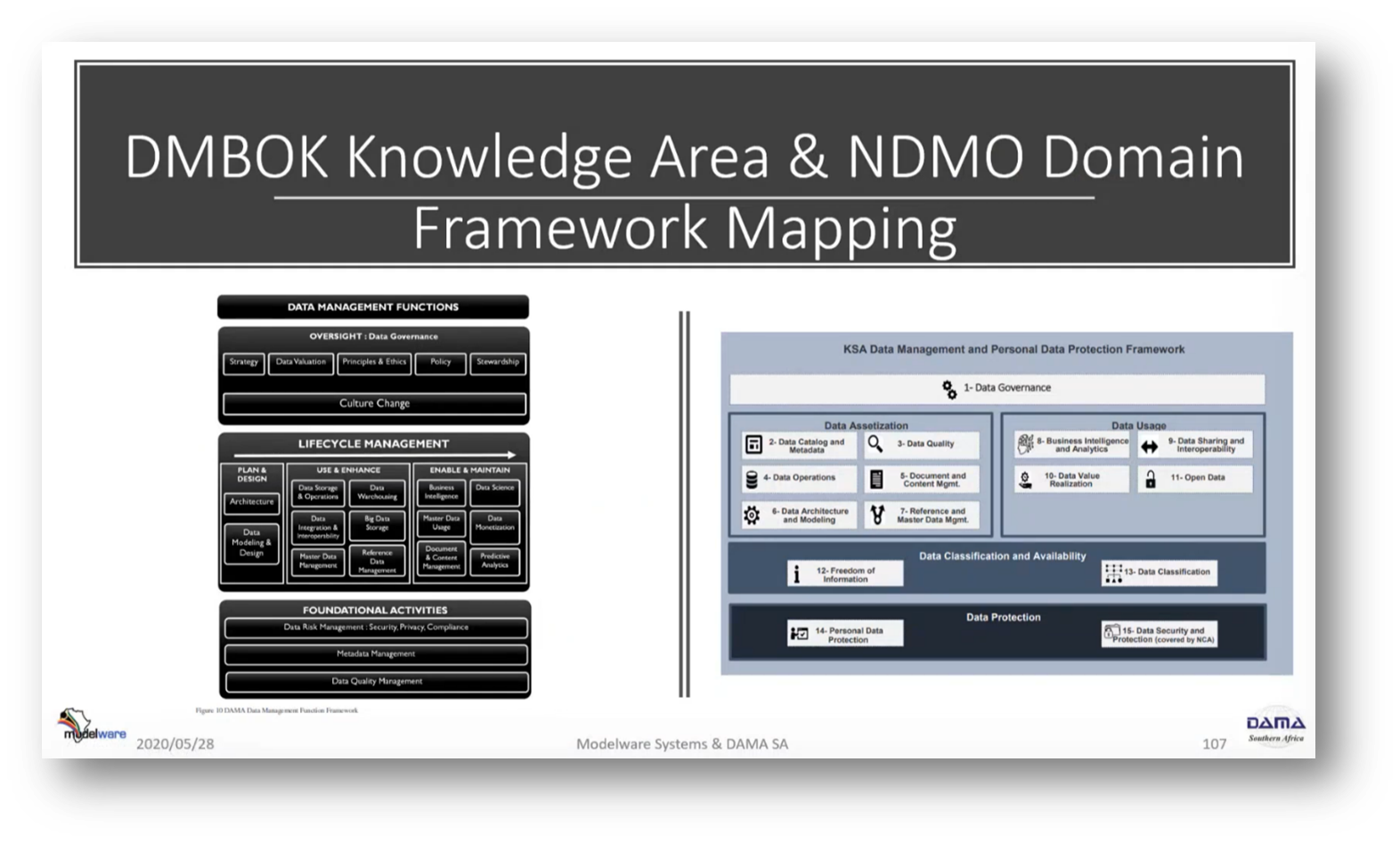

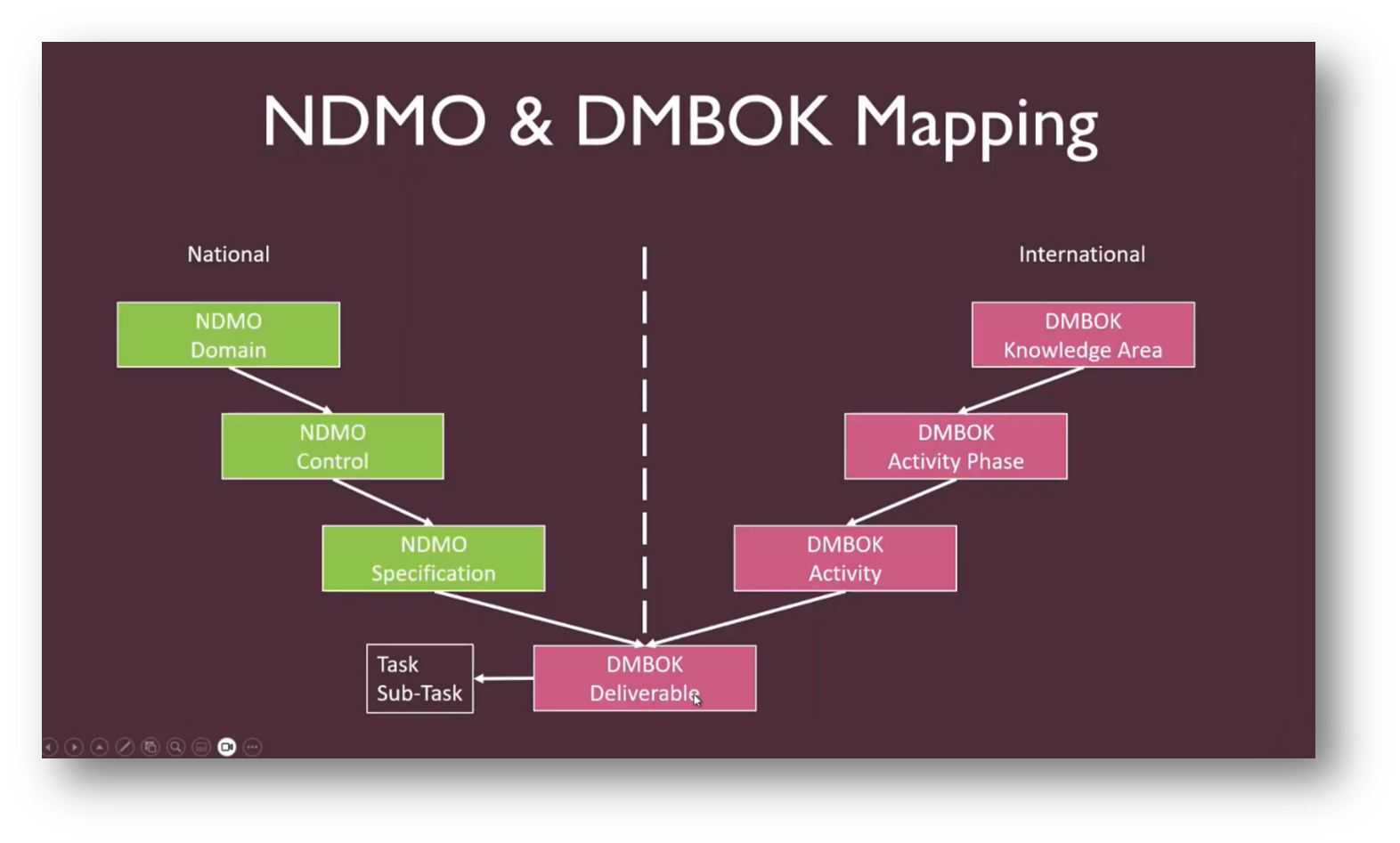

The approach taken with the DMBoK involved a thorough analysis of the NDMO specifications to ensure alignment with deliverables, emphasising the need for evidence of data modelling outcomes rather than merely completed processes. Loading the findings into Power BI made it clear which specifications required specific operational plans and how efforts could be consolidated across domains.
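The analysis itself was done in Power BI; the same grouping logic is sketched here in Python purely for illustration. The specification IDs, knowledge-area assignments and evidence flags below are hypothetical placeholders, not actual NDMO specifications.

```python
from collections import defaultdict

# Hypothetical rows: (specification ID, DMBoK knowledge area, deliverable evidence exists?)
mapping = [
    ("SPEC-01", "Data Modelling & Design", True),
    ("SPEC-02", "Data Modelling & Design", False),
    ("SPEC-03", "Data Quality", False),
    ("SPEC-04", "Metadata Management", True),
]


def specs_needing_plans(rows):
    """Group specifications that lack deliverable evidence by knowledge area,
    so related work can be consolidated into one operational plan per area."""
    plans = defaultdict(list)
    for spec_id, area, has_evidence in rows:
        if not has_evidence:
            plans[area].append(spec_id)
    return dict(plans)


print(specs_needing_plans(mapping))
```

Grouping by knowledge area rather than by specification is what allows several requirements to be satisfied by a single plan and a single body of evidence.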

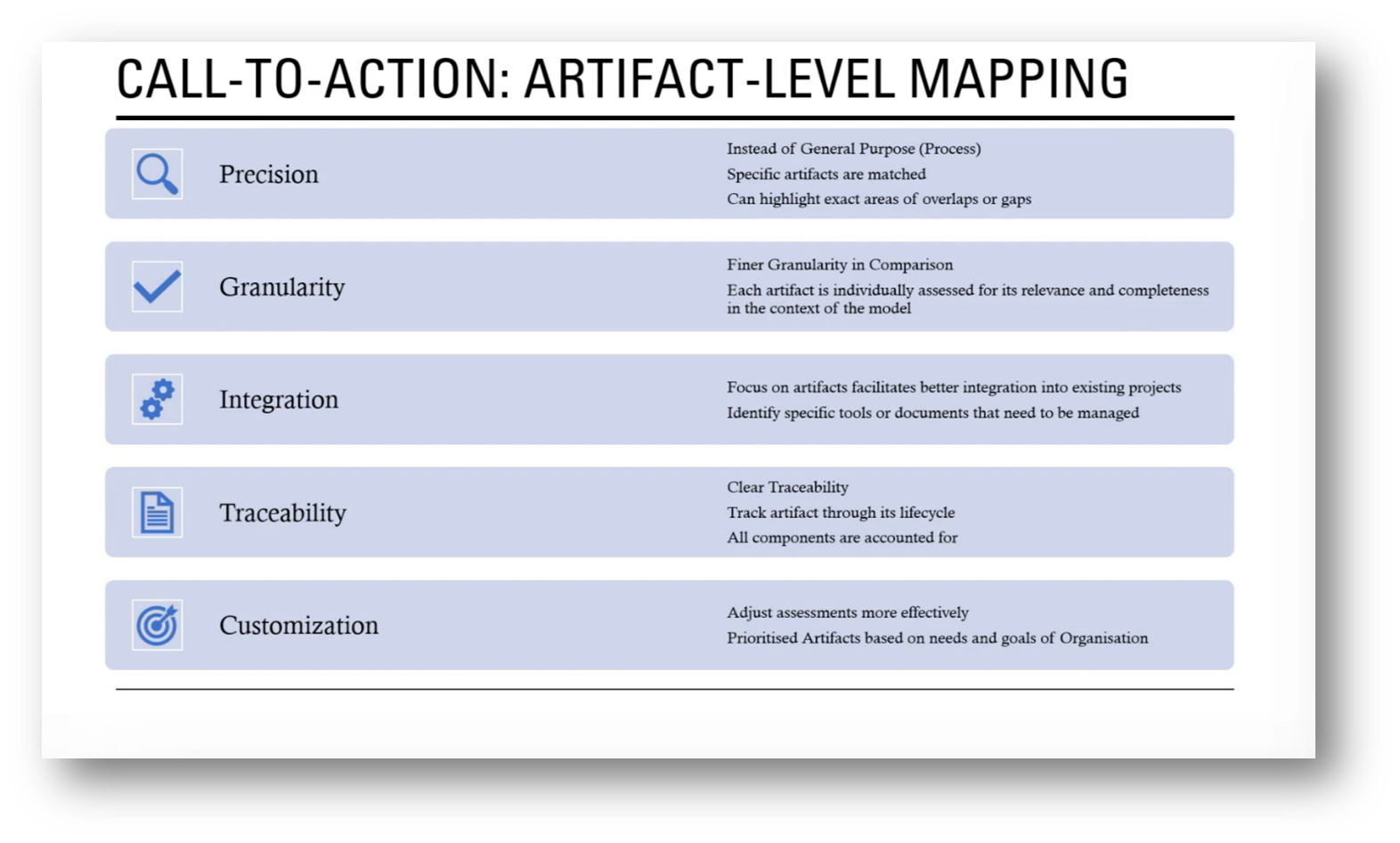

In applying a similar method to DCAM, it is crucial to examine the hierarchy beyond the top level, focusing on artifacts as demonstrable evidence of compliance. Evaluating questions and advice related to each artifact is essential for ensuring thorough understanding and adherence to standards, ultimately linking everything to the final deliverable.

Figure 31 DMBoK Knowledge Area & NDMO Domain Framework Mapping

Figure 32 NDMO & DMBoK Mapping

Figure 33 NDMO & DMBoK Mapping Pt.2

Figure 34 Call-To-Action

Data Management and Strategy in Project Management

Howard then focuses on the integration of data frameworks, specifically the relationship between DCAM and the DMBoK. He emphasises the importance of maintaining evidence throughout the project lifecycle, including the creation of strategies, use cases, and data quality assessments, to ensure accountability and visibility. Other key points include establishing clear granularity in data strategies, tailoring artifacts to specific project needs, and conducting timely assessments so that information does not become outdated. Ultimately, the goal is to illustrate how these frameworks complement each other, highlighting their distinct levels and the value of effective metadata management in driving data-related initiatives.

The importance of effectively communicating the value of data management practices and capabilities to stakeholders is emphasised, including those with established data experience who may question their necessity. Howard stresses the need for concrete examples and use cases to persuade the audience of the significance of data quality, metadata, and other deliverables, particularly during data maturity assessments.

Howard underlines the role of industry best practices, like those outlined in DCAM, in mitigating risks and ensuring that all necessary controls are in place. Ultimately, it is crucial to link each data management capability back to the organisational value it provides, ensuring that the justifications for these practices are clearly articulated and understood by business architects and decision-makers.

Navigating Business Risks and Project Prioritisation

In management, particularly across business units, an ongoing challenge is dealing with managers who are under pressure from budget constraints, project deadlines, and resource limitations. Projects are easily deprioritised by these managers, which affects the allocation of resources and attention to critical initiatives.

To navigate this, it is essential to attach efforts to significant risks rather than transient projects, as risks tend to be more permanent and compelling. Securing a high-level endorsement, such as the CSSA mandate from the CEO, can bolster the priority of these initiatives, eliminating objections to their importance. Therefore, staying informed about business dynamics and maintaining visibility in the organisation are crucial to ensure that vital risks remain prioritised.

Data Management and Risk Management Strategies

Howard emphasises the importance of understanding data governance maturity scores, highlighting that a score like 1.2 is meaningless without context regarding its business impact and associated risks. He underscores the necessity of a risk framework to illustrate how inadequate data management can jeopardise organisational stability and value delivery. Advocates argue that data management should be recognised as a critical business capability, especially in the absence of robust business architecture, which complicates the challenge further. Howard also points out the importance of being equipped with knowledge about the risks of inaction, as well as leveraging insights from internal audits and governance, risk, and compliance (GRC) practices to strengthen data governance strategies.

To effectively connect business needs with risk management, it is crucial to demonstrate how data directly affects value propositions, such as cross-selling capabilities. By engaging closely with the risk manager, one can highlight which risks on the register rely on data, particularly where they relate to tangible business issues such as potential financial losses from legal matters. Adding a column to the risk register that identifies the data-related problem behind each risk makes these challenges easier to address. This approach aligns with managing risks through established controls and mitigation strategies, which are essential in a risk-focused work environment.
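The added risk-register column can be illustrated with a small data structure. This is a sketch under stated assumptions: the `Risk` fields, the 1-to-5 scales and the likelihood-times-impact exposure score are hypothetical conventions chosen for illustration, and the example risks only loosely echo the legal-loss and cross-selling examples above.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    risk_id: str
    description: str
    likelihood: int       # hypothetical 1-5 scale
    impact: int           # hypothetical 1-5 scale
    data_issue: str = ""  # the added column; empty if the risk does not rely on data

    @property
    def exposure(self) -> int:
        # Common likelihood x impact convention; an organisation's own
        # risk framework may score exposure differently.
        return self.likelihood * self.impact


def data_dependent_risks(register: list[Risk]) -> list[Risk]:
    """Risks with a data issue recorded, highest exposure first."""
    return sorted((r for r in register if r.data_issue),
                  key=lambda r: r.exposure, reverse=True)


register = [
    Risk("R1", "Litigation from incorrect client statements", 3, 5,
         "Inconsistent client master data"),
    Risk("R2", "Office relocation delay", 2, 2),
    Risk("R3", "Missed cross-selling targets", 4, 3,
         "No single view of the customer"),
]
for r in data_dependent_risks(register):
    print(r.risk_id, r.exposure, r.data_issue)
```

Sorting the data-dependent risks by exposure gives an ordered list that can be taken to executives, which is the visibility the risk-based approach is aiming for.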

The management of data risk has historically been integrated into broader risk categories such as operational, financial, strategic, compliance, reputational, and environmental risks, often leading to its underappreciation. However, the importance of effectively articulating data risks is increasingly recognised, particularly regarding cyber threats and ethical data usage, as seen with regulations like GDPR. Engaging with risk officers has clarified the language of data risk management, which is crucial for achieving business alignment and support.

Successful data value realisation relies on the ability to quantify risks and their mitigations effectively. In practice, identifying changes reliant on data and incidents that must be prevented can help highlight critical risks to executives, thus elevating visibility and securing more opportunities for discussion and influence within the organisation. Additionally, participation in groups like the EDM Council can provide valuable insights and frameworks for managing data risks in a banking context.

Figure 35 Risk Categories

If you would like to join the discussion, please visit our community platform, the Data Professional Expedition.

Additionally, if you would like to be a guest speaker on a future webinar, kindly contact Debbie (social@modelwaresystems.com)

Don’t forget to join our exciting LinkedIn and Meetup data communities so you don’t miss out!