Data-Value Driven - Reference Architecture for Data Managers

Executive Summary

This webinar outlines the essential aspects of developing a Data-Value Driven Reference Architecture, emphasising the concept of P3T in technology management and the significance of Reference Architectures in facilitating effective Data Management and integration. Howard Diesel addresses the key challenges encountered in implementing Data Management architectures, highlighting the critical role of Data Managers in this process. Furthermore, the webinar examines the evolution and structure of Reference Architectures, as well as the principles that govern their design and implementation. It underscores the importance of vendor assessment and interoperability, as well as the implementation of robust Data Management strategies. Lastly, Howard shares how organisations can navigate the complexities of architectural principles, including those pertinent to Big Data, thereby enhancing the overall effectiveness of their enterprise architecture.

Webinar Details

Title: Data-Value Driven - Reference Architecture for Data Managers

URL: https://youtu.be/QIGoJBWSVl4

Date: 2022/11/03

Presenter: Howard Diesel

Meetup Group: African Data Management Community Forum

Write-up Author: Howard Diesel

Contents

Concept and Challenges of Building a Data-Value Driven Reference Architecture

Concept of P3T in Technology Management

Understanding the Concept of Reference Architectures

Data Management and Integration Architectures

Challenges and Approaches in Implementing Data Management Architecture

The Structure of a Reference Architecture

Understanding the Challenges in Data Management Architecture

The Evolution of Reference Architectures

Understanding the Creation of Reference Architectures

Understanding the Structure and Functioning of Reference Architecture

Understanding the Process of Building and Implementing Reference Architectures

The Role of Data Managers in Reference Architecture

Understanding the Principles of Reference Architectures

The Importance of Data Management in Enterprise Architecture

Vendor Assessment and Interoperability in Data Management

Implementing Data Management Strategies

Data Management and Vendor Assessment

Architectural Principles and Their Implications

Big Data Architecture Principles and Data Management

Concept and Challenges of Building a Data-Value Driven Reference Architecture

Howard Diesel opened the webinar and shared that the presentation would be on Reference Data Architecture. He highlighted that constructing a comprehensive Reference Architecture for Data Management encompasses various technologies and knowledge areas, not only Big Data. Howard also emphasised the importance of involving data architects and the architecture team in this process, and mentioned his own experience of building a Reference Architecture, which took about six months and proved to be a significant learning opportunity.

Figure 1 "Building a Reference Architecture"

Concept of P3T in Technology Management

The beginning of Howard’s presentation centred on the term "P3T", which stands for Principles, Policies, Procedures, and the Reference Architecture for Technologies. Howard noted that an explanation of the acronym was needed, particularly after a recent inquiry. An attendee added that they had previously spent a month discussing these principles and their operationalisation, including how to integrate them into Business As Usual (BAU) and ensure compliance. The focus then shifted to understanding and constructing the Reference Architecture that supports these technologies, as outlined in the Data Management Body of Knowledge (DMBoK).

Understanding the Concept of Reference Architectures

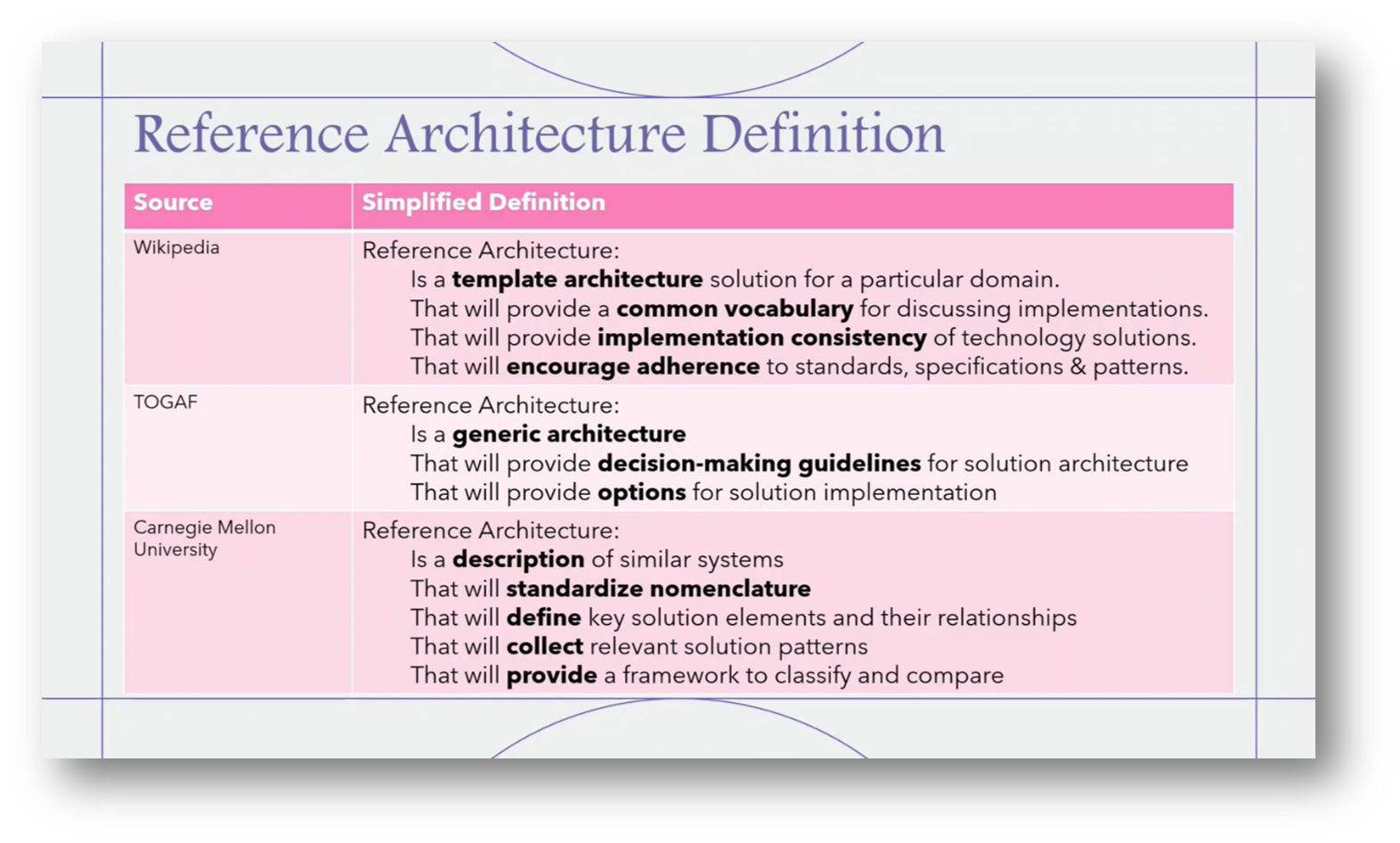

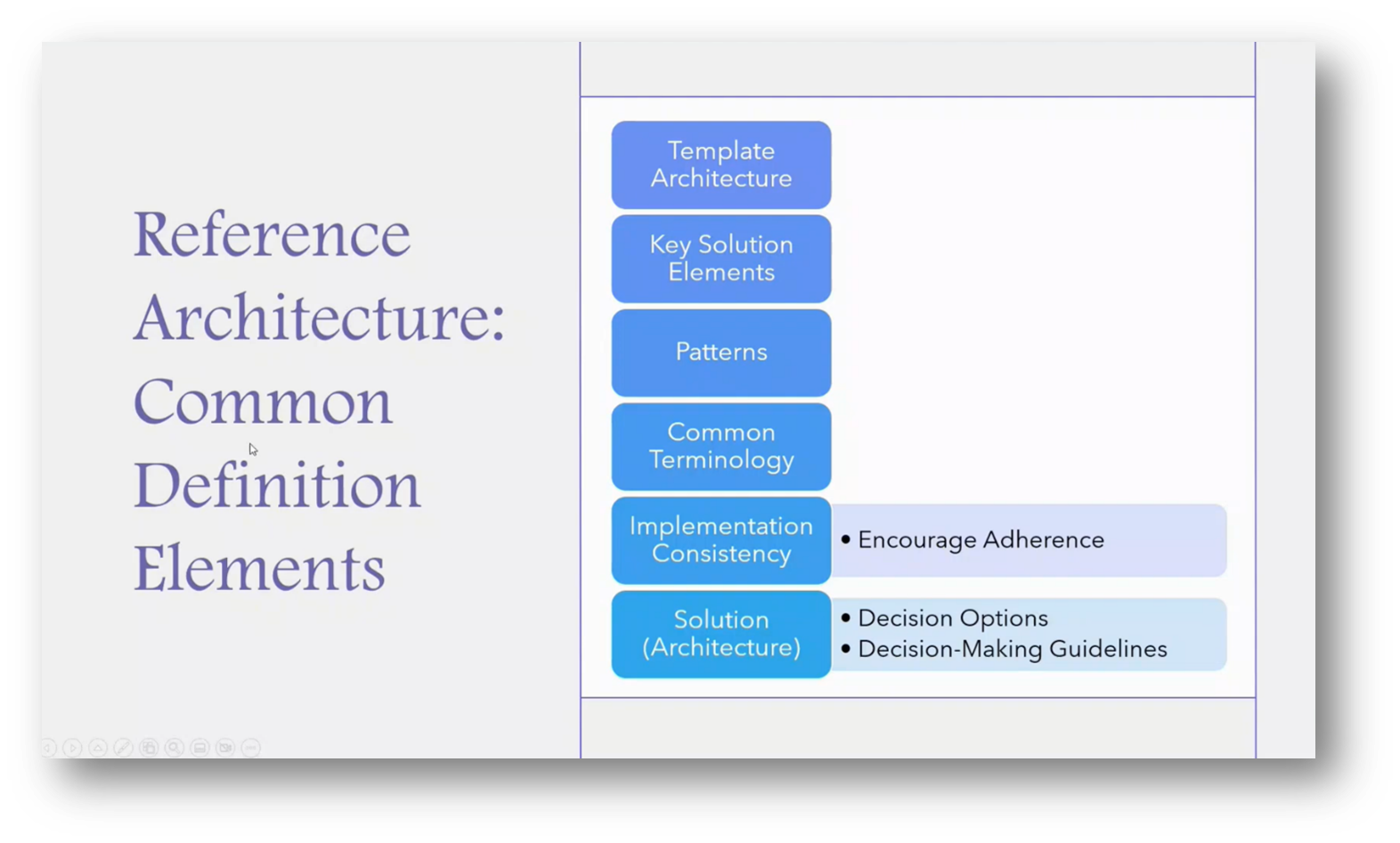

A Reference Architecture is a template solution architecture for a specific domain, in this case Data Management, that provides a common vocabulary to ensure consistency of implementation. It encompasses various elements, including industry data models and key solution components.

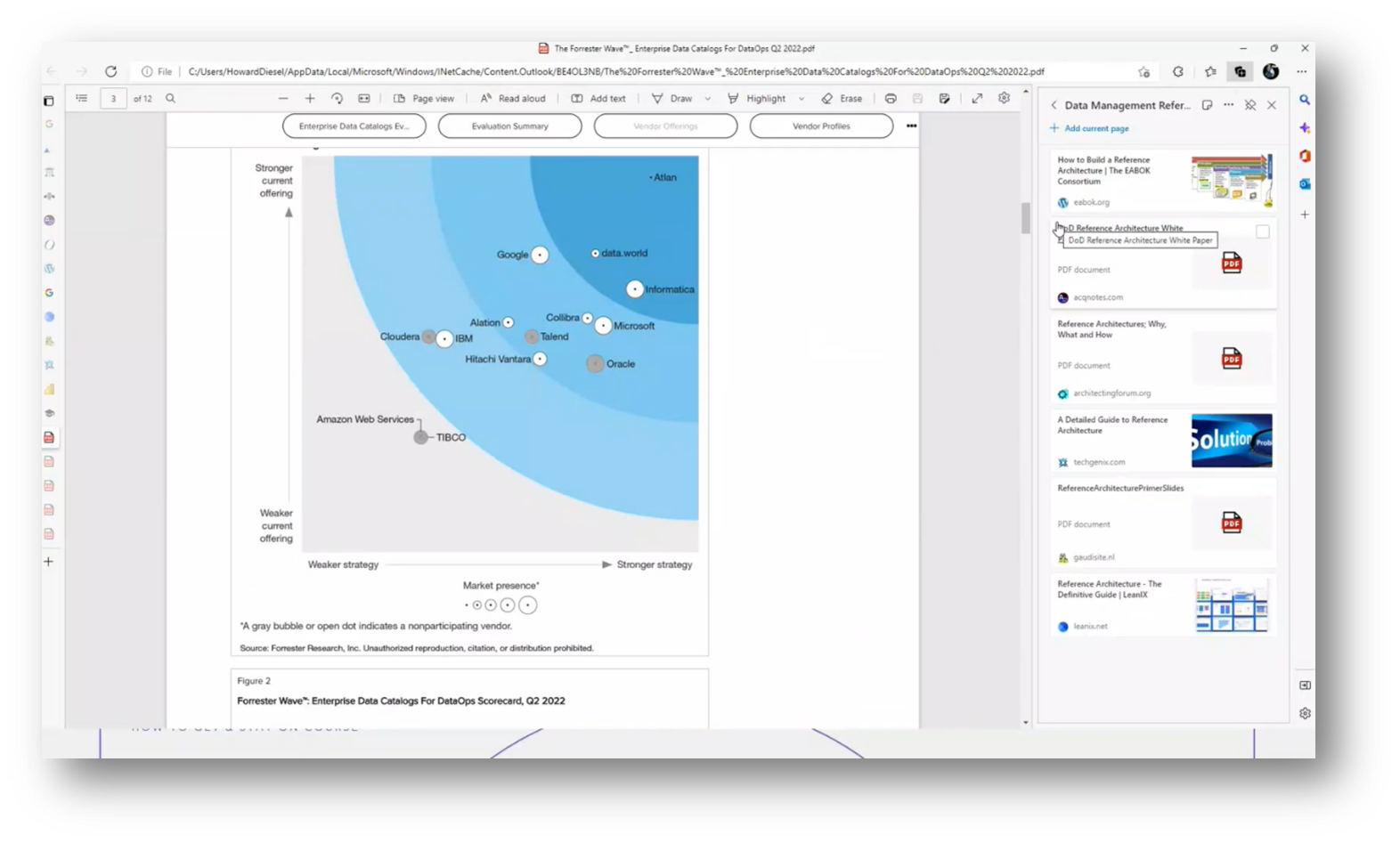

Organisations often face the dilemma of whether to choose a single vendor for an integrated solution, like Informatica, which offers a comprehensive view across all Data Management aspects, or to adopt a best-of-breed approach by selecting individual solutions across different domains and integrating them. This balance between integrated and specialised solutions is crucial for effective Data Management.

Figure 2 Building a Reference Data Architecture Deck

Figure 3 "Essential Concepts"

Figure 4 "What is a Reference Architecture?"

Figure 5 "Reference Architecture Definition"

Data Management and Integration Architectures

Adhering to architectural standards for decision-making is important in solution architecture, as advocated by the TOGAF framework. Howard also stressed the need for a consistent, automated approach to creating the necessary artefacts, avoiding cumbersome manual processes in Excel. The aim is to provide a template architecture that ensures uniformity when engaging with different vendors, in line with overall Data Management goals.

Carnegie Mellon’s perspective highlights the standardisation of terminology, the definition of key solution elements and their relationships, and the integration of Data Governance and quality within a Data Integration Reference Architecture. Ultimately, it aims to establish a framework for classifying and comparing vendor solutions, spanning from Data Management concepts to technology architectures, thereby facilitating better decision-making and integration.

Challenges and Approaches in Implementing Data Management Architecture

Howard described a project in which he developed a Data Management architecture for the central bank within a six-month timeframe. A key oversight on his part was initially attempting to create this architecture without fully understanding the existing policies and procedures, which forced a return to the drawing board. However, the resulting architecture serves as a template that incorporates essential solution elements, patterns from the DMBoK, and a common vocabulary to ensure consistency in implementation and Metadata sharing across different technologies. Howard added that, while it does not prescribe specific products such as Informatica, it provides guidelines for making informed decisions within constraints such as budget and organisational maturity.

Figure 6 Common Definition Elements

The Structure of a Reference Architecture

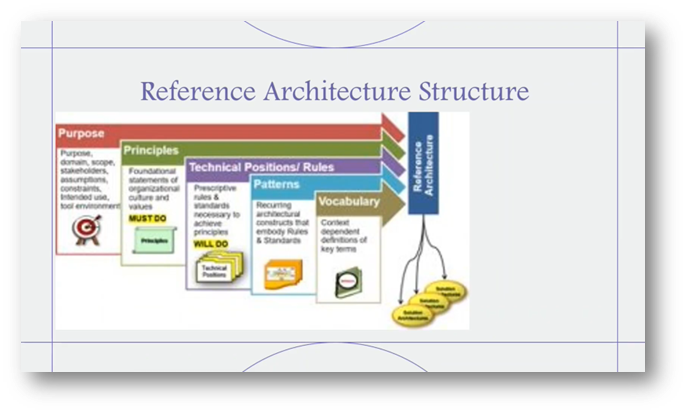

A Reference Architecture serves several critical purposes, including defining the scope, stakeholders, and guiding principles essential for creating Master Data solutions within an organisation. It acts as a framework that incorporates technical rules and patterns while allowing for continuous feedback.

This architecture facilitates the implementation of patterns such as hub-and-spoke or centralised repositories, ensuring that departments procure solutions in line with its guidelines. By adhering to the Reference Architecture, organisations can integrate and collaborate across departments or entities, maintaining consistency and interoperability in their Data Management practices.

Figure 7 "Why do we need a Reference Architecture?"

Figure 8 "Reference Architecture Structure"

Understanding the Challenges in Data Management Architecture

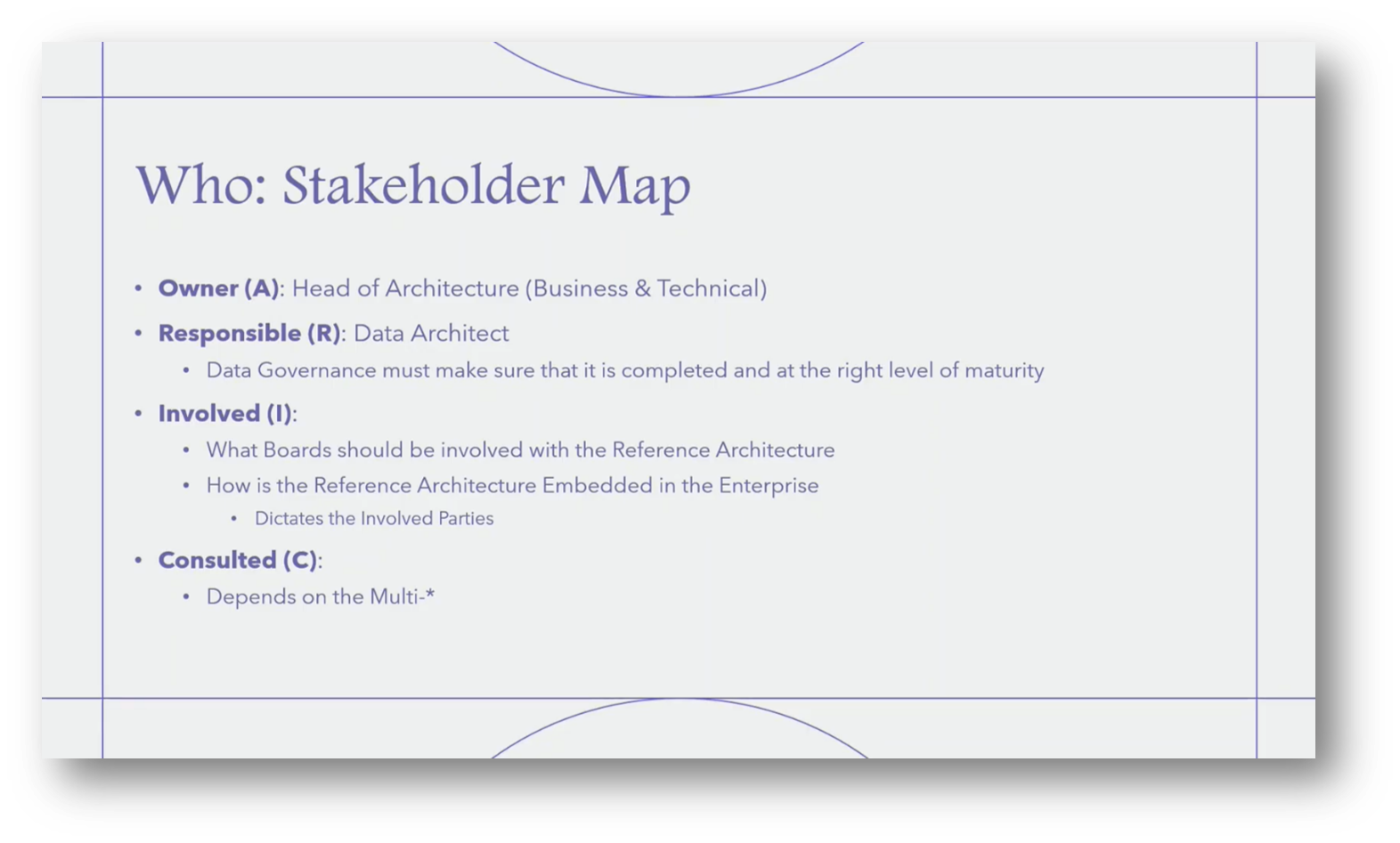

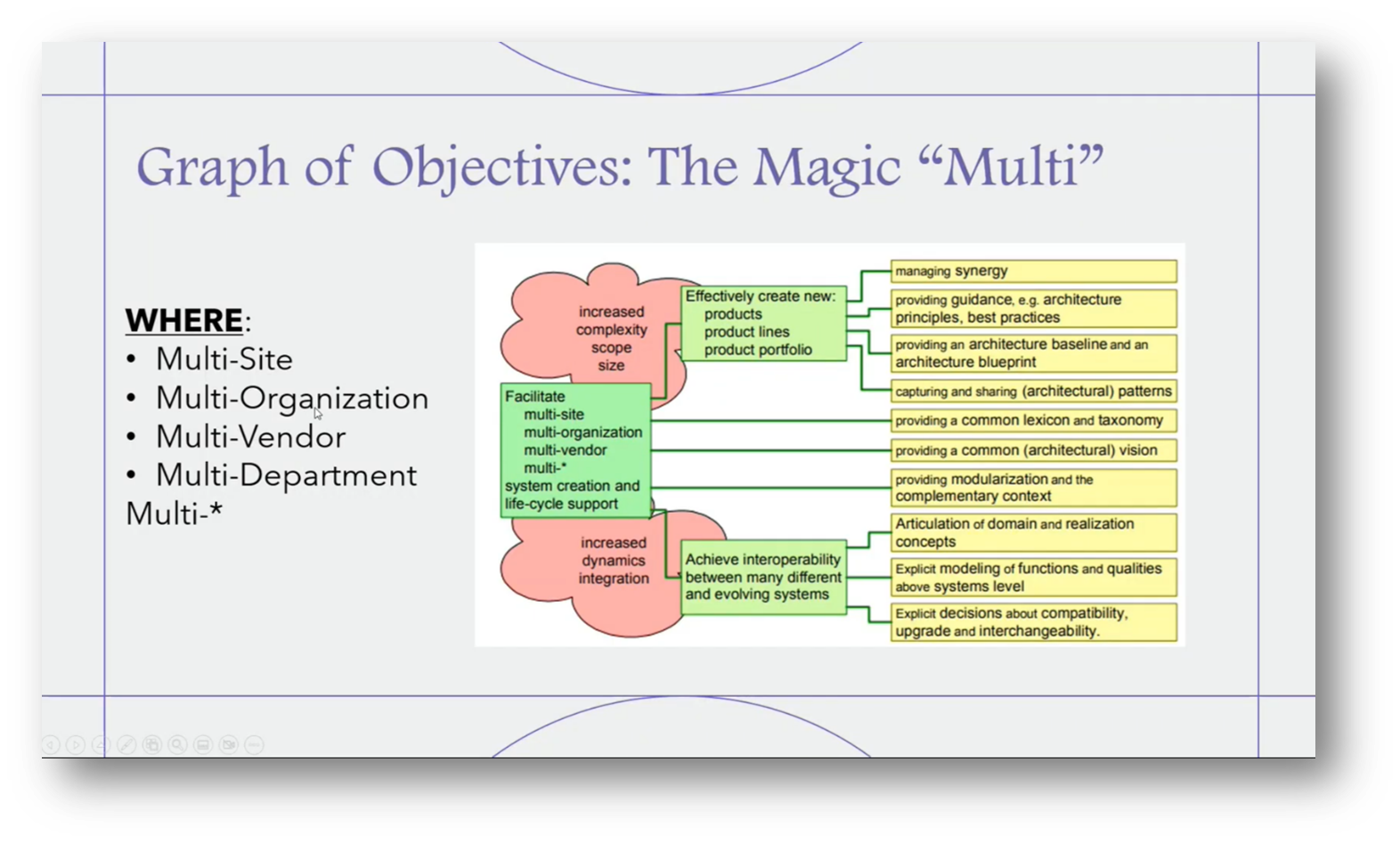

The responsibility for building the Reference Architecture for Data Management typically falls to the head of architecture, with the data architect playing a crucial role in its development and implementation. This process involves collaboration between Data Governance, Enterprise Information Management (EIM), and architecture to ensure the architecture meets necessary maturity levels and addresses concerns across various Data Management practices. Additionally, it is essential to present the architecture to an architecture board, if available, and understand how the Reference Architecture will be integrated into the enterprise structure, which may include multiple sites, organisations, vendors, or departments. The organisational setup will dictate the stakeholders that need to be involved in the development process.

Figure 9 "Who is Responsible for Building the Reference Architecture?"

Figure 10 Stakeholder Map

Figure 11 Graph of Objectives

The Evolution of Reference Architectures

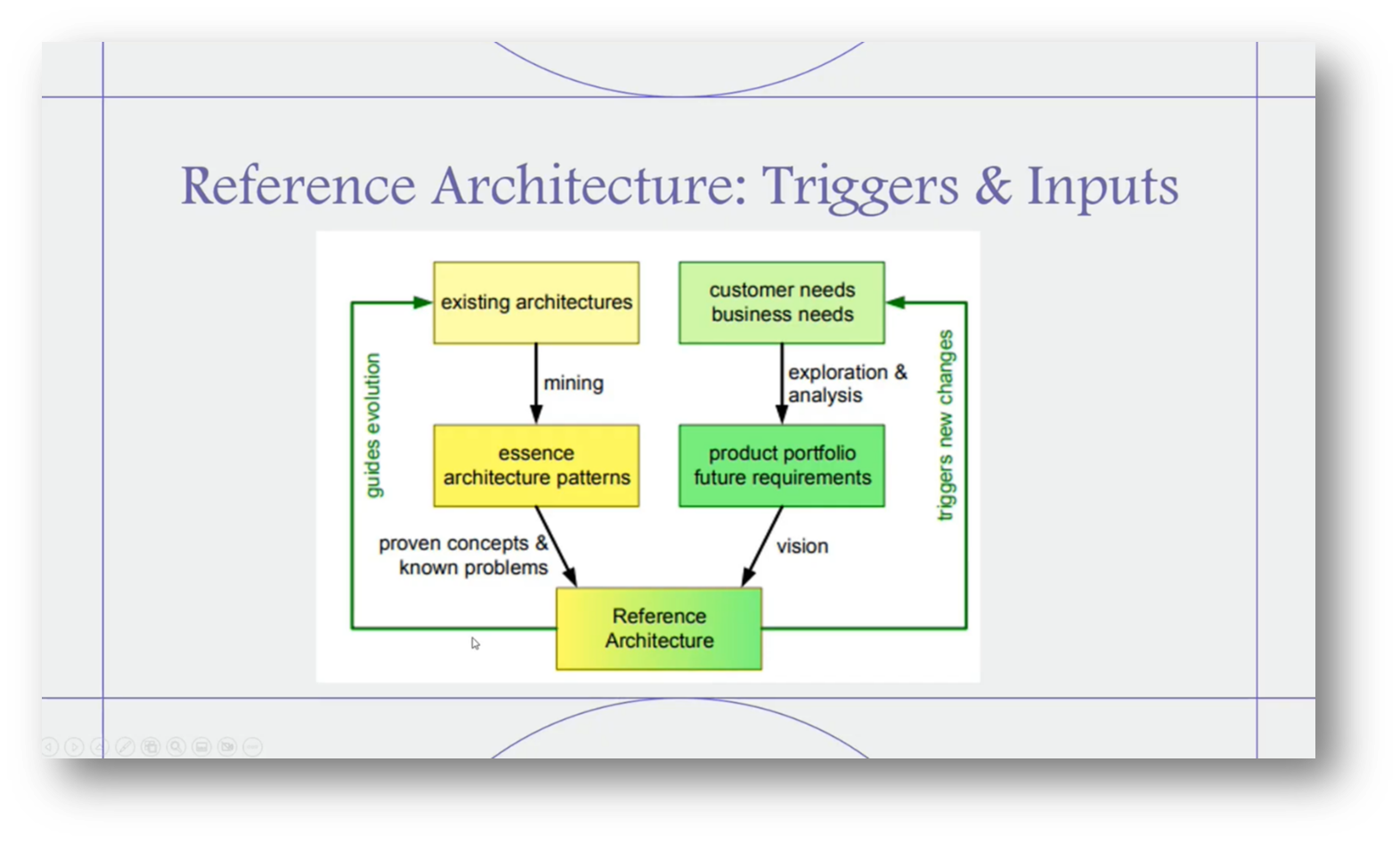

A Reference Architecture is a dynamic framework that evolves in response to changing business needs and capabilities. Initially, it is developed by understanding customer and business requirements, which guide the creation of the Reference Architecture. However, it is not a one-time task; as new use cases or business capabilities emerge, the architecture must be redefined and updated. Additionally, it serves as a guide for modifying existing architectures by identifying successful patterns and solutions that can be integrated back into the Reference Architecture, ensuring it remains relevant and effective over time.

The ongoing evolution of Reference Architectures necessitates continuous enhancement to accommodate new approaches and environmental changes, particularly as organisations transition to cloud-based scenarios. This raises critical questions about the lifespan of existing architectures, such as whether the emergence of Big Data renders traditional architectures obsolete, and what criteria determine when elements of these architectures become outdated.

This shift, particularly from on-premise to hybrid cloud solutions, has significant implications for how innovations are integrated. Challenges faced during maturity assessments often reveal that existing technologies and implementations may hinder progress. Additionally, disruptive technologies, such as distributed ledger systems, pose potential threats to traditional Reference Architectures, underscoring the need for adaptive and forward-thinking design approaches.

When changing a Reference Architecture, it is essential to assess the impact on existing solutions, as some may become invalid or non-compliant with the new architecture. This creates the need to consider sunsetting outdated technologies and to develop a clear plan for migrating to new approaches. A review process, as highlighted by LeanIX, involves continuously evaluating current technologies for compliance with the Reference Architecture and addressing non-compliant solutions. This framework is particularly critical in complex environments with multiple sites, regions, organisations, and vendors, as exemplified by Informatica's challenges in managing such scenarios.
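The compliance review described above can be illustrated with a small sketch. It assumes, purely for illustration, that the Reference Architecture lists approved patterns per knowledge area and that each solution in the inventory records the pattern it uses; the pattern names, solution names and the `review` helper are hypothetical and not taken from LeanIX or any other tool.

```python
from dataclasses import dataclass

# Hypothetical approved patterns per knowledge area; a real Reference
# Architecture would supply its own list.
APPROVED_PATTERNS = {
    "Data Integration": {"hub-and-spoke", "publish-subscribe"},
    "Master Data": {"registry", "centralised hub"},
}


@dataclass
class Solution:
    name: str
    knowledge_area: str
    pattern: str


def review(inventory, approved):
    """Split an inventory into compliant solutions and sunsetting candidates."""
    compliant, sunset = [], []
    for solution in inventory:
        allowed = approved.get(solution.knowledge_area, set())
        target = compliant if solution.pattern in allowed else sunset
        target.append(solution.name)
    return compliant, sunset


# Illustrative inventory (invented names).
inventory = [
    Solution("Customer MDM", "Master Data", "registry"),
    Solution("Legacy ETL", "Data Integration", "point-to-point"),
]
compliant, sunset = review(inventory, APPROVED_PATTERNS)
print("Compliant:", compliant)
print("Plan migration / sunset:", sunset)
```

Rerunning such a check whenever the Reference Architecture changes is one way to surface the solutions that need a migration plan.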

To effectively build a Reference Architecture, it is essential to avoid relying solely on one technology provider, as this can limit understanding and innovation. Organisations often have multiple departments that lead specific knowledge areas, such as Metadata and data modelling, which should be integrated into a cohesive framework.

Attention must be given to the various lifecycle elements involved in these processes. Developing a Reference Architecture is particularly crucial for organisations, like merchants, that are acquiring new databases and technologies; establishing governance around this architecture will ensure it is implemented efficiently and effectively.

Figure 12 "Why do we need a Reference Architecture?"

Figure 13 Reference Architecture

Figure 14 When: Questions

Figure 15 "Where do we need a Reference Architecture?"

Figure 16 "How do we Build a Reference Architecture"

Understanding the Creation of Reference Architectures

A Reference Architecture is developed by capturing the essential elements of existing architectures, which is crucial for understanding various architectural designs. It integrates concepts from the DMBoK, which covers the essential knowledge areas, with the possible exception of data modelling. Different operating models, such as Centralised, Decentralised, and Hybrid approaches, can be identified to guide the formation of the architecture.

Looking to the future, emerging domains such as smart cities and Industry 4.0 are reshaping architectural considerations. This evolution demands an awareness of patterns and of changes in business models and market segments, all of which play a significant role in defining and refining the Reference Architecture.

Figure 17 "Short Summary of How"

Understanding the Structure and Functioning of Reference Architecture

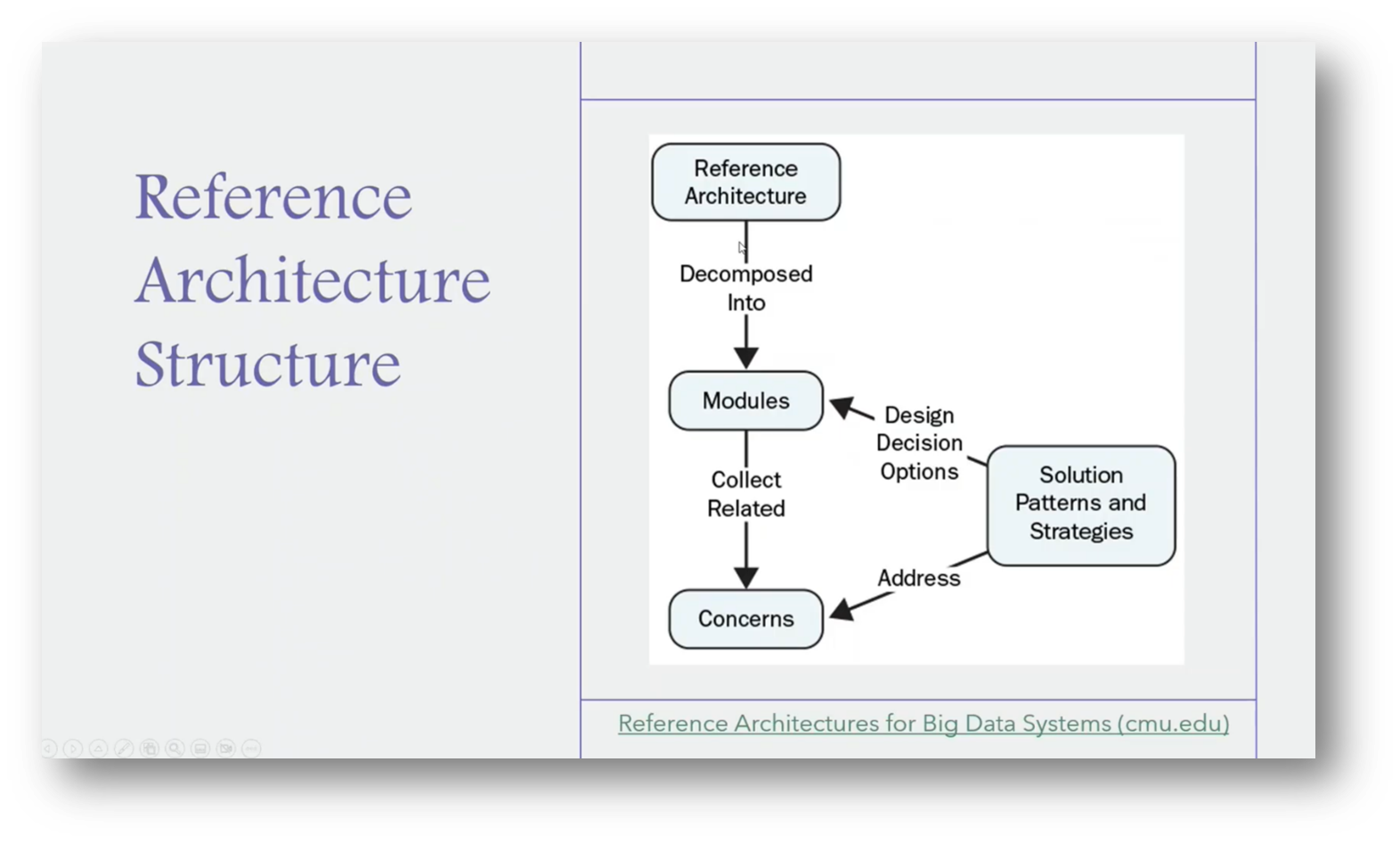

The Reference Architecture for Data Management is structured into modules that represent various knowledge areas, focused on collecting concerns derived from principles, policies, and procedures (P3). This architecture outlines the necessary deliverables and processes for effective Data Management while seeking technology solutions that align with these requirements.

An example is the discussion of Big Data and Business Intelligence and Analytics (BIA), which covers various types of analytics, such as descriptive, prescriptive, and cognitive. When vendors respond to a request for information (RFI), they must address these outlined concerns and clarify the architectural patterns used within their products. This structured approach ensures that the selected technology meets the organisation's needs.
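A simple way to check whether an RFI response addresses every outlined concern is sketched below. The module-to-concern mapping, the shape of the vendor response (one free-text answer per concern) and the `unaddressed` helper are assumptions made for illustration only.

```python
# Hypothetical module -> concerns mapping, loosely based on the BIA example.
CONCERNS = {
    "Big Data and BIA": [
        "descriptive analytics",
        "prescriptive analytics",
        "cognitive analytics",
    ],
}


def unaddressed(concerns, response):
    """Return, per module, the concerns a vendor's RFI response leaves blank."""
    gaps = {}
    for module, items in concerns.items():
        answers = response.get(module, {})
        missing = [c for c in items if not answers.get(c, "").strip()]
        if missing:
            gaps[module] = missing
    return gaps


# Invented vendor response: each answer should name the pattern used.
response = {
    "Big Data and BIA": {
        "descriptive analytics": "Dimensional model served to BI tools",
        "prescriptive analytics": "",
    }
}
gaps = unaddressed(CONCERNS, response)
print(gaps)
```

Any module that appears in the result points to a concern the vendor still needs to clarify before scoring.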

Figure 18 Reference Architecture Structure

Understanding the Process of Building and Implementing Reference Architectures

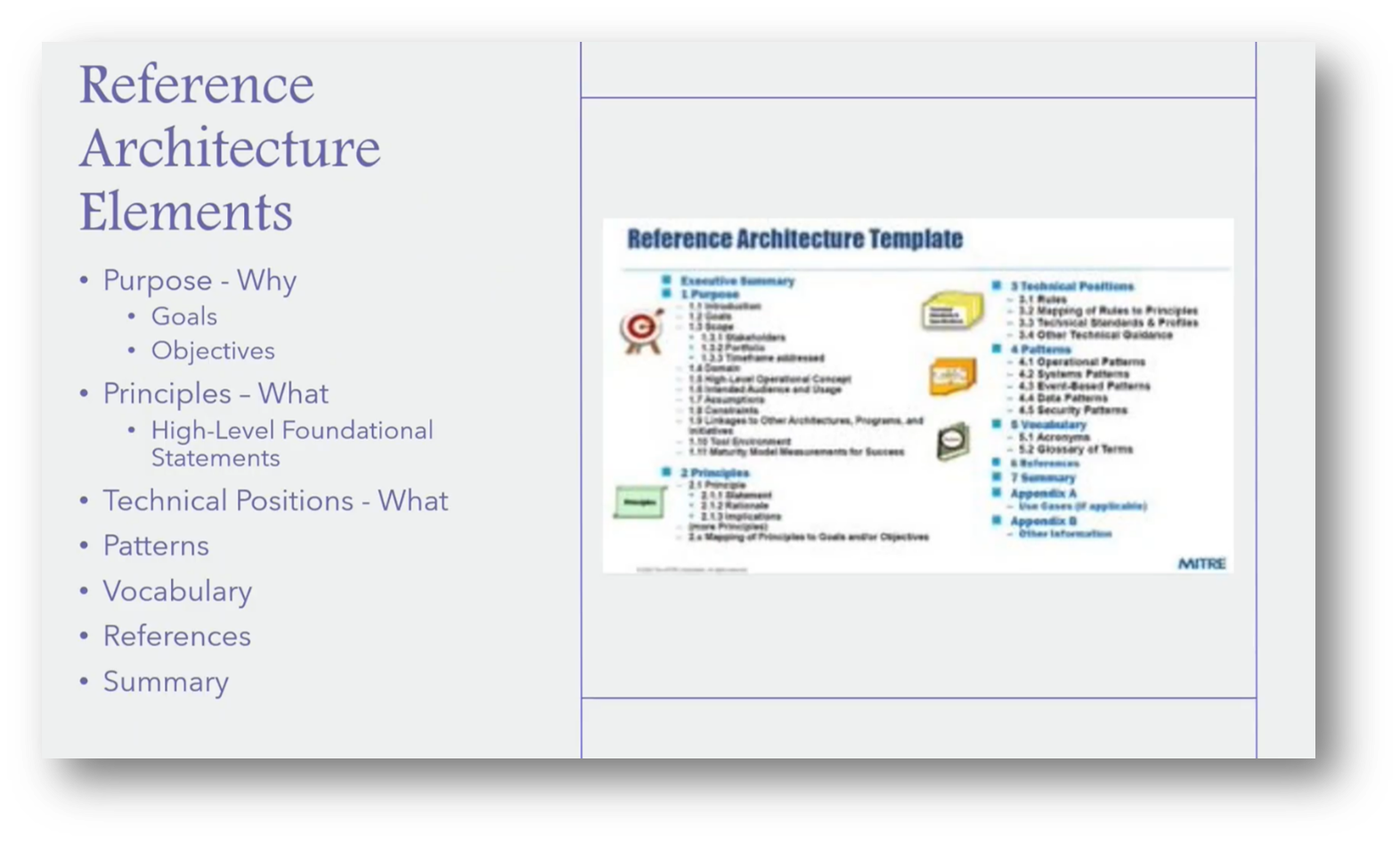

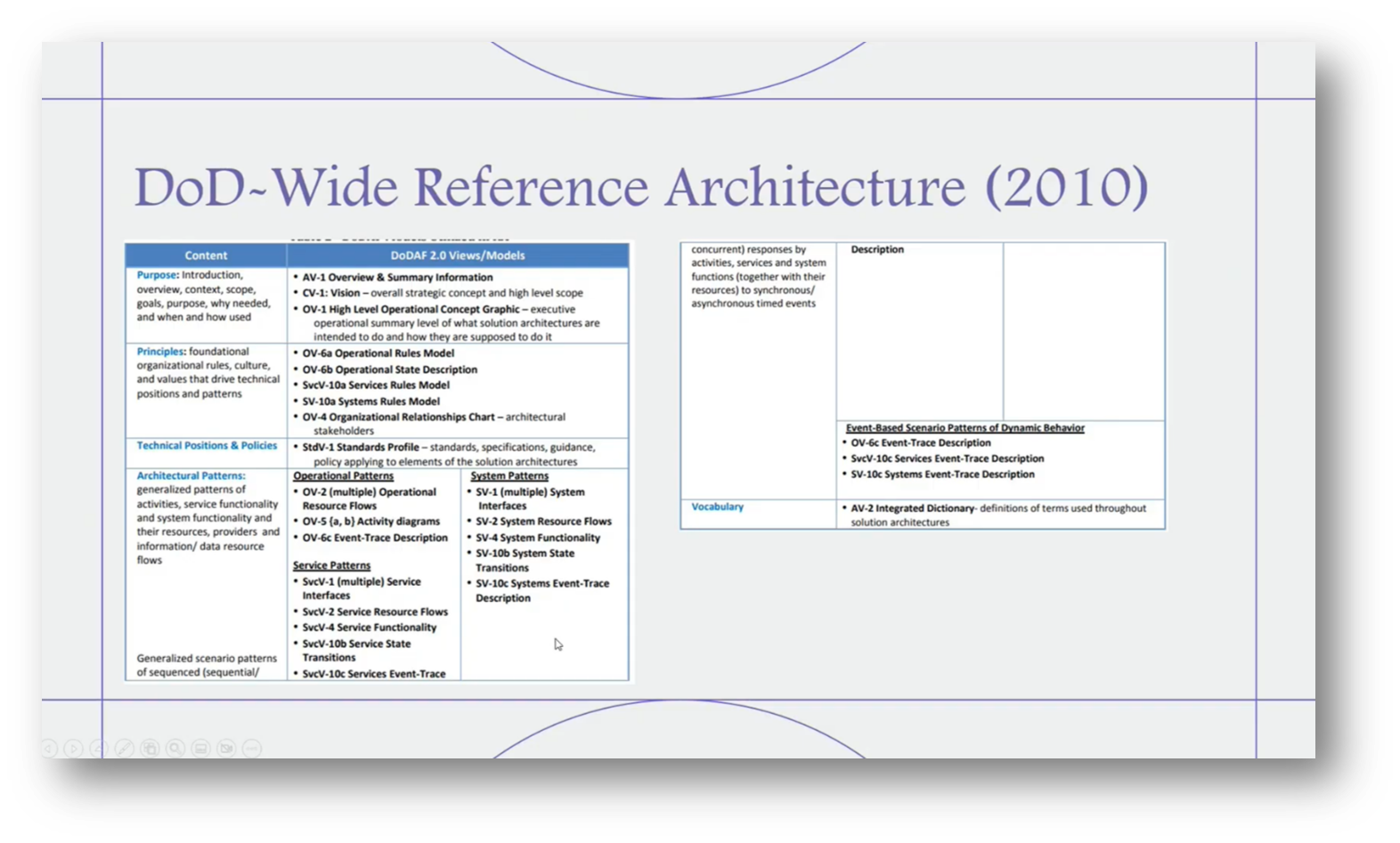

When creating a Reference Architecture document or template, it's essential to define its purpose, objectives, and the principles that guide the framework, categorised by specific knowledge areas. This includes outlining technology positions to clarify the intended functionality and establishing a standardised vocabulary relevant to the domain, such as the processes of ingestion, processing, and analysis in Big Data.

The structure should encompass purpose statements, guiding principles, technology positions, and policies, drawing on examples such as the reference architecture developed by the US Department of Defense (DoD) in 2010. By organising these elements, including architectural and scenario patterns, the document can serve as a comprehensive guide for building a robust Reference Architecture.
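The template elements listed above can be captured as a simple data structure, which makes it easy to see which sections are still empty. This is a hypothetical sketch: the class and field names mirror the sections named in the text and do not follow the DoD document's own schema.

```python
from dataclasses import dataclass, field


@dataclass
class ReferenceArchitecture:
    """Hypothetical template mirroring the document sections described above."""
    purpose: str = ""
    principles: dict = field(default_factory=dict)  # knowledge area -> principles
    technology_positions: dict = field(default_factory=dict)
    policies: list = field(default_factory=list)
    vocabulary: dict = field(default_factory=dict)  # term -> definition
    architectural_patterns: list = field(default_factory=list)
    scenario_patterns: list = field(default_factory=list)

    def missing_sections(self):
        """List the sections that are still empty, in template order."""
        return [name for name, value in vars(self).items() if not value]


ra = ReferenceArchitecture(
    purpose="Guide solution architects delivering Big Data capabilities",
    vocabulary={
        "ingestion": "Bringing data into the platform",
        "processing": "Transforming data for use",
        "analysis": "Deriving insight from processed data",
    },
)
print(ra.missing_sections())
```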

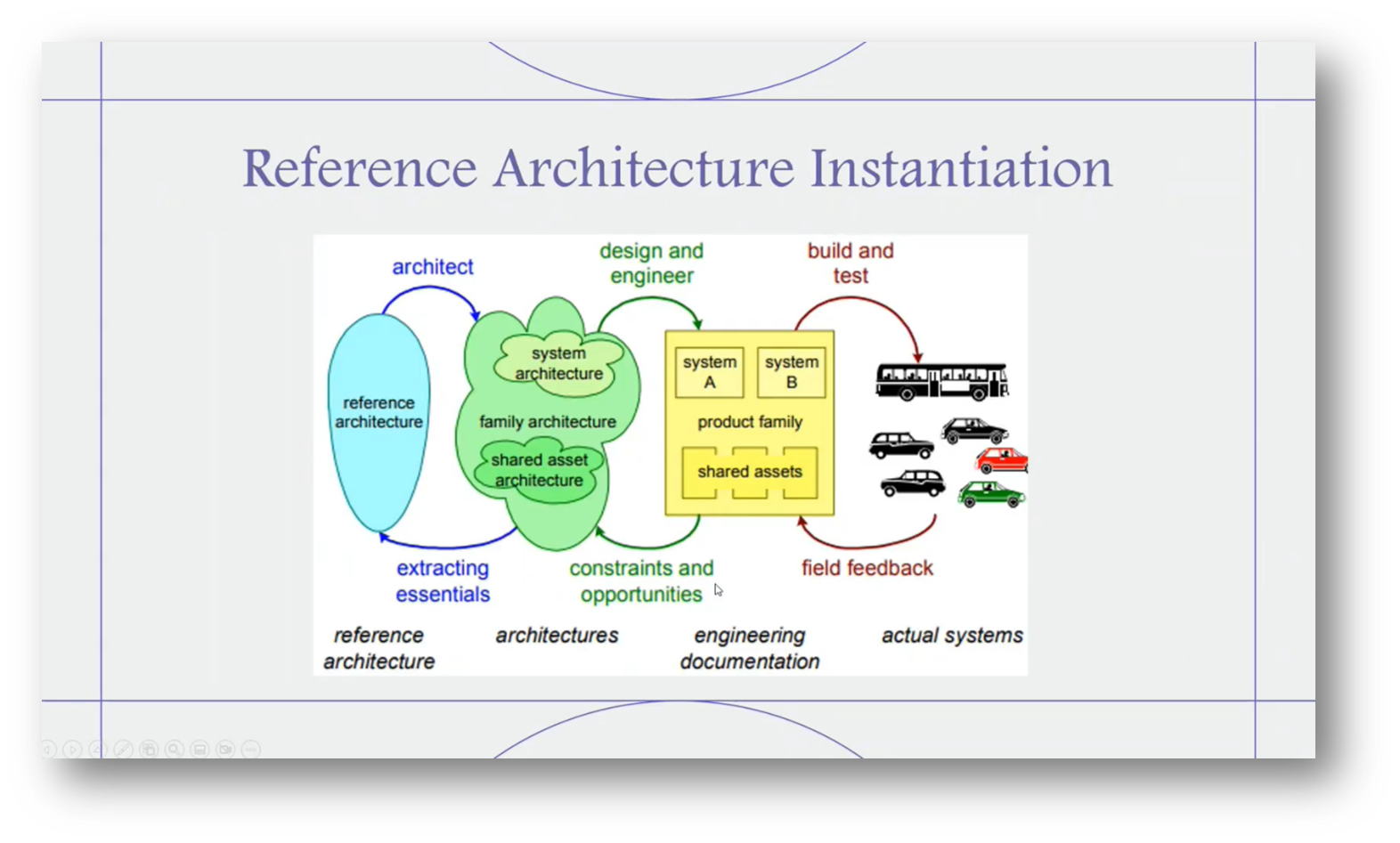

The Reference Architecture serves as a foundational framework for Data Management, distinct from business-specific reference models developed by organisations like IBM or Teradata. To effectively utilise this architecture, it is essential to create a variety of architectures tailored to different systems, integrating them into your design and engineering processes as you procure or develop new systems.

Throughout this process, it is essential to maintain a feedback loop that captures the constraints and opportunities that arise. For instance, while tools like IDEA or Collibra may offer certain cataloguing functionalities, they might not support all integration patterns, which illustrates the challenges that surface during implementation.

To effectively link Metadata, it's crucial to develop a comprehensive architecture that includes various data models, such as reference data models, enterprise data models, application data models, and physical implementation models. After building and testing this architecture, feedback from real-world applications creates a feedback loop for continuous improvement.
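To illustrate what linking Metadata across these model layers can mean, the sketch below traces a physical column back through the application and enterprise models to a reference model element. The link table and all element names are invented; in practice these links would live in a Metadata repository or catalogue.

```python
# Hypothetical Metadata links: each element points to the element it
# realises in the next, more abstract, model layer.
LINKS = {
    "physical:crm_db.cust.cust_nm": "application:CRM.Customer.name",
    "application:CRM.Customer.name": "enterprise:Party.PartyName",
    "enterprise:Party.PartyName": "reference:Party.Name",
}


def trace(element, links):
    """Follow links from a physical element up to the reference model."""
    path = [element]
    while path[-1] in links:
        nxt = links[path[-1]]
        if nxt in path:  # guard against badly maintained, circular links
            raise ValueError(f"cycle detected at {nxt}")
        path.append(nxt)
    return path


for step in trace("physical:crm_db.cust.cust_nm", LINKS):
    print(step)
```

A trace that stops before reaching the reference layer is one signal that a link is missing, which is the kind of finding the feedback loop is meant to capture.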

Assessing the maturity of the architecture is essential for understanding its lifecycle, as it indicates when updates are necessary to encourage compliance and conformance. Addressing instances where solution architects deviate from the Reference Architecture will highlight areas where support is needed to enhance overall effectiveness.

Figure 19 "Reference Architecture Elements"

Figure 20 DoD - Wide Reference Architecture (2010)

Figure 21 "Reference Architecture Instantiation"

Figure 22 How Mature is our Reference Architecture?

The Role of Data Managers in Reference Architecture

Before delving into the Data Manager's role, Howard addressed essential concepts related to Reference Architecture. He highlighted the definition of Reference Architecture as a generic template, emphasising that it does not specifically pertain to the architecture of the Master Data system.

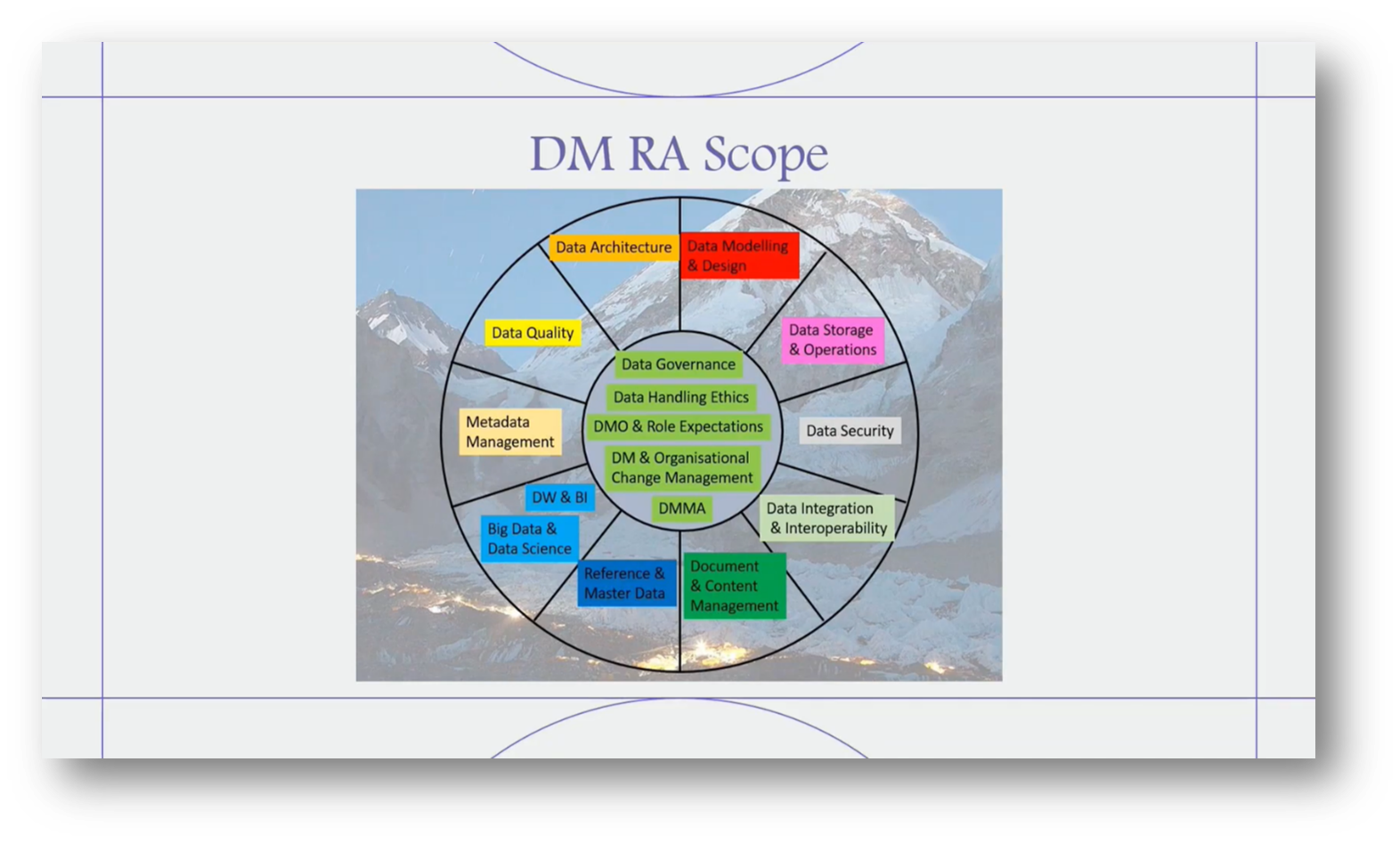

Howard outlined a template designed to meet the organisation's requirements for implementing a Master Data system. He shared the use of Reference Architecture to create a structured framework for various Data Management modules. Each Data Management module corresponded to specific knowledge areas such as Big Data, Master Data, Data Quality, and Data Integration.

This architecture will serve as a guideline for developing the organisation’s Data Management systems, ensuring consistency across different submissions, like those for NDMO. Overall, the Reference Architecture aims to streamline the implementation process in a multi-site environment, adapting to specific organisational conditions.

Howard then highlighted the concept of architecture in the context of various industries, particularly focusing on the emergence of Reference Architectures tailored to specific sectors, such as Industry 4.0 for manufacturing and frameworks for smart cities. These specialised architectures encompass essential business models relevant to each industry, emphasising the integration of data operations rather than solely hardware considerations.

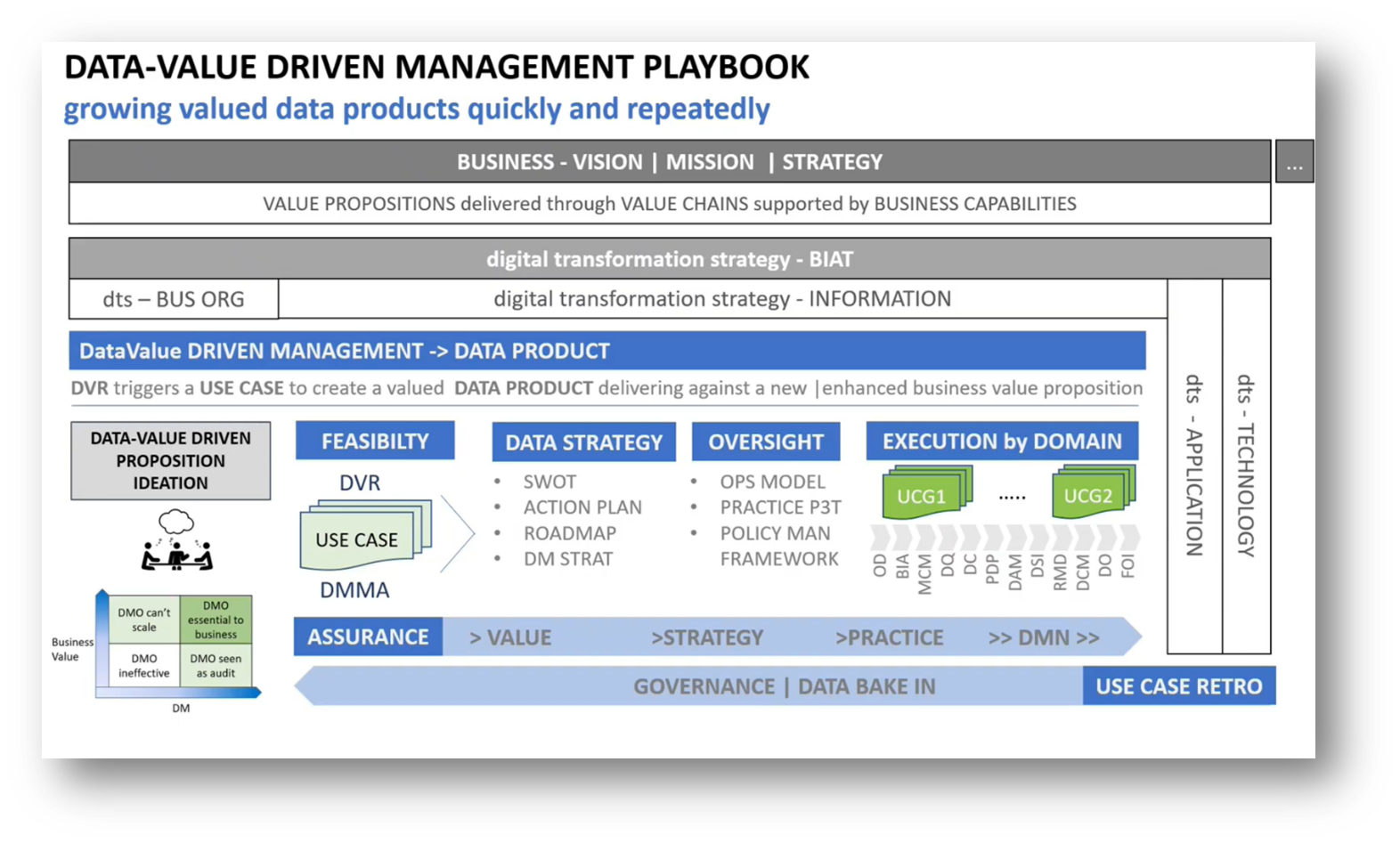

In the context of implementing Master Data Management through a D-VD (Data-Value Driven) approach, it is crucial to establish a Reference Data Architecture that outlines how the various components will interact. For instance, even a suite such as Informatica comprises several modules focused on governance, quality, and information management, and defining how such modules interact is all the more essential in multi-vendor environments.

This solution architecture serves as a blueprint for building and integrating systems effectively, whether through in-house development or external procurement. The discussion underlined the importance of understanding the significance and impact of these modules within the organisation before moving on to the role of the Data Manager.

Understanding the Principles of Reference Architectures

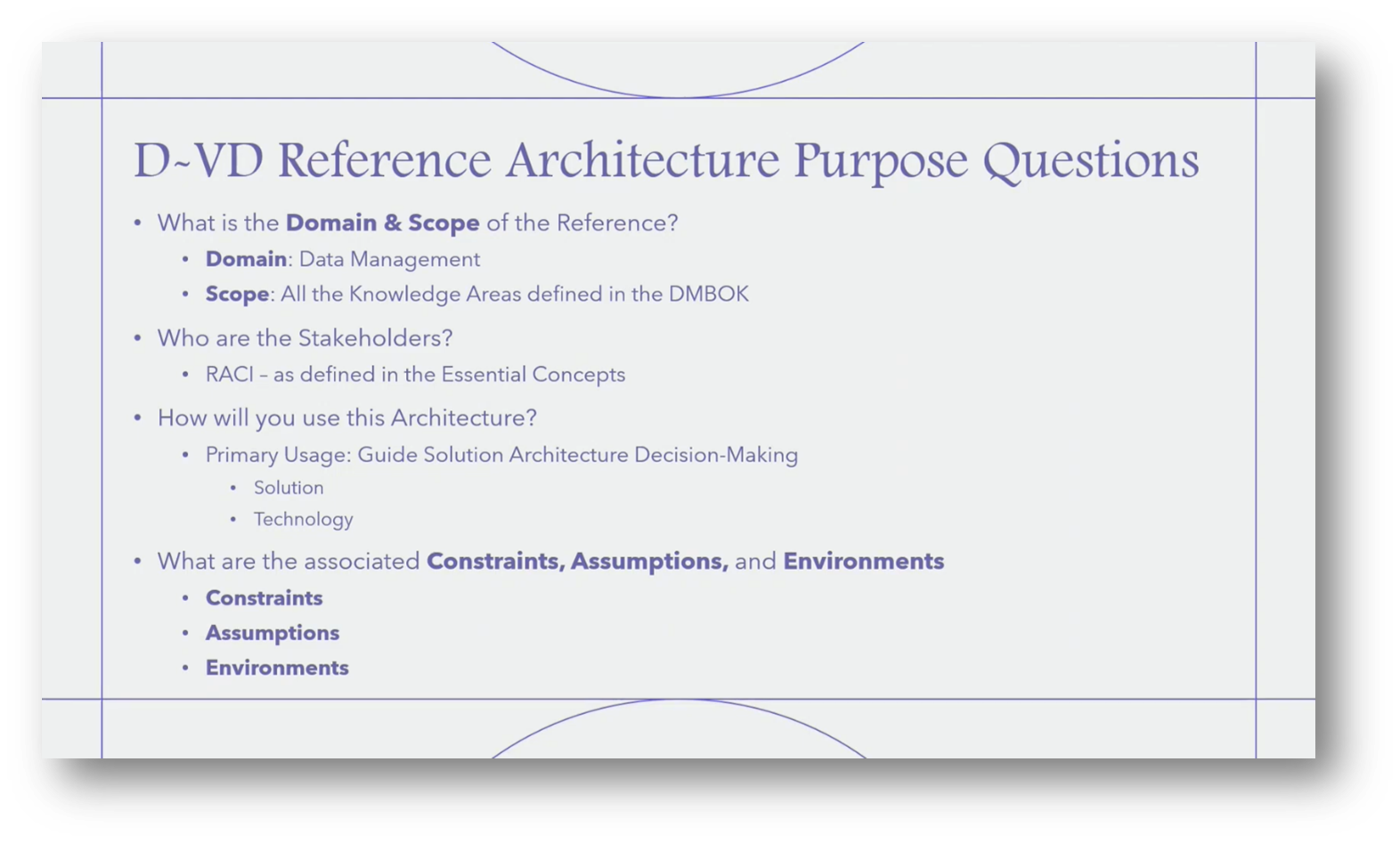

The concept of "awaiting" plays a crucial role in the development of Reference Architectures, particularly regarding Data Management. A Data Manager is responsible for articulating the purpose and principles of the architecture, ensuring clarity and focus. To formulate a purpose statement, key questions are addressed, starting with the domain and scope.

The domain is identified as Data Management, while the scope determines which areas are relevant, acknowledging that certain elements, such as Reference Data, Master Data, or advanced analytics, may not be immediately necessary based on the organisation's maturity level. Additionally, it is essential to avoid introducing unnecessary components that could lead to confusion, maintaining clarity and focus on current needs. Identifying stakeholders is also a critical aspect of this process.

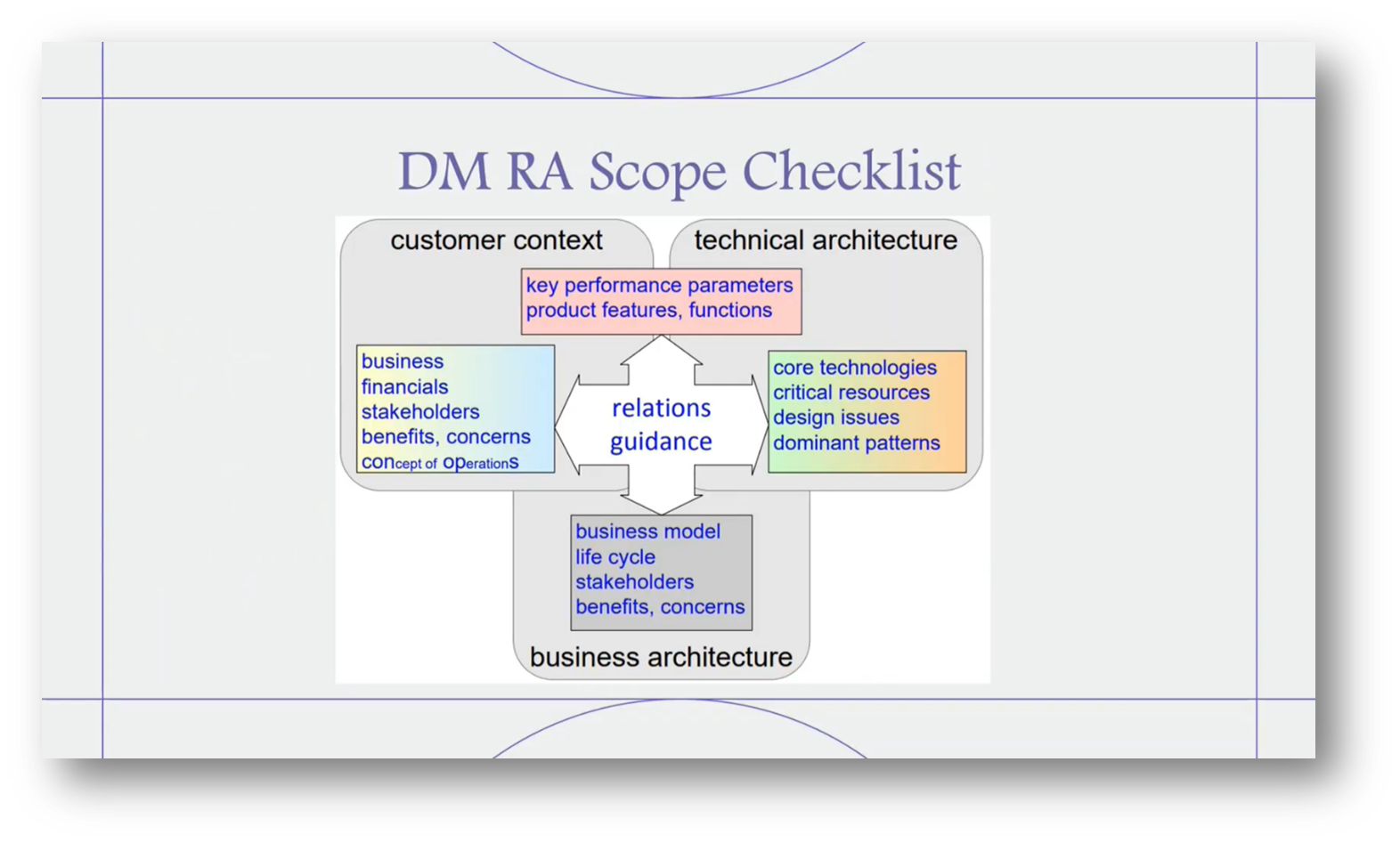

The Reference Architecture serves as a guiding framework for solution architects tasked with addressing specific departmental needs through the implementation of technology solutions. This architecture outlines the necessary principles for developing the solution architecture, considering various constraints such as budget, environment, and vendor preferences, whether single vendor or best-of-breed. Furthermore, it provides a checklist for scoping the project's requirements, ensuring that all essential factors are considered during the solution implementation process.

Figure 23 Data Manager: Reference Architecture

Figure 24 D-VD Reference Architecture Elements

Figure 25 D-VD Reference Architecture Purpose Questions

The Importance of Data Management in Enterprise Architecture

Howard highlighted the importance of understanding customer context and stakeholder concerns when providing technology solutions to various departments. Business architecture plays a critical role in shaping the business model, impacting key performance parameters such as products, features, core technologies, and critical resources.

Currently, dominant Data Management patterns, especially in cloud environments, include orchestration patterns for data flows. The knowledge areas related to Data Management are crucial. Still, a notable gap exists regarding the vision for Data Quality, which needs to be addressed to ensure a comprehensive approach to Data Management strategies.

Howard then focused on developing a comprehensive data architecture by identifying key modules and their interconnections, specifically highlighting the importance of including a Data Management component that had been overlooked. He emphasised that operating in silos—focusing solely on data architecture or design—can lead to missing critical integrations necessary for a cohesive enterprise approach.

Figure 26 DM RA Scope Checklist

Figure 27 DM RA Scope

Figure 28 Data-Value Driven Management Playbook

Figure 29 What are we missing?

Vendor Assessment and Interoperability in Data Management

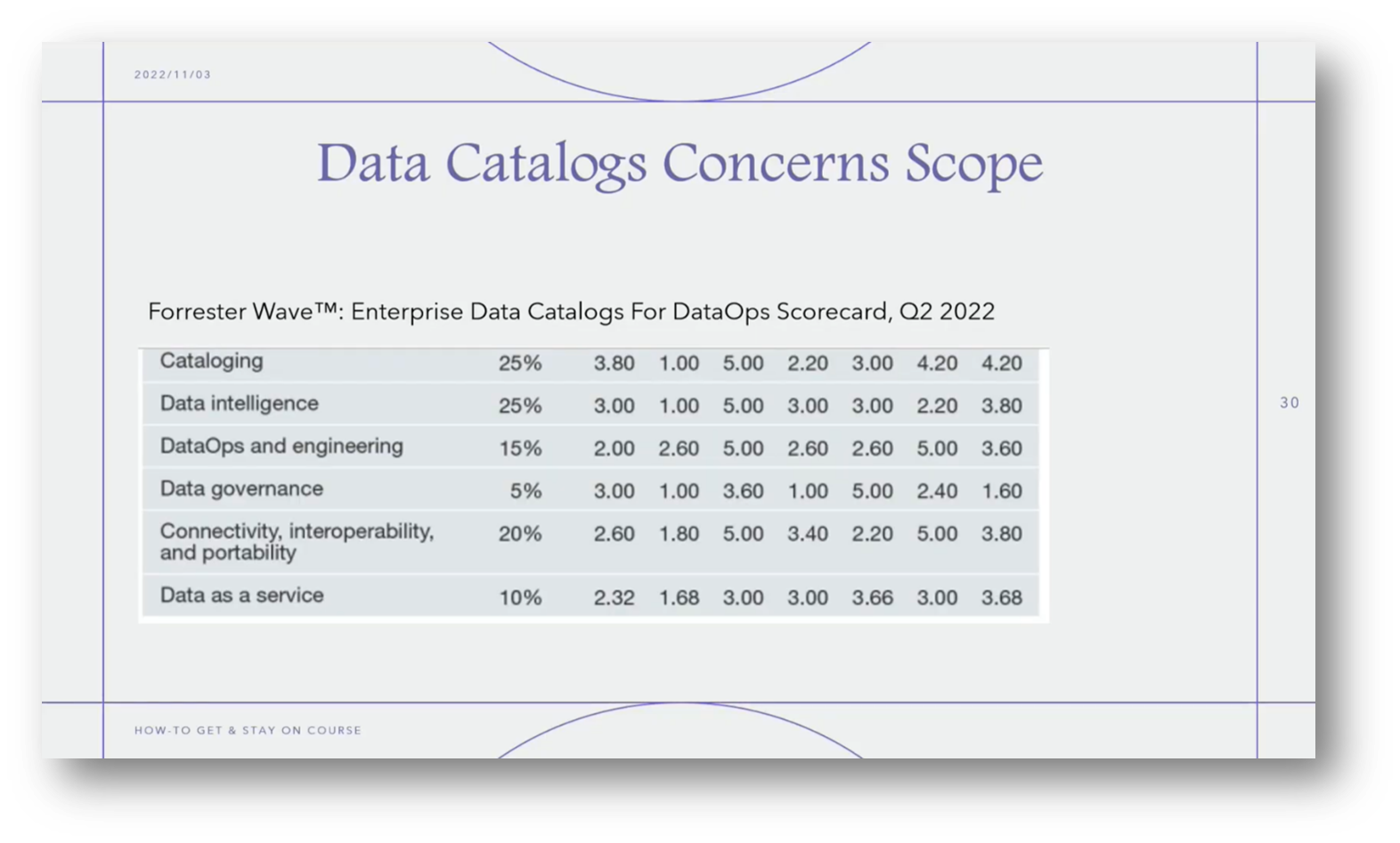

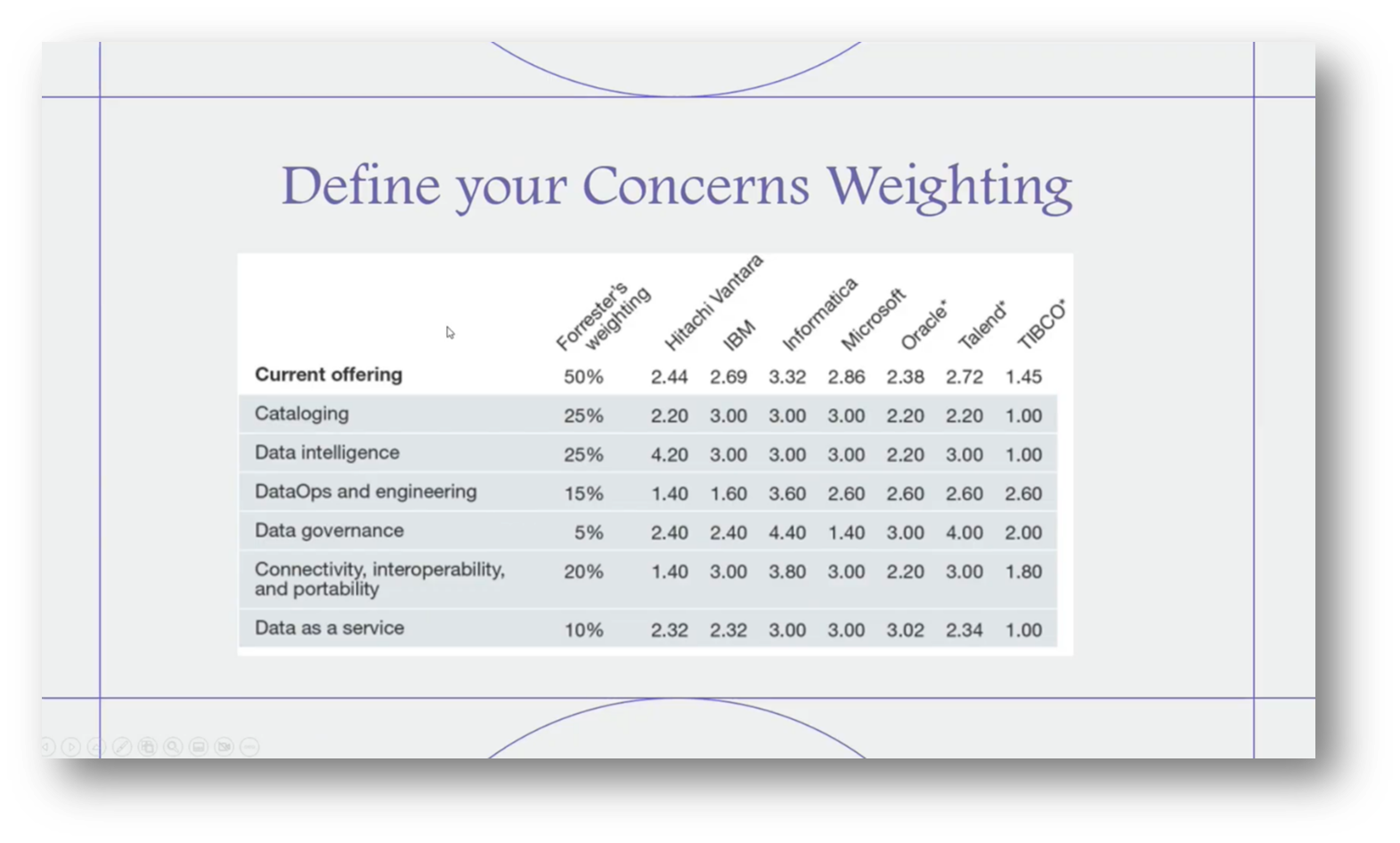

In evaluating data catalogue solutions, it's essential to consider the assessment criteria outlined by Forrester and Gartner, which emphasise the specific features that influence product ratings. For instance, Howard's example weightings were cataloguing (25%), Data Intelligence (25%), data operations and engineering (15%), connectivity (20%), Data as a Service (10%), and Data Governance (5%), totalling 100%. Using such weightings, organisations can communicate their requirements to vendors such as IBM, Informatica, or Microsoft and obtain scores based on the features that matter most to them.
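The weightings above translate into a straightforward weighted sum. The sketch below uses the percentages from the text; the 0-5 scoring scale and the vendor scores are assumptions invented for the example.

```python
# Example weightings from the text, in whole percentages.
WEIGHTS = {
    "cataloguing": 25,
    "data intelligence": 25,
    "data operations and engineering": 15,
    "connectivity": 20,
    "data as a service": 10,
    "data governance": 5,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-concern scores (assumed 0-5 scale) into one weighted score."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100%")
    # A concern the vendor did not score counts as 0.
    return sum(w * scores.get(concern, 0) for concern, w in weights.items()) / 100


# Invented scores for a single vendor.
vendor_a = {
    "cataloguing": 4,
    "data intelligence": 3,
    "data operations and engineering": 5,
    "connectivity": 4,
    "data as a service": 2,
    "data governance": 5,
}
print(weighted_score(vendor_a))  # 3.75
```

Keeping the weights as whole percentages makes the "must total 100%" check exact, and changing a single weight shows immediately how sensitive a vendor's ranking is to the organisation's priorities.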

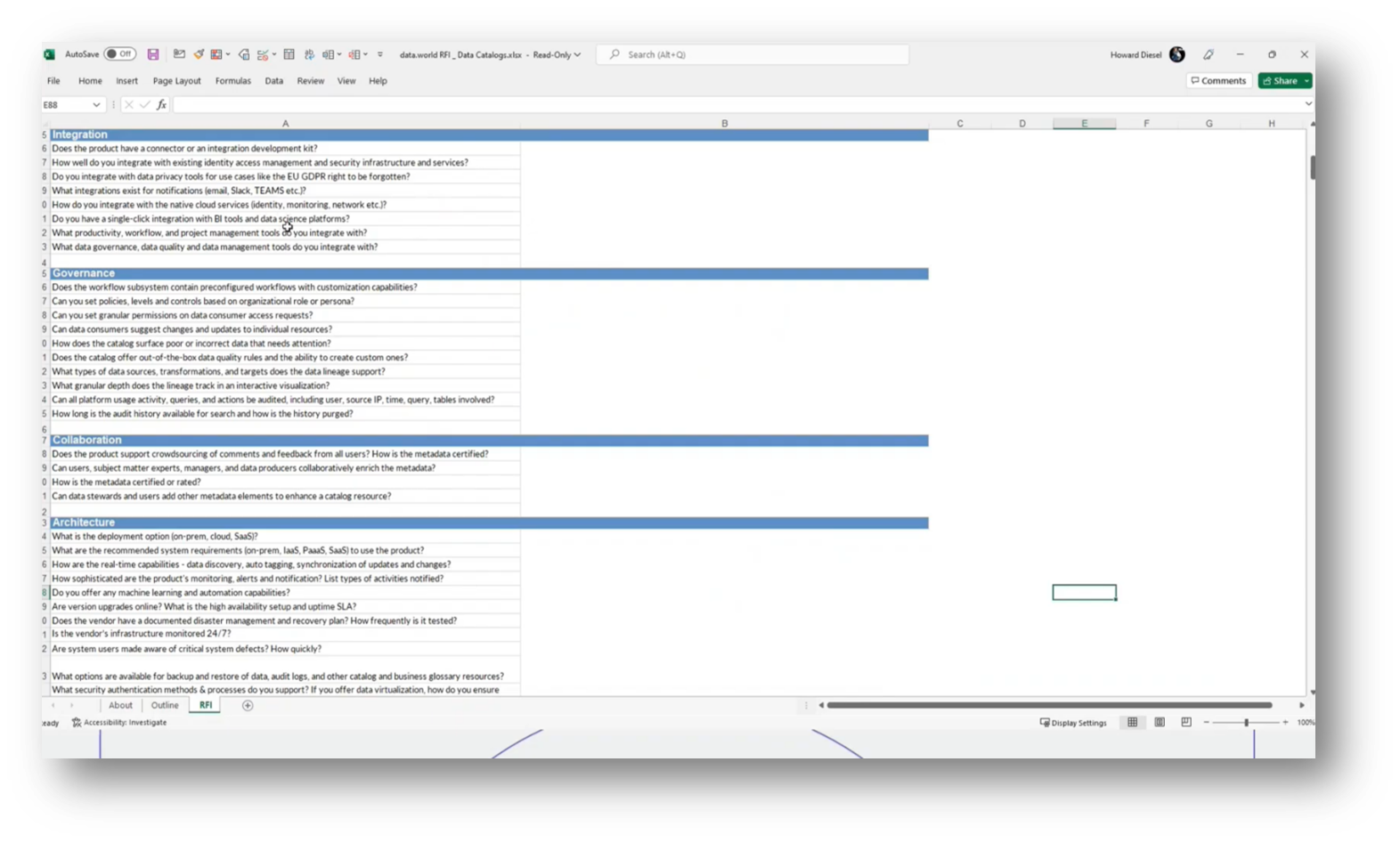

The process of evaluating vendors involves a detailed weighting feature built upon key knowledge areas and concepts, which can become quite comprehensive. Essential questions must be posed to the vendor, such as the capability to collect specific Metadata, the granularity of data, and whether the business glossary encompasses relevant terms and definitions.

This structured approach involves gathering responses through a Request for Information (RFI) and assigning scores based on the answers received, enabling a clear comparison across different vendors. Such a systematic analysis helps ensure informed decision-making rather than basing choices solely on personal experiences or social engagements, such as enjoying a lunch with a vendor like Informatica.
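The RFI scoring step can be sketched as two stages: average the question-level scores within each core concern, then apply the concern weightings to compare vendors. The concerns, weights, vendor names and 0-5 scores below are hypothetical.

```python
from statistics import mean


def concern_scores(question_scores):
    """Average the question-level scores within each core concern."""
    return {concern: mean(scores) for concern, scores in question_scores.items()}


def rank(vendors, weights):
    """Return (vendor, weighted score) pairs, best first."""
    totals = {}
    for vendor, questions in vendors.items():
        scores = concern_scores(questions)
        weighted = sum(w * scores.get(c, 0) for c, w in weights.items())
        totals[vendor] = weighted / sum(weights.values())
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Invented weights and RFI answers, scored 0-5 per question.
weights = {"metadata collection": 60, "business glossary": 40}
vendors = {
    "Vendor A": {"metadata collection": [5, 3], "business glossary": [4]},
    "Vendor B": {"metadata collection": [2, 2], "business glossary": [5, 5]},
}
ranking = rank(vendors, weights)
print(ranking)
```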

Howard then highlighted the importance of vendor interoperability in relation to the organisation's Reference Architecture. Key considerations include integration capabilities such as the availability of connectors and integration development kits, support for identity, data privacy, and notification integrations with platforms like Slack, native cloud services, and single-click integration tools.

A structured approach involves formulating specific questions to evaluate interoperability features, including the implementation of a canonical model and support for SOA interfaces. This assessment aims to bridge the gap between vendor offerings, industry best practices, and the actual technological landscape within the organisation.
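The interoperability questions can be kept as a reusable checklist and a vendor's answers compared against it. The question wording and the yes/no answer format below are assumptions for illustration; any question left unanswered is treated as a gap.

```python
# Hypothetical checklist paraphrasing the considerations above.
INTEROP_QUESTIONS = [
    "Are prebuilt connectors available for our source systems?",
    "Is an integration development kit provided?",
    "Are identity, data privacy and notification integrations (e.g. Slack) supported?",
    "Are native cloud services supported?",
    "Is single-click integration available?",
    "Is a canonical model implemented?",
    "Are SOA interfaces supported?",
]


def interop_gaps(answers):
    """Return questions answered 'no' or not answered at all."""
    return [q for q in INTEROP_QUESTIONS if answers.get(q) is not True]


# Invented answers from one vendor.
answers = {INTEROP_QUESTIONS[0]: True, INTEROP_QUESTIONS[5]: False}
gaps = interop_gaps(answers)
coverage = 1 - len(gaps) / len(INTEROP_QUESTIONS)
print(f"{len(gaps)} gaps, coverage {coverage:.0%}")
```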

Figure 30 Data Catalogue Concerns: Scope

Figure 31 "Define your Concerns Weighting"

Figure 32 More Detail Questions by Core Concern

Figure 33 Data World RFI

Implementing Data Management Strategies

When starting to build the Reference Architecture, it's crucial to have well-defined policies and procedures in place, as well as a clear understanding of the necessary outputs, such as delivering Data as a Service, creating a data catalogue, implementing Data Intelligence, and establishing data operations. To ensure that these initiatives align with industry standards, resources from organisations such as Forrester and Gartner should be reviewed. These resources describe the core features and evaluation criteria used to rate products, which supports the selection process without requiring direct engagement with vendors. This approach gives a comprehensive understanding of the technology elements critical to a successful implementation.

Howard outlined the key aspects of Master Data Management (MDM) in relation to current technology and industry best practices. He highlighted the importance of core features such as multi-domain support and hierarchy management, which present unique challenges across different industries.

For instance, the adequacy of an MDM system in supporting various product management systems and a chart of accounts is crucial for sectors such as finance and manufacturing. The application of these features must be tailored to specific industries, as exemplified by the differences in requirements between hypermarkets and software environments. Furthermore, the evaluation of these MDM capabilities includes different levels of ratings to assess their effectiveness in Data Integration and overall management.

Figure 34 DoD Reference Architecture White Paper

Data Management and Vendor Assessment

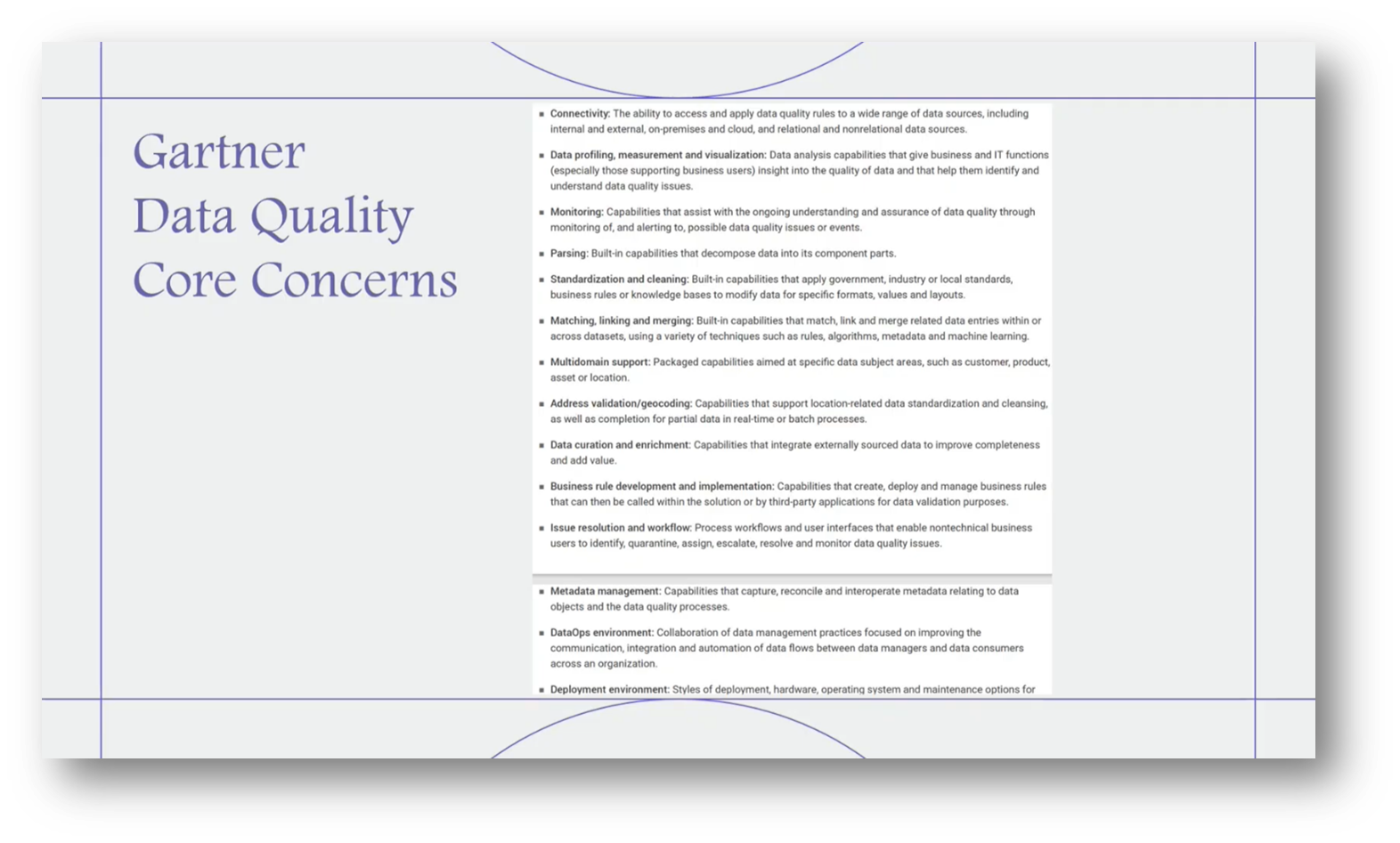

Howard outlined key considerations for automating Data Management processes, emphasising the importance of understanding specific requirements such as desired automation terms and architectural levels. He addressed core concerns related to Master Data and information quality, highlighting critical elements like hierarchy management, data standardisation, cleaning, matching, linking, and multi-domain support.

The significance of address validation and data curation, particularly for industries with diverse data subject areas, was highlighted. Howard then touched on the implications of data sharing in cloud environments and various data engineering approaches. He focused on integrating data across multiple cloud systems and emphasised the importance of creating a comprehensive framework for evaluating vendors, particularly in enterprise-level appraisals.

Key points include the necessity of understanding core concerns in various knowledge areas to develop a systematic list of questions that inform a rating system for vendors. Howard emphasised the significance of this assessment in ensuring effective decision-making, noting that each business's needs and priorities will differ.

When evaluating open-source solutions versus established vendors, it's essential to identify the critical features where open-source solutions may lag behind and consider whether those features are necessary for your current needs. Focus on your core principles, policies, and procedures, and assess whether any new features introduced by vendors could render your Reference Architecture invalid.

Howard noted that many vendors may focus on functionalities that you might not need now or in the future. It's crucial to align features with your business requirements and industry standards for Data Management, ensuring that your choices support long-term growth without unnecessary complexity, rather than being swayed by additional offerings from vendors like Informatica.
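The open-source comparison can be framed as simple set operations: find the critical features an open-source option lacks, then separate those needed now (blockers) from those that can wait. The feature names and the `features_to_review` helper are hypothetical.

```python
def features_to_review(critical, required_now, oss_supported):
    """Split critical features the open-source option lacks into
    blockers (needed now) and deferrable gaps (not needed now)."""
    lagging = critical - oss_supported
    return lagging & required_now, lagging - required_now


# Invented feature sets for illustration.
critical = {"lineage", "hierarchy management", "address validation"}
required_now = {"lineage"}
oss_supported = {"hierarchy management"}

blockers, deferrable = features_to_review(critical, required_now, oss_supported)
print("Blockers:", sorted(blockers))
print("Can wait:", sorted(deferrable))
```

An empty blocker set suggests the open-source option meets current needs, with the deferrable gaps worth revisiting when the Reference Architecture changes.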

Figure 35 Gartner Data Quality Core Concerns

Figure 36 Gartner Data Sharing and Integration Core Concerns

Figure 37 “D-VD Reference Architecture Principles”

Architectural Principles and Their Implications

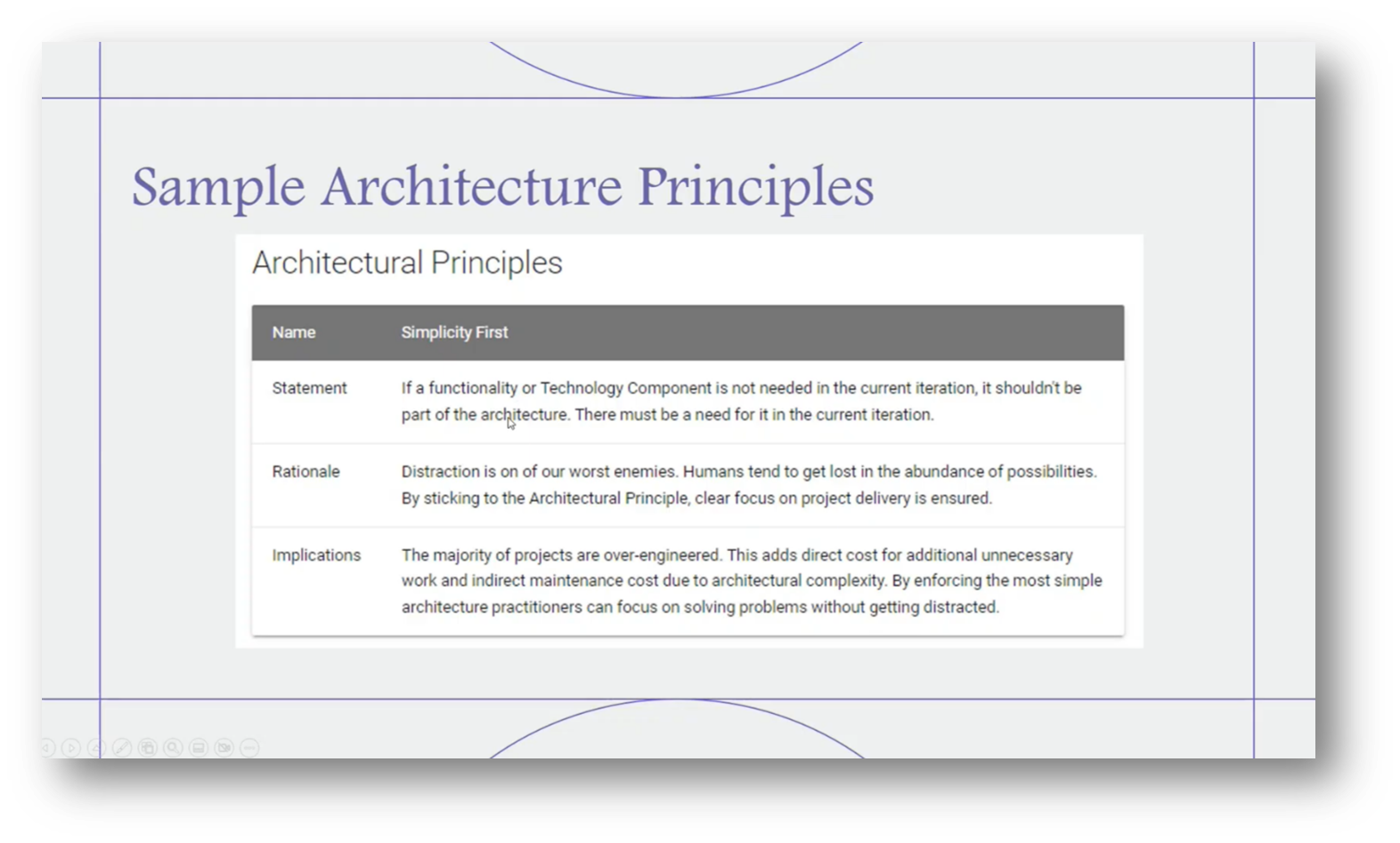

Architectural principles are crucial for guiding design decisions at a fundamental level. One notable example is the principle of "Simplicity First," which states that if functionality is not required in the current iteration of a project, it should not be included in the architecture. This principle aims to reduce overengineering, thereby minimising unnecessary costs and workloads.

By adhering to a simple architecture, practitioners can concentrate on effectively solving problems without diverting attention to irrelevant discussions. Clearly defining concerns and establishing their weightings is essential to prevent unnecessary complexities in the project. The framework provided by P3 can serve as an effective starting point for applying these principles.
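The "Simplicity First" principle amounts to a filter on candidate components. In this sketch, each component carries an assumed flag stating whether the current iteration requires it; everything else is deferred rather than built. The component names are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    required_this_iteration: bool


def simplicity_first(candidates):
    """Split candidates into those included now and those deferred."""
    included = [c.name for c in candidates if c.required_this_iteration]
    deferred = [c.name for c in candidates if not c.required_this_iteration]
    return included, deferred


# Invented candidate components for a first iteration.
candidates = [
    Component("data catalogue", True),
    Component("business glossary", True),
    Component("real-time streaming", False),
]
included, deferred = simplicity_first(candidates)
print("Include:", included)
print("Defer:", deferred)
```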

Figure 38 Sample Architecture Principles

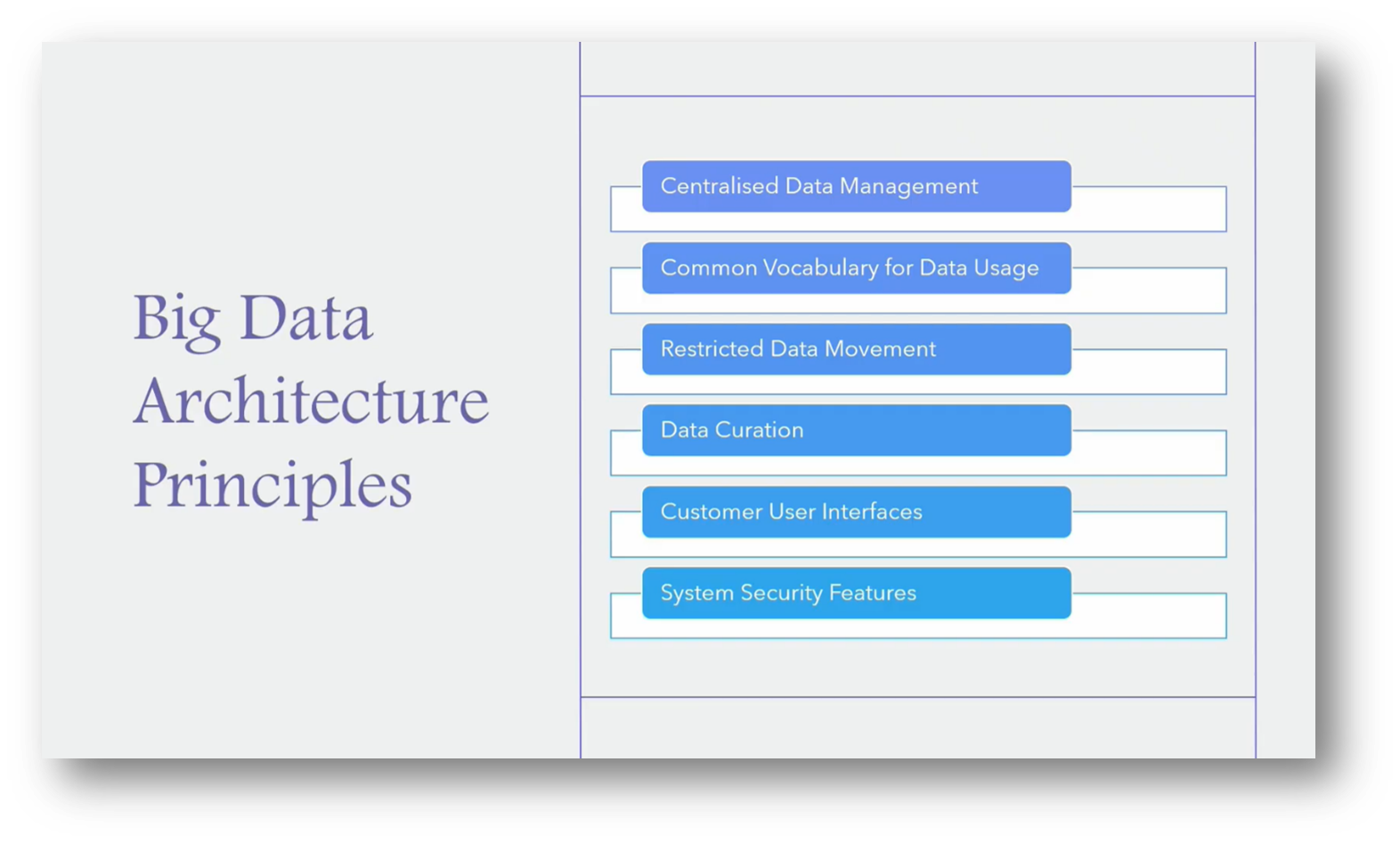

Big Data Architecture Principles and Data Management

Big Data architecture principles emphasise centralised Data Management, commonly represented by a data lake that serves as a unified repository using a common vocabulary while minimising data movement. Snowflake exemplifies these principles by eliminating data copying and instead offering different views of the same dataset.

Effective data curation involves building specific user interfaces on top of the data without redundant development. It is also crucial to assess Data Management products against frameworks such as the DMBoK and NDMO principles, focusing on asset management, economic valuation, and the ability to support quality Metadata throughout data planning and implementation.

Howard then emphasised the importance of a Reference Data Architecture that is driven by value and guided by governance. He highlighted that decision-making should be informed by specific use cases, ensuring that resources are allocated effectively. Stakeholders, including citizens, data professionals, and executives, all play vital roles in this process: citizens contribute business requirements, data professionals focus on deliverables and assessments, and executives prioritise value, ROI, and vendor reliability. Lastly, Howard noted that the aim is to create a structured, useful framework that can be applied effectively.

Figure 39 “Big Data Architecture Principles”

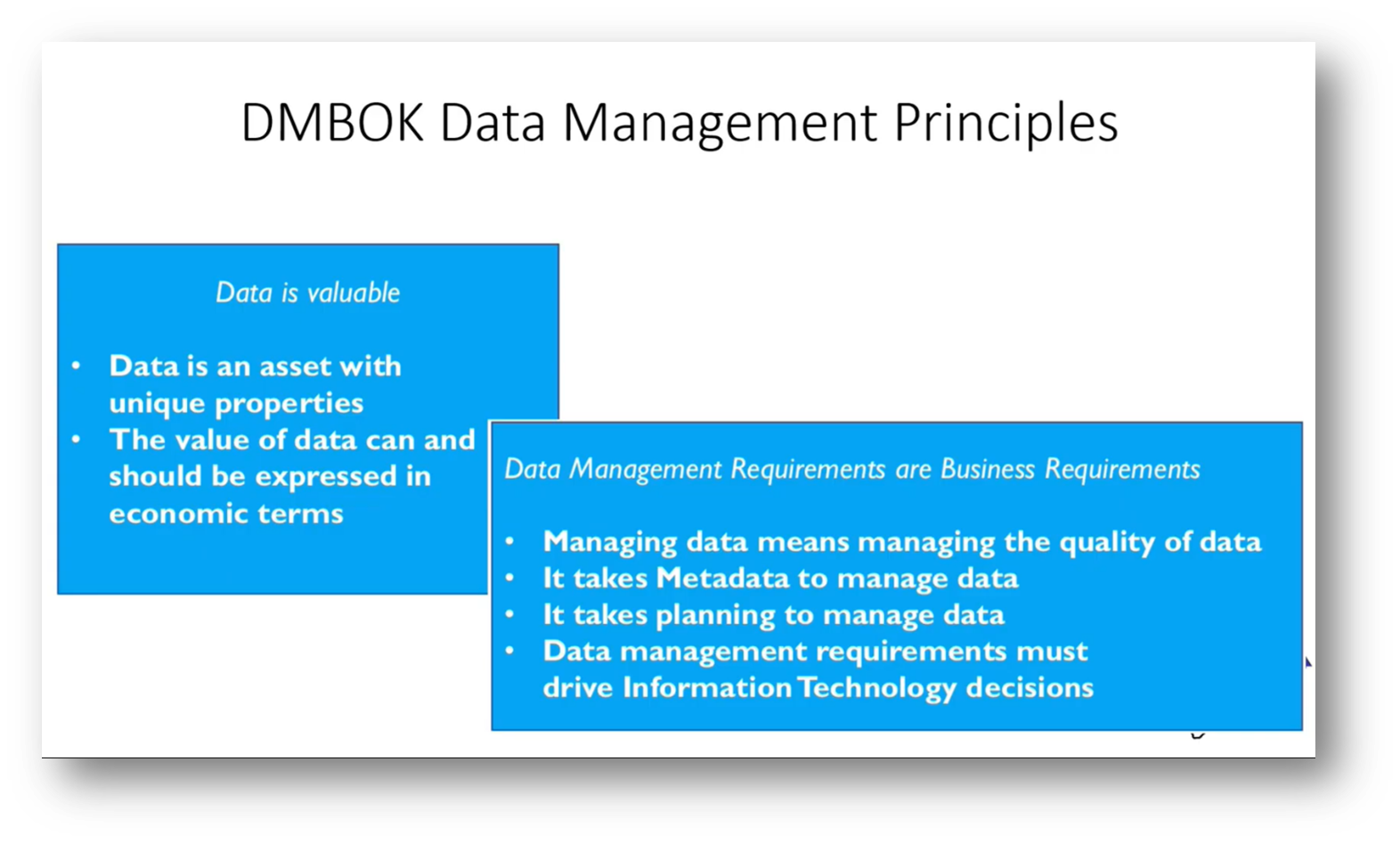

Figure 40 DMBoK Data Management Principles

Figure 41 “Data-Value Driven Data Management Principles”

If you would like to join the discussion, please visit our community platform, the Data Professional Expedition.

Additionally, if you would like to be a guest speaker on a future webinar, kindly contact Debbie (social@modelwaresystems.com)

Don’t forget to join our exciting LinkedIn and Meetup data communities so you don’t miss out!