Data Management Maturity Assessment for Data Citizens

Executive Summary

This webinar provides an overview of the importance of data management maturity assessments, readiness assessments, stakeholder involvement, and training to achieve business agility. Howard Diesel emphasises the role of data citizens, semantics, data modelling, and the structure and benefits of enterprise data models. Additionally, he covers data flows, lineage, and the data value chain and discusses different approaches to data storage and knowledge graphs. Finally, the webinar highlights the challenges of implementing reference architectures and the significance of examples in understanding the processes.

Webinar Details

Title: Data Management Maturity Assessments for Data Citizens

Date: 25 February 2022

Presenter: Howard Diesel

Meetup Group: Data Citizens

Write-up Author: Howard Diesel

Contents

Maturity Assessment and Data Architecture

Data Management Maturity Assessment

Readiness Assessment and Maturity Models

Data Architecture Assessment

Importance of Readiness Assessments in Achieving Goals

Importance of Stakeholder Involvement in Data Architecture Training

The Role of Data Citizens in Data Architecture

The Importance of Data Architecture and Training Processes in Achieving Business Agility

Importance of Semantics and Data Architecture

Levels of Deliverable Management and Training

Structure and Benefits of the Enterprise Data Model

Data Modelling and Data Flow

Data Flows and Data Lineage

Data Value Chain and Data as a Service

Data Management and Maturity Assessment Training

Discussion on the Challenges of Implementing Reference Architectures and the Importance of Examples in Understanding Processes

Different Approaches to Data Storage and Knowledge Graphs

Maturity Assessment and Data Architecture

Howard Diesel emphasises the importance of data citizens in completing a maturity assessment that improves how effectively data management delivers business value. Technical people are also involved, but the engagement and participation of data citizens are crucial if the assessment is to be automated and repeated continually. With the right education and training, data citizens can complete the maturity assessment themselves. Data architecture is used as the example throughout to show why their involvement matters. Executives were asked qualitative questions about their maturity in data architecture to gauge what they know and how they feel about it. They can only answer such questions accurately if they understand data architecture.

Figure 1 Data Management Maturity Assessment for Data Citizens

Data Management Maturity Assessment

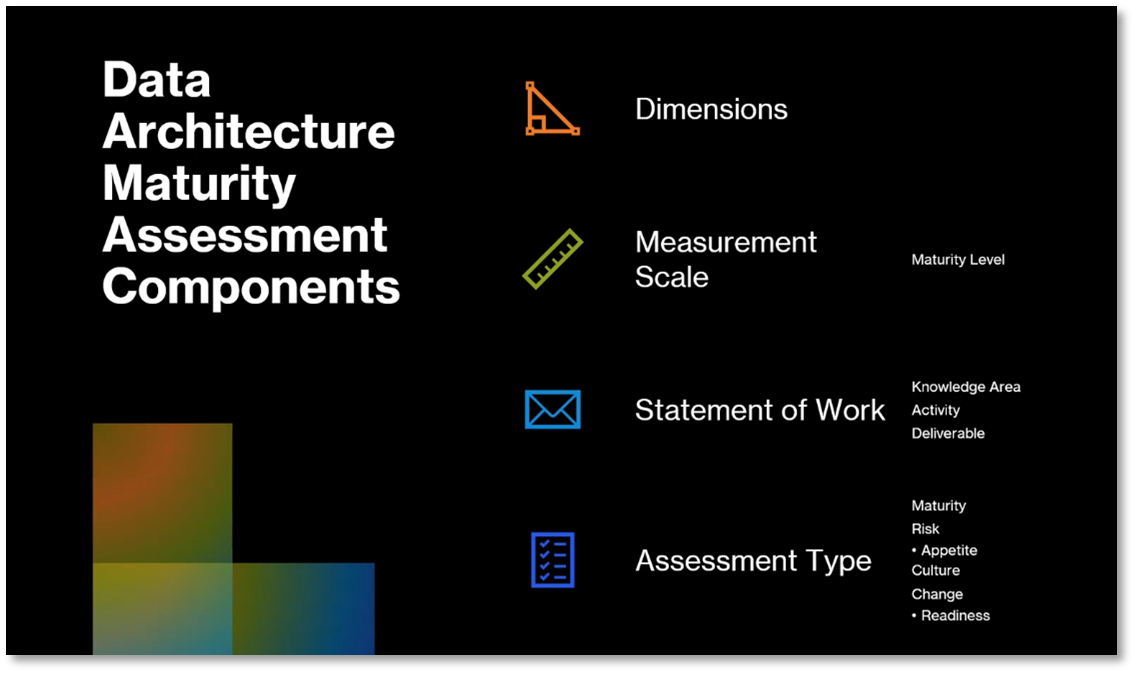

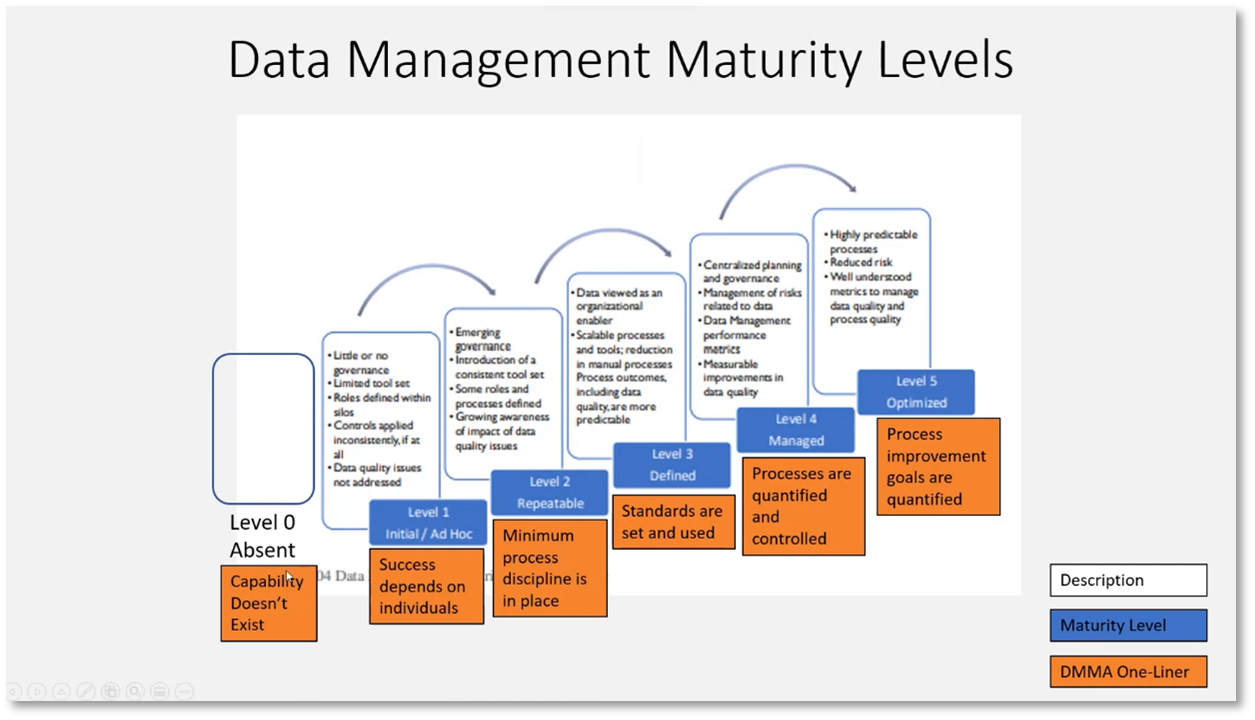

An overview of the technical team's maturity level assessment is provided, with emphasis on understanding the current state of data management and its challenges. The assessment can be conducted at various levels, including corporate, business unit, and reporting entity, and it involves enabling units such as the BI and data architecture teams. The involvement of business stakeholders, or data citizens, is crucial throughout the assessment process. The scope of the assessment includes business processes, data quality, metadata, and document content. Each dimension is rated on a scale that typically runs from one to five, with some models also using zero. The assessment type may vary and can be a maturity, risk, cultural, or change assessment.

Figure 2 Data Management Maturity Assessment (DMMA) Core Concepts

Figure 3 Data Architecture Maturity Assessment Components

Readiness Assessment and Maturity Models

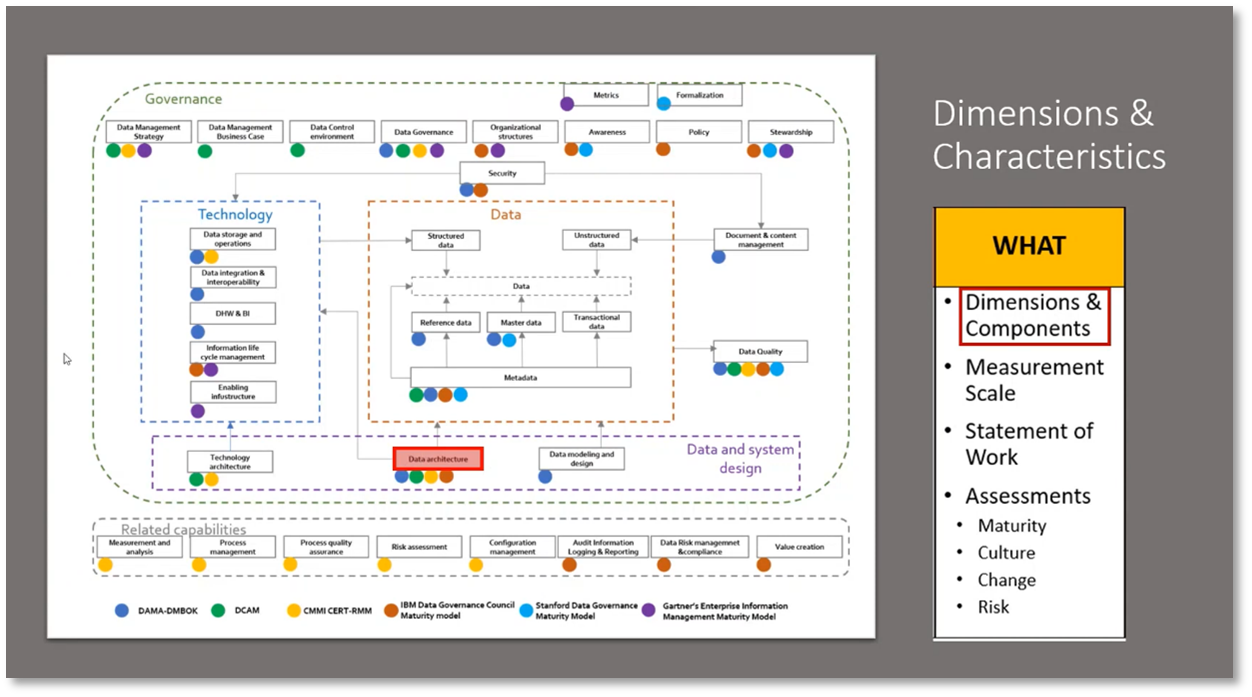

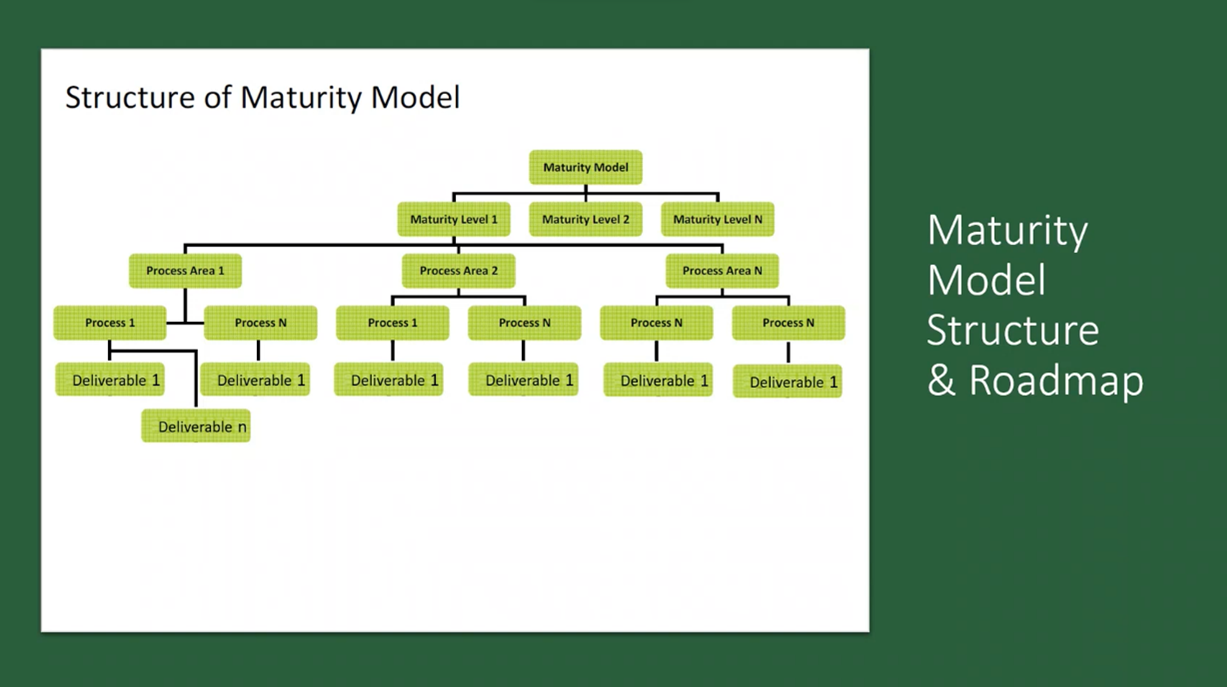

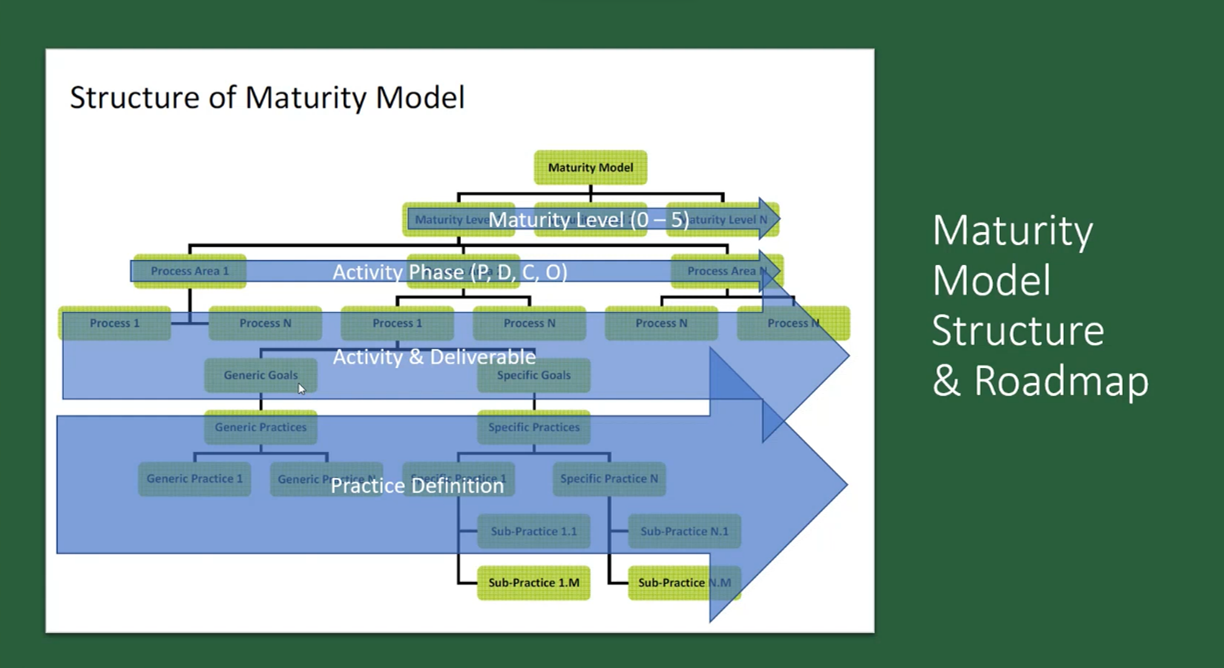

Howard mentions the readiness assessment of knowledge areas, focusing on data architecture. It is important to assess related capabilities such as risk assessment and value creation for each knowledge area. Maturity levels range from zero to five, where zero indicates the absence of capability. Maturity models consist of activity phases (planning, development, control, and operations), processes within the process area, and deliverables. Assessing both the process and deliverables is crucial for maturity assessment. Finally, business and data management processes can be broken down into goals and practices.
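The point that both processes and deliverables must be assessed can be sketched in a few lines of Python. This is a minimal illustration, not the scoring method used in the webinar: the `Rating` class, the example ratings, and the rule of reporting the lower of the two averages as the overall level are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Rating:
    name: str   # process or deliverable being assessed (hypothetical names below)
    kind: str   # "process" or "deliverable"
    level: int  # 0 (capability absent) to 5


def knowledge_area_maturity(ratings):
    """Average process and deliverable levels separately.

    Assumption: the overall level is the lower of the two averages,
    so a strong process cannot hide weak deliverables (or vice versa).
    """
    for r in ratings:
        if not 0 <= r.level <= 5:
            raise ValueError(f"{r.name}: level must be between 0 and 5")
    process = mean(r.level for r in ratings if r.kind == "process")
    deliverable = mean(r.level for r in ratings if r.kind == "deliverable")
    return {
        "process": process,
        "deliverable": deliverable,
        "overall": min(process, deliverable),
    }


# Hypothetical ratings for the data architecture knowledge area.
ratings = [
    Rating("Define data architecture practice", "process", 2),
    Rating("Maintain enterprise data model", "process", 3),
    Rating("Enterprise data model", "deliverable", 1),
    Rating("Data flow diagrams", "deliverable", 2),
]
result = knowledge_area_maturity(ratings)
print(result)
```

Keeping the two averages separate makes it visible when a practice exists on paper but its deliverables lag behind.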

Figure 4 Dimensions & Characteristics

Figure 5 Data Management Maturity Levels

Figure 6 Maturity Model Structure & Roadmap

Figure 7 Maturity Model Structure & Roadmap Continued

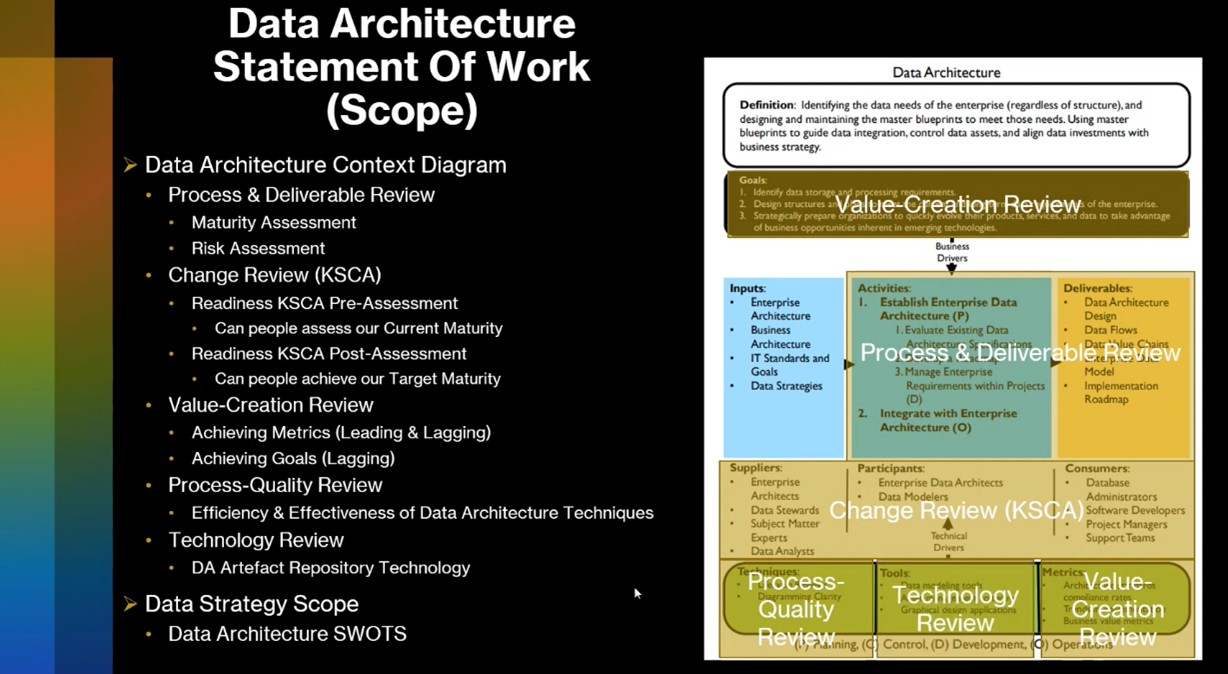

Data Architecture Assessment

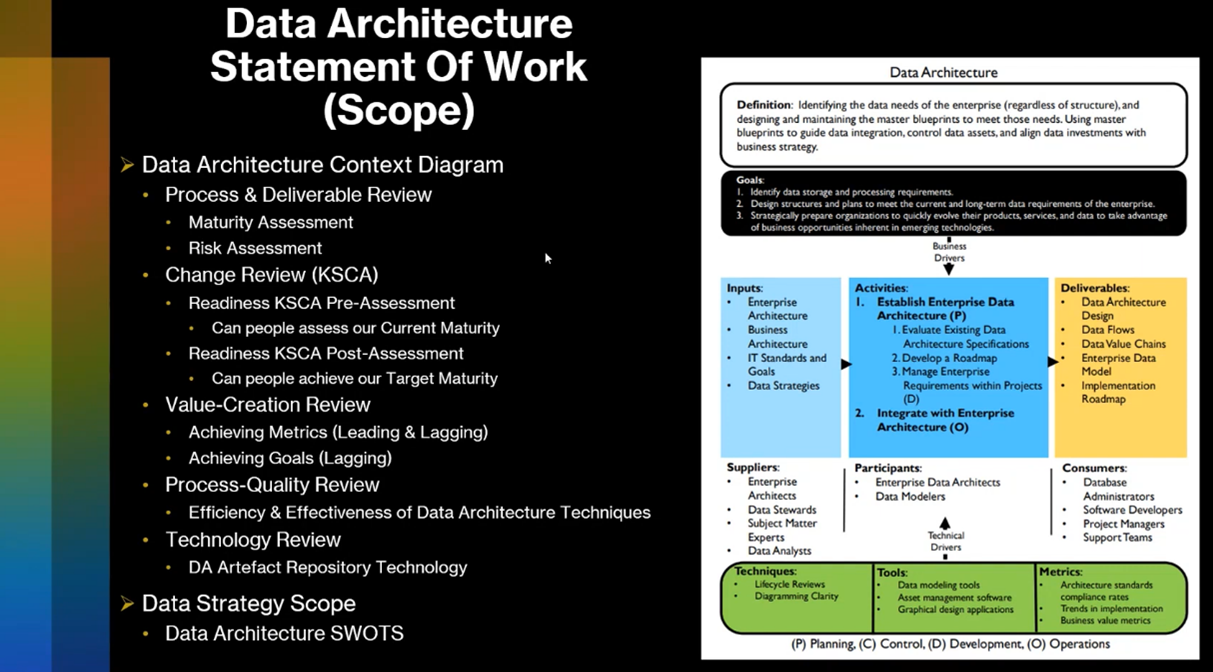

The practice of data architecture involves setting goals and work methods. To assess the effectiveness of this practice, a review is conducted to evaluate process, deliverables, maturity, risk, and change management. The review also assesses the capabilities, knowledge, skills, competencies, and attitudes of the people involved. In addition, metrics and goals are established to create value in the data architecture practice. Leading indicators, such as standards and compliance ratios, measure progress. Process quality is defined and assured to ensure effective data architecture techniques. The use of technology within the organisation is also evaluated. A data strategy is included to identify any issues or gaps related to data architecture. However, participants are sometimes unfamiliar with what is being assessed, so each area has to be explained to them manually, which can take up to two hours.

Figure 8 Data Architecture Statement of Work (Scope)

Figure 9 Data Architecture Statement of Work (Scope) continued

Importance of Readiness Assessments in Achieving Goals

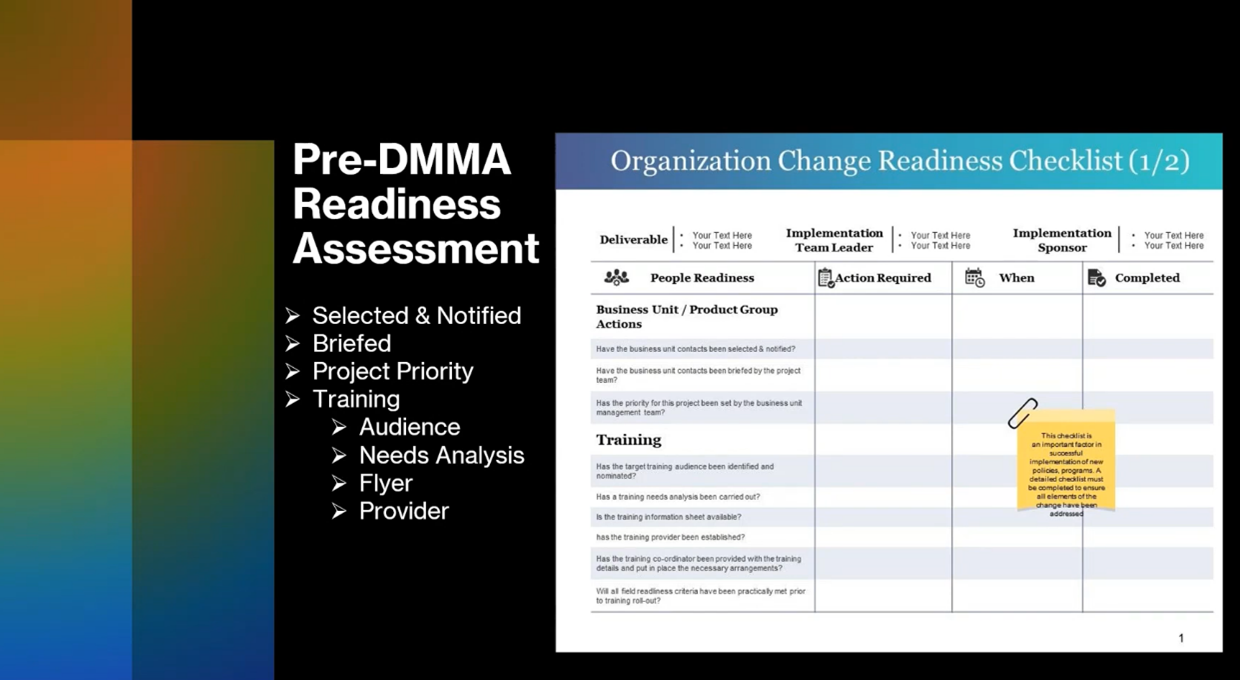

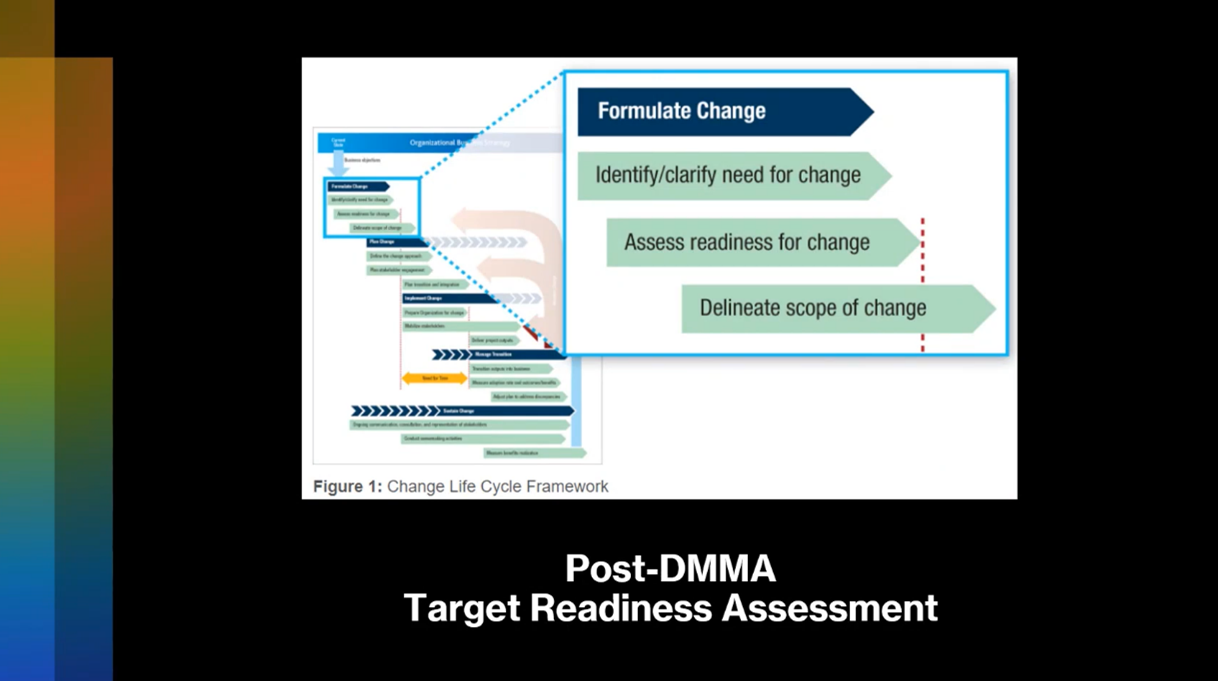

Without proper training upfront, implementing a deliverable can be slow and frustrating for many people. A lack of awareness of, and desire for, change can make it difficult to get people committed to the process. Two types of readiness assessments were instituted to address these issues: pre-assessment and post-assessment.

Pre-assessment involves selecting and notifying individuals, briefing them about their roles, ensuring the project priority is known, and providing appropriate training. This helps improve readiness and prevent issues from arising during implementation.

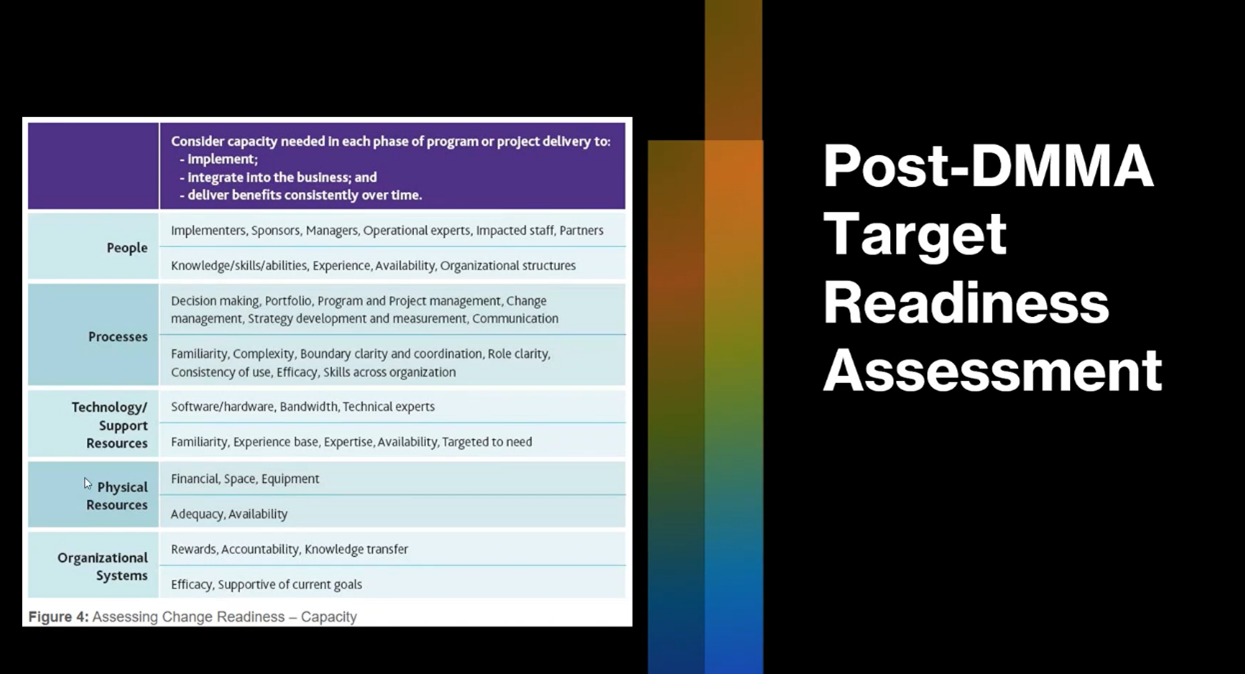

Post-assessment evaluates the organisation's people, processes, technology, physical resources, and systems. This helps identify any issues that may have arisen during implementation and determine if any improvements need to be made. Template breakdowns are provided for conducting the post-readiness assessment, making it easier for organisations to evaluate their readiness and make necessary changes.

Figure 10 Pre-DMMA Readiness Assessment

Figure 11 Post-DMMA Readiness Assessment

Figure 12 Post-DMMA Target Readiness Assessment

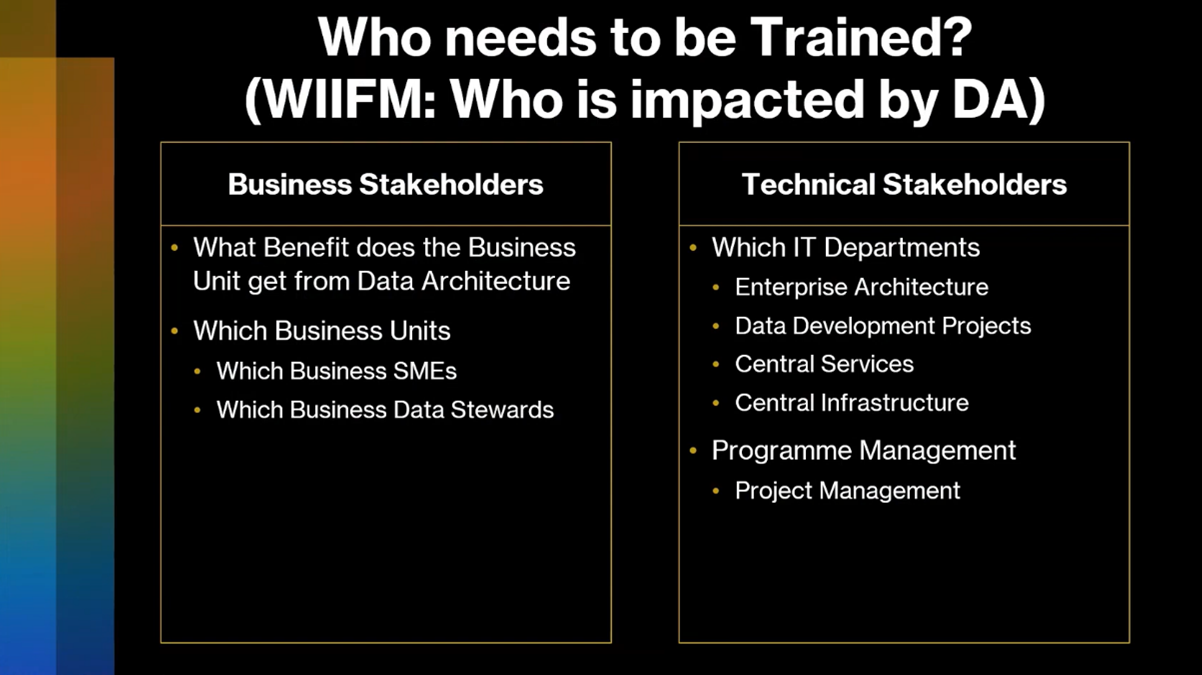

Importance of Stakeholder Involvement in Data Architecture Training

Howard highlights the key considerations for training participants on data architecture. He emphasises the importance of identifying the business units involved, selecting appropriate stakeholders, and encouraging stakeholders to comment on the value they expect to gain from data architecture.

Technical stakeholders, such as IT departments, enterprise architecture, data development projects, and project management, should also be involved in the training. Central services and infrastructure teams, such as BI teams and centralised data modelling, should understand the role of data architecture and how it can benefit their work.

Lastly, it's essential to consider the project management methodology being used and its impact on integrating data architecture into the project. Howard provides actionable insights for effectively training participants on data architecture.

Figure 13 Who needs to be trained? Who is impacted?

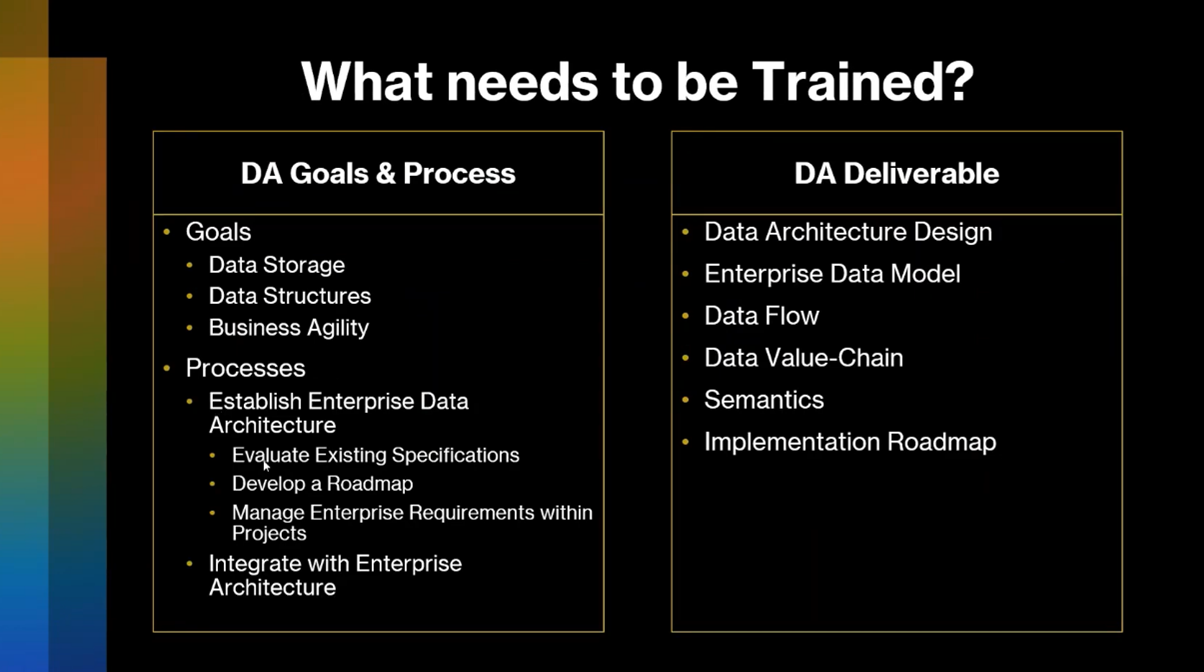

The Role of Data Citizens in Data Architecture

Data citizens who prioritise functional development over documentation or design can pose challenges. However, they can contribute significantly to data architecture processes by managing enterprise requirements within projects. The key question for data citizens is, "What's in it for me?" They understand the assessment better if they can see it working. Data citizens deeply understand data storage, data structures, and business agility. They can provide valuable insights into how quickly the business can respond to emerging AI and machine learning trends. Nonetheless, they may need assistance with fixing data storage issues and with understanding how data structures can help them.

Figure 14 What needs to be Trained?

The Importance of Data Architecture and Training Processes in Achieving Business Agility

Data architects, also known as data ontologists, are essential to enabling and improving business agility through data architecture. Integrating data architecture with enterprise, business, application, and technology architecture is critical to achieving business agility, and failing to do so can impede it. However, many data citizens may not be aware of the architecture specifications or of the need to evaluate them. Hence, it is essential to involve stakeholders such as the Data Governance Council in reviewing and signing off on the enterprise data model to ensure value. Training on data architecture processes and deliverables is necessary, but training people effectively can be challenging. Sarah Adani, the team lead responsible for data architecture, emphasises the importance of semantics and deliverables in data architecture.

Importance of Semantics and Data Architecture

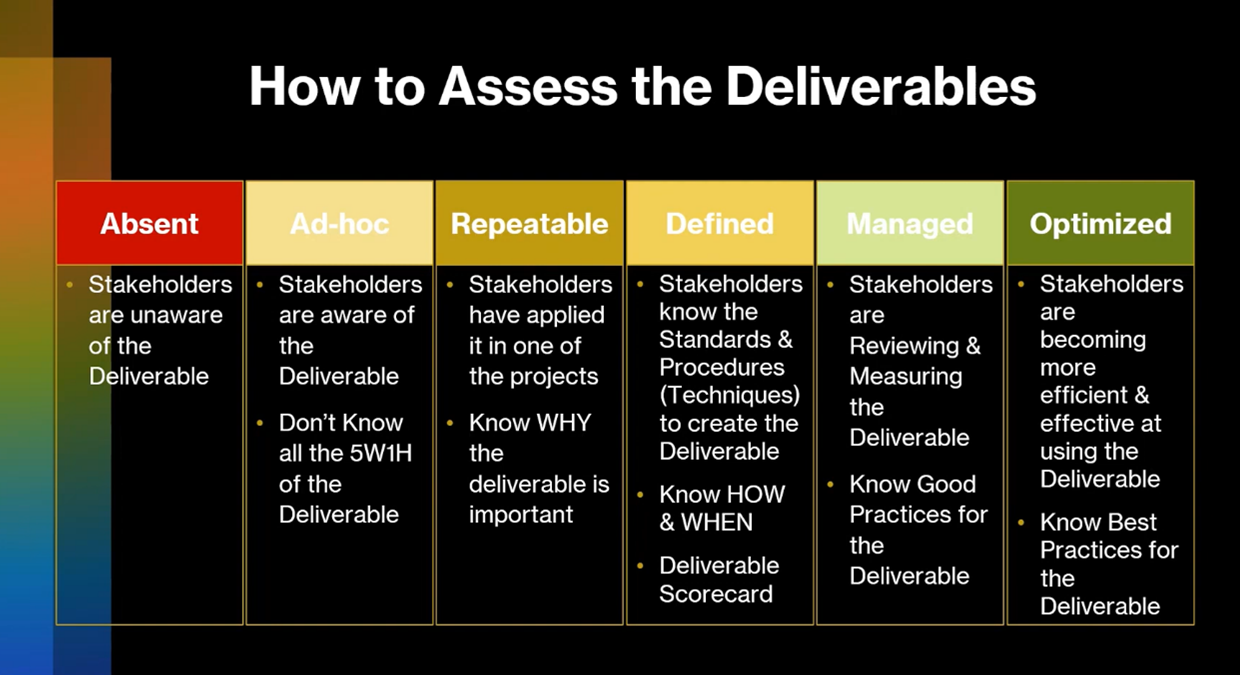

Howard emphasises the importance of clearly understanding an organisation's semantics. Progressing from a business glossary to a data dictionary, data model, taxonomy, and ontology is crucial for machines to understand the meaning of an organisation's data. Data architects are responsible for assessing semantics and defining taxonomies. Creating a taxonomy and an ontology makes semantic definitions stronger and machine-readable. An organisation can only claim that a practice exists if it has a defined practice or policy; the absence of one indicates a lack of understanding or implementation. Ad hoc decision-making implies limited or partial knowledge. Personal experience may inform that knowledge, but it cannot guarantee complete understanding.

Figure 15 How to Assess the Deliverable

Levels of Deliverable Management and Training

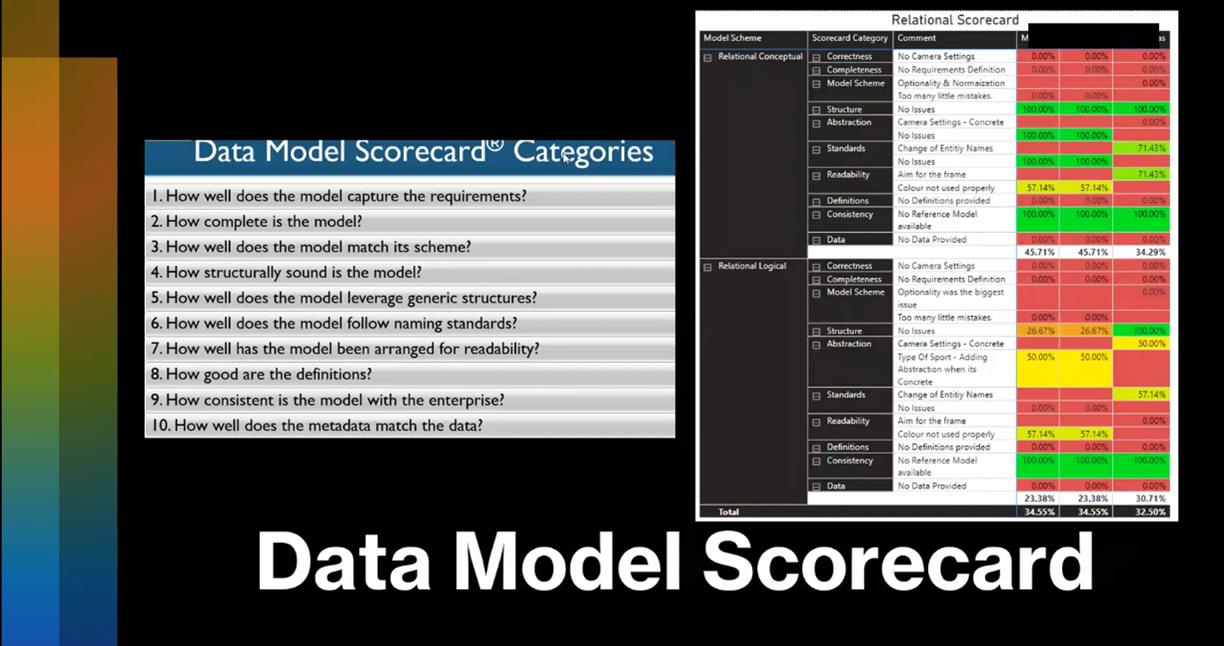

Howard emphasises the importance of understanding why and how to create consistent deliverables. He explains five levels of deliverable management: ad hoc, defined, managed, optimised, and sustaining. At the defined level, practices are operationalised and well established, and stakeholders know the deliverable and how it should be used. At the managed level, stakeholders review and measure the deliverable, while at the optimised level, they continuously improve their efficiency and effectiveness in using it. Howard stresses the need for a scorecard or SMART metrics to measure the success of a deliverable and mentions his team's training programme, which assesses individuals' ability to analyse the deliverable and provide answers based on it in different situations. Finally, Howard points out the importance of defining every deliverable to avoid arguments and ensure structure.
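The five named levels lend themselves to an ordered enumeration, which makes it easy to compare a current level with a target. The sketch below is illustrative only: the numeric values, the extra `ABSENT = 0` member (borrowed from the zero-to-five maturity scale mentioned earlier in the write-up), and the `levels_to_reach` helper are assumptions rather than part of the presented material.

```python
from enum import IntEnum


class DeliverableLevel(IntEnum):
    # Numeric values are an assumption; the webinar names the levels only.
    ABSENT = 0      # no deliverable exists (assumed, from the 0-5 maturity scale)
    AD_HOC = 1
    DEFINED = 2
    MANAGED = 3
    OPTIMISED = 4
    SUSTAINING = 5


def levels_to_reach(current, target):
    """Return the levels that still have to be achieved, in order."""
    return [DeliverableLevel(n) for n in range(current + 1, target + 1)]


# Example: an enterprise data model that is currently produced ad hoc.
roadmap = levels_to_reach(DeliverableLevel.AD_HOC, DeliverableLevel.MANAGED)
print([level.name for level in roadmap])
```

Because `IntEnum` members compare as integers, a check such as `current >= DeliverableLevel.DEFINED` can be used directly in a scorecard.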

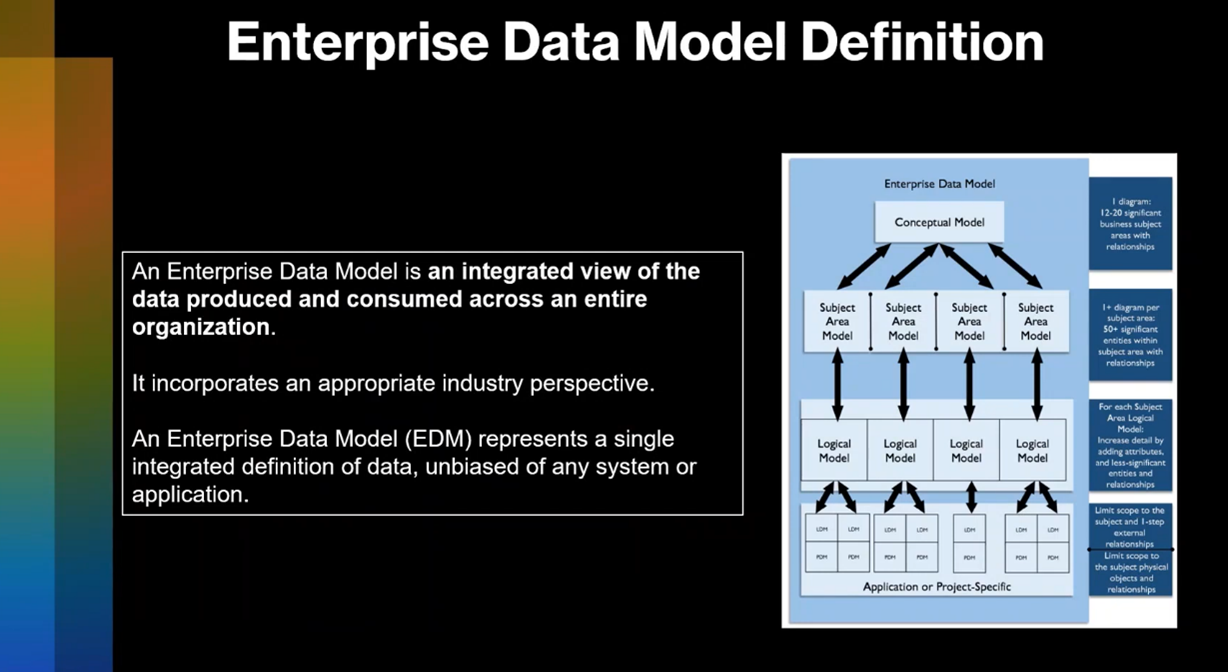

Figure 16 Enterprise Data Model Definition

Structure and Benefits of the Enterprise Data Model

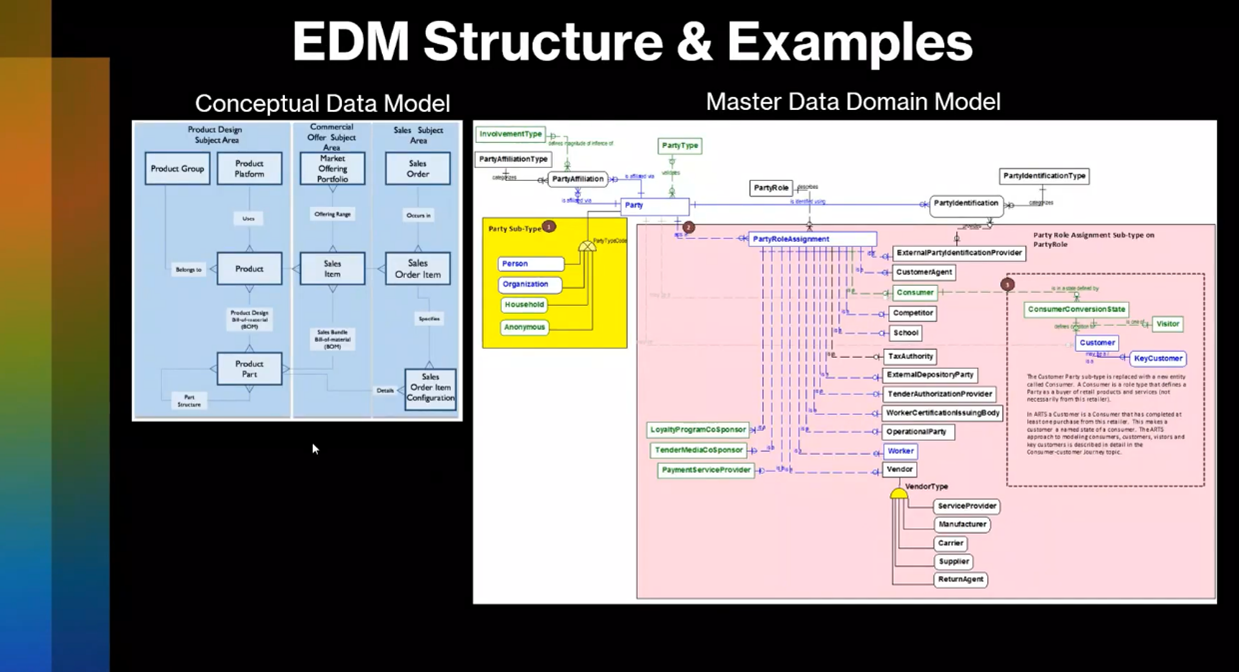

The enterprise data model is a crucial aspect of data management, as it provides consistency, common meaning, and understanding across different business units. The model comprises conceptual, subject, logical, and physical structures, with the subject area model being built by business stakeholders and data modellers using the enterprise model as a starting point for their projects.

The enterprise data model serves as a master blueprint for the organisation, providing common definitions such as data type specifications. It also includes conceptual and logical models to cover qualitative and quantitative aspects.

Data management maturity assessments involve qualitative questions, and master data personnel in particular benefit from having an enterprise data model. Such a model is necessary when creating subject area and application data models and when buying or building new data models. When a data model is purchased, it may need to be reverse engineered.

Data Modelling and Data Flow

Data modelling is a critical process in which data is organised, structured, and analysed at both data warehouse and application levels. The conceptual model is used to describe the business, helping stakeholders to understand and recognise it. Additionally, a master data domain model, such as a party model, can describe the master data domain.

A scorecard can be employed to assess the maturity and effectiveness of the data modelling process. Data architects should ensure that the data model is consistent with the enterprise data model.

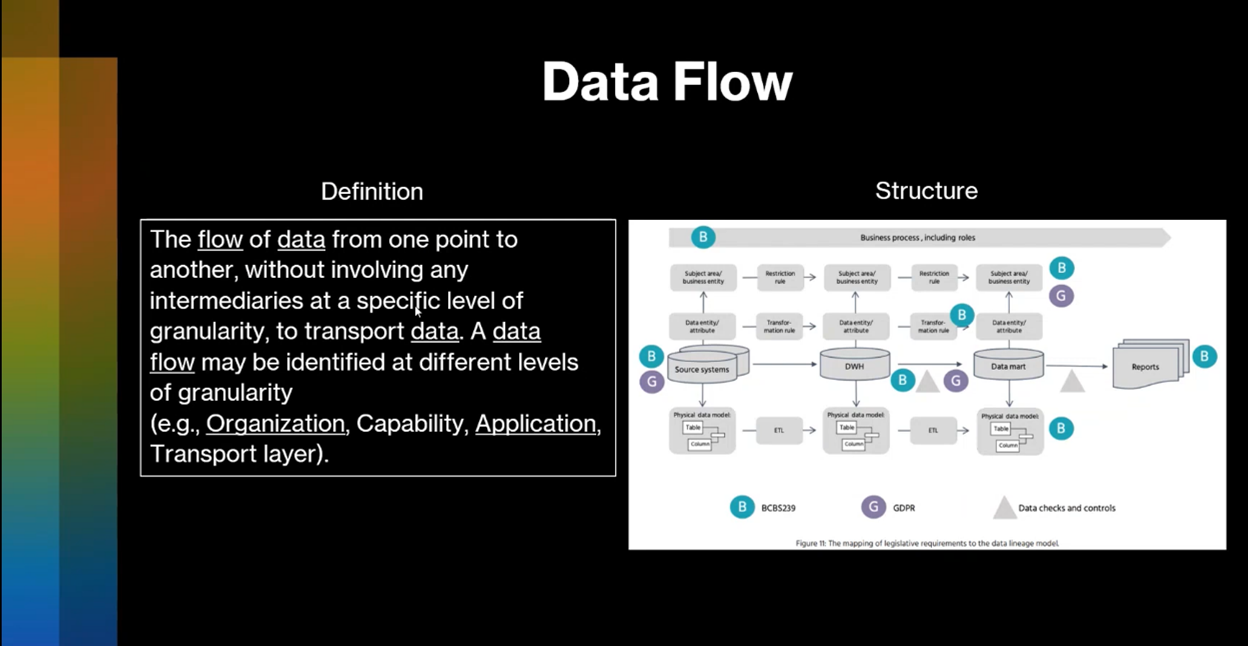

Power BI is a useful tool to load and analyse data modelling results, and the availability of a data model scorecard is crucial for assessing the maturity of data modelling. The data flow illustrates the movement of data from source systems to the data warehouse, data mart, and reports, making it an essential aspect of data modelling.

Figure 17 EDM Structure and Examples

Figure 18 Data Model Scorecard

Figure 19 Data Flow, Definition and Structure

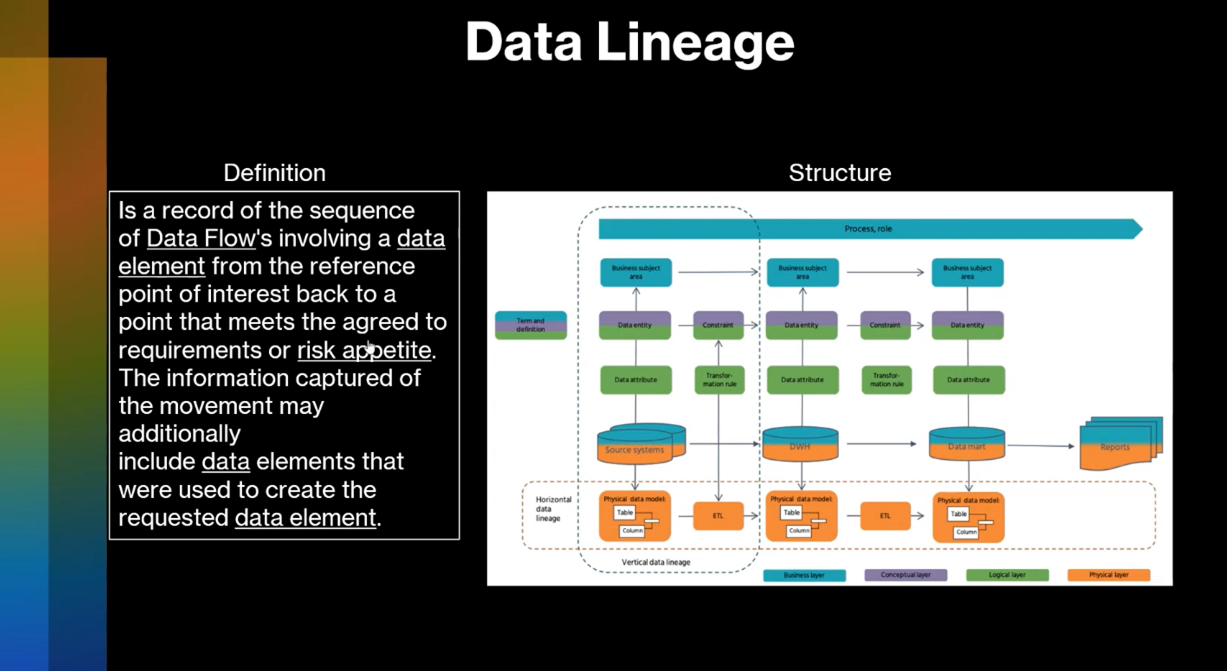

Data Flows and Data Lineage

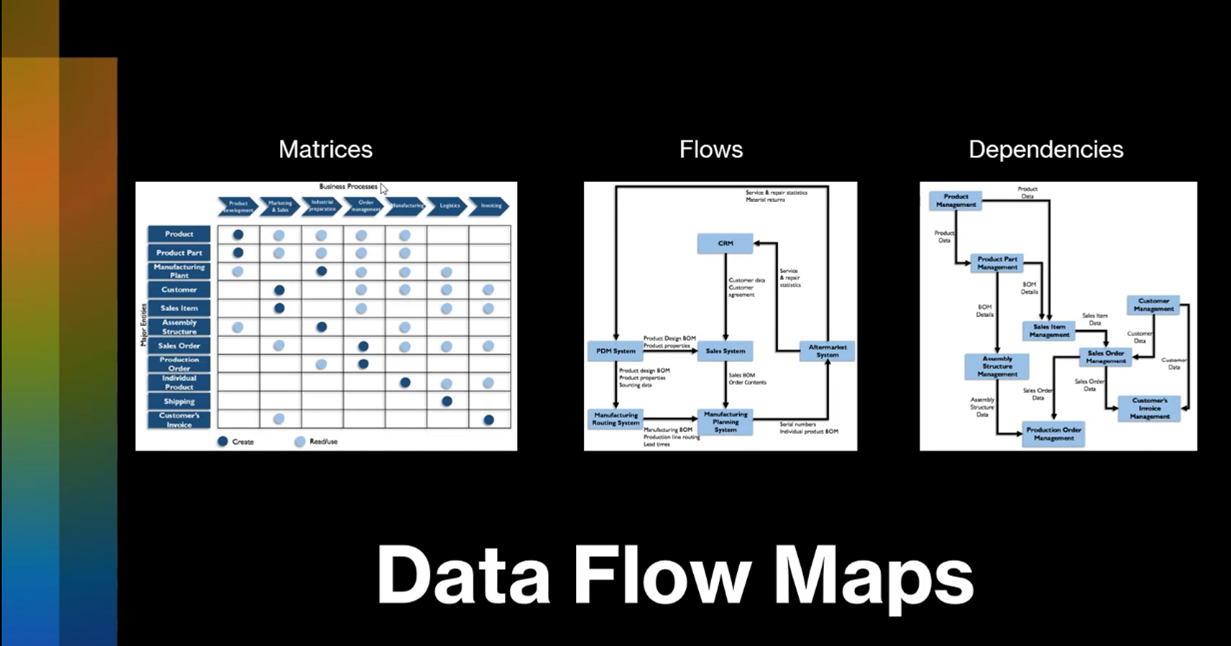

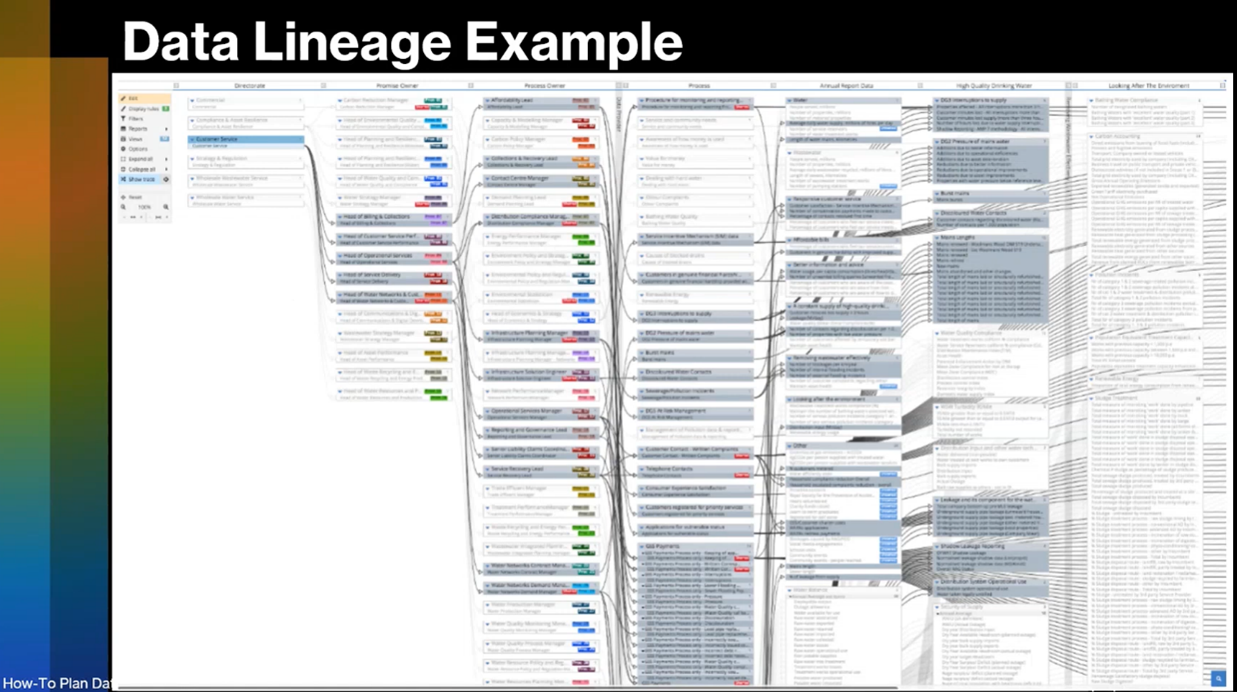

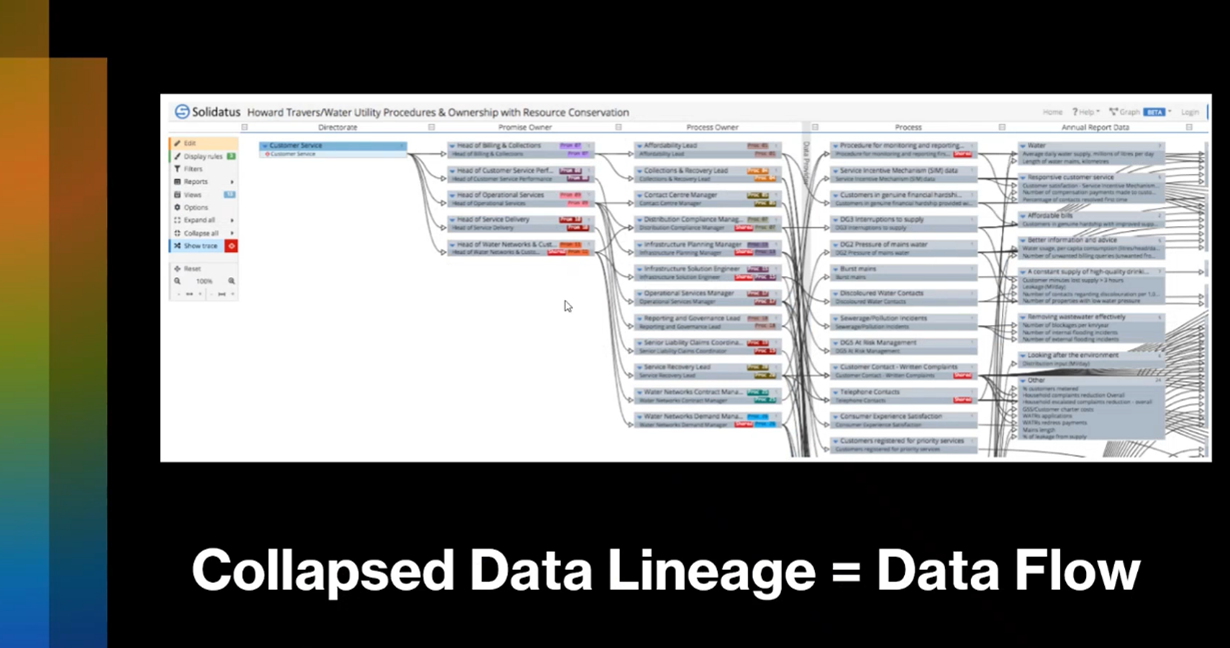

Data flows are an essential aspect of any organisation. They can be created at different levels, such as organisation, capability, and application. Data flow diagrams are used for purposes including data privacy, regulatory compliance, and impact assessment. Data flows can be represented in different formats, such as business process diagrams, subject area diagrams, and capability dependence bots. The Solidatus tool simplifies creating data flows by collapsing data lineage into a diagram. It also includes regulatory perspectives such as those of the Basel Committee on Banking Supervision (BCBS) and the General Data Protection Regulation (GDPR), making it a comprehensive solution for creating data flows.
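The idea that a data flow is collapsed data lineage can be illustrated with a short Python sketch. It does not reflect how any particular lineage tool works; the `system.table.column` naming convention, the example lineage edges, and both helper functions are assumptions made for the illustration.

```python
def collapse_lineage(column_edges):
    """Collapse column-level lineage into system-level data flows.

    Each edge is a (source, target) pair of 'system.table.column' strings
    (an assumed naming convention). Edges that stay inside one system are
    dropped, and duplicate system-to-system links are merged.
    """
    flows = set()
    for source, target in column_edges:
        source_system = source.split(".")[0]
        target_system = target.split(".")[0]
        if source_system != target_system:
            flows.add((source_system, target_system))
    return sorted(flows)


def downstream_systems(flows, start):
    """Impact assessment: every system reachable from `start`."""
    reached, frontier = set(), [start]
    while frontier:
        current = frontier.pop()
        for source, target in flows:
            if source == current and target not in reached:
                reached.add(target)
                frontier.append(target)
    return sorted(reached)


# Hypothetical lineage from a source system to a report.
lineage = [
    ("crm.customer.id", "warehouse.dim_customer.customer_id"),
    ("crm.customer.name", "warehouse.dim_customer.name"),
    ("warehouse.dim_customer.name", "warehouse.dim_customer.display_name"),
    ("warehouse.dim_customer.customer_id", "mart.sales.customer_id"),
    ("mart.sales.customer_id", "report.revenue.customer"),
]
flows = collapse_lineage(lineage)
print(flows)
print(downstream_systems(flows, "crm"))
```

Five column-level edges collapse into three system-level flows, and the same collapsed graph answers the impact question of which systems are affected by a change in the source.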

Figure 20 Data Flow Maps

Figure 21 Data Lineage

Figure 22 Data Lineage Example

Figure 23 Collapsed Data Lineage = Data Flow

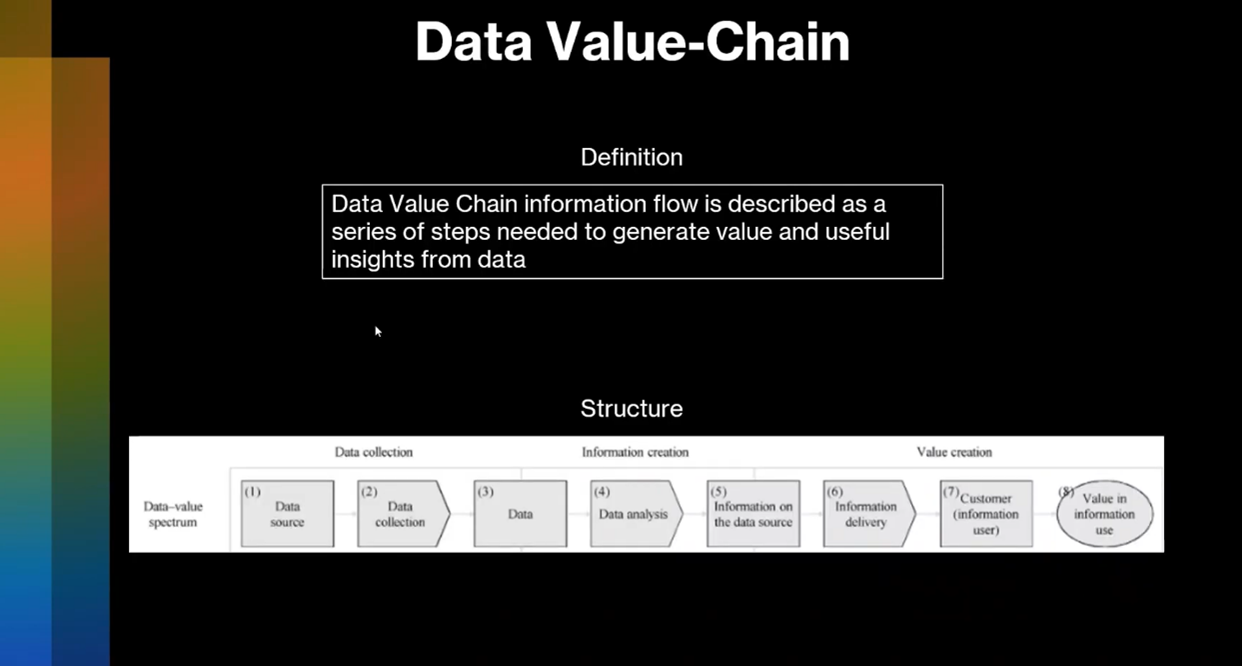

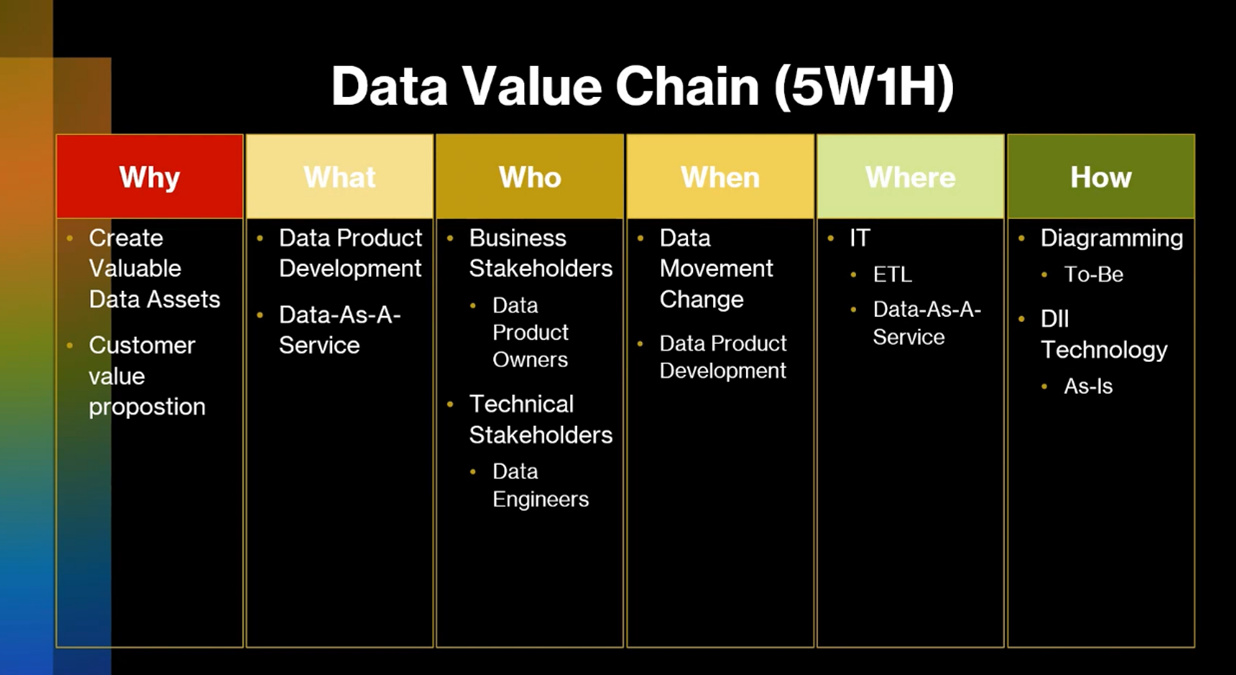

Data Value Chain and Data as a Service

A data value chain is a process that involves connecting and transforming data into information and delivering that information to create valuable data assets and support a data customer value proposition. The goal of this process is to generate value and insights from data.

One example of a data value chain is a heartbeat monitoring service where data from the heart is collected, transformed into information about heart conditions, and delivered back to the customer for better cardiovascular health.

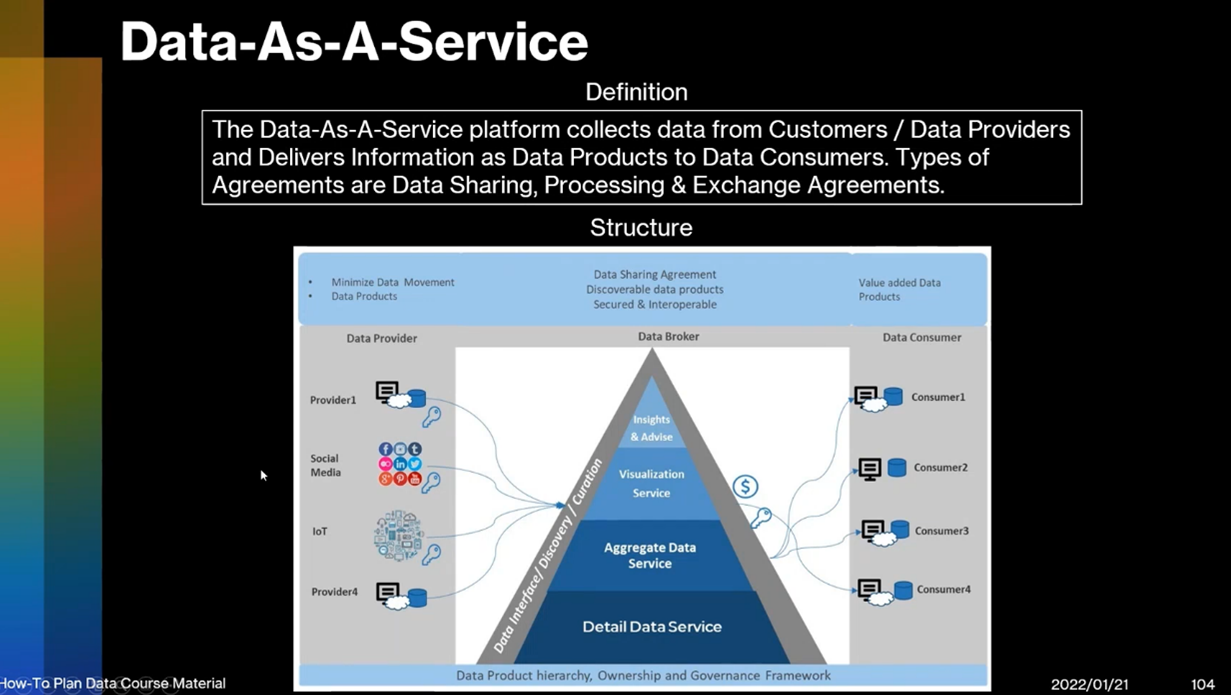

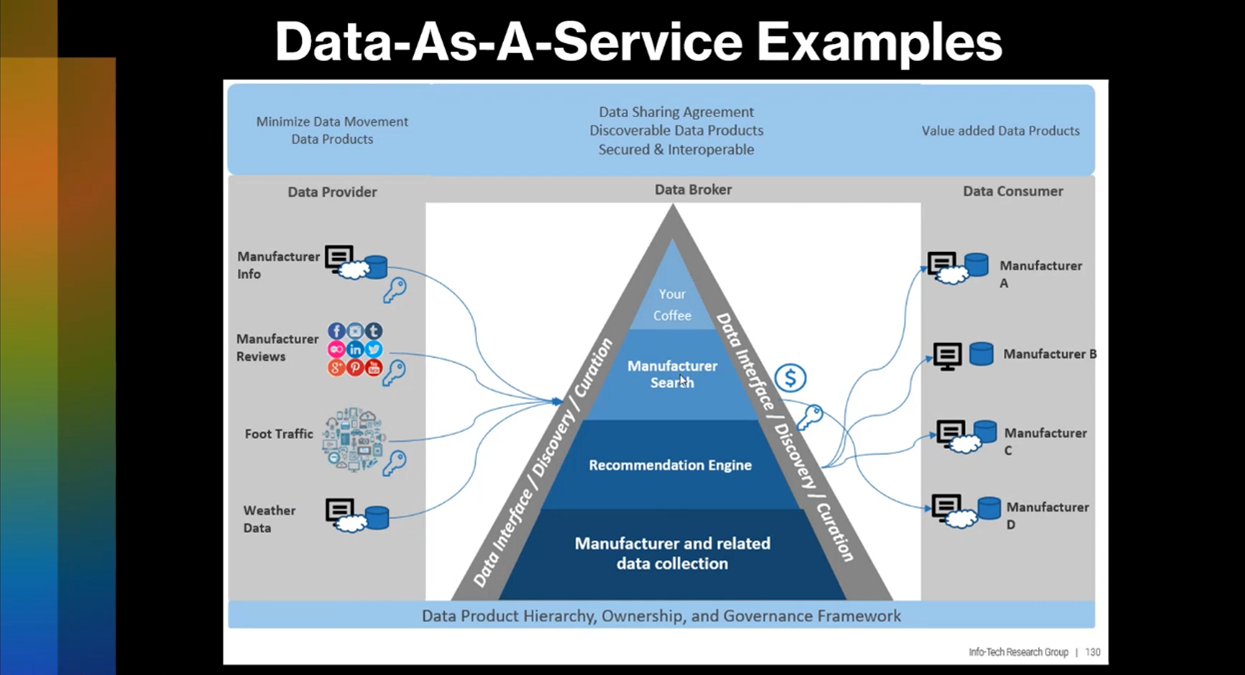

Another example is data as a service, which involves setting up a detailed data service in the cloud, aggregating and visualising the data, and deriving insights from it. A coffee shop that relies on data from coffee manufacturers and customer reviews to provide better coffee products and services is an example of data as a service.

Figure 24 Data Value-Chain. Definition and Structure

Figure 25 Data Value Chain (5W1H)

Figure 26 Data-As-A-Service

Data Management and Maturity Assessment Training

Howard emphasises the need to train employees on data management and maturity assessment. He explains that risk assessments are essential for understanding the potential risks to the business, given its data maturity level and appetite for risk, and suggests assessing the impact and probability of each risk to determine its risk rating. The topic of data architects and ontologists is brought up, and Howard acknowledges that reaching the maturity level needed for ontology creation can be challenging. Enterprise data models, such as Len Silverston's universal data models, provide definitions and evolve into business clustering and ontologies. Lastly, the examples of FIBO and Juergen Timer's enterprise data model, 30 AM, demonstrate the movement towards ontology and data model creation at a more specific business level.
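A risk rating derived from impact and probability can be sketched as follows. The write-up does not say how the two are combined, so multiplying them, the one-to-five scales, and the band thresholds are all assumptions that follow a common risk-matrix convention rather than the presenter's method.

```python
def risk_rating(impact, probability):
    """Return (score, band) for a risk.

    Assumptions: impact and probability are each rated 1-5, the score is
    their product, and the band thresholds below are illustrative.
    """
    for label, value in (("impact", impact), ("probability", probability)):
        if not 1 <= value <= 5:
            raise ValueError(f"{label} must be between 1 and 5")
    score = impact * probability
    if score >= 15:
        band = "high"
    elif score >= 6:
        band = "medium"
    else:
        band = "low"
    return score, band


# Hypothetical risks linked to low data architecture maturity.
print(risk_rating(4, 4))  # no enterprise data model, frequent integration rework
print(risk_rating(2, 3))
print(risk_rating(1, 5))
```

An organisation with a lower appetite for risk would lower the thresholds rather than change the scoring itself.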

Figure 27 Data-As-A-Service Examples

Discussion on the Challenges of Implementing Reference Architectures and the Importance of Examples in Understanding Processes

Howard discusses the importance of customising reference architectures for each business. The IBM data model is cited as an example of a rigid enterprise data model that can be limiting. Training and examples are suggested as a way to make maturity assessment less subjective and political, and Howard shares his experience of using examples to help data citizens understand processes and deliverables. The connection between ontology and the enterprise data model is explored as a way to enhance knowledge graphs, and implementing an ontology is of interest in situations where it can be beneficial. Howard expresses interest in funding for innovative projects and suggests that connecting the enterprise data model to a knowledge graph could be a way to achieve this.

Different Approaches to Data Storage and Knowledge Graphs

Howard provides an overview of the concept of data mesh and its relationship with artificial intelligence and machine learning. There are two main approaches to building a data mesh: one involves architects and ontologists, and the other uses active metadata and knowledge graphs. While some people are sceptical about how effective it is to build a data mesh automatically, it is widely acknowledged that the connection between data mesh and AI/machine learning is crucial for engagement.

Polyglot data modelling is an approach that uses a conceptual model and different languages, such as JSON, to generate a model. Starting at the denormalised or document level and then normalising is recommended as an alternative perspective.
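A minimal sketch of the polyglot idea is shown below: one conceptual definition is used to generate both a JSON Schema for a document store and a SQL table definition for a relational store. The `CUSTOMER` model, its dictionary layout, the type mapping, and both generator functions are hypothetical and were chosen only to illustrate the approach.

```python
import json

# Hypothetical conceptual model: one entity, its attributes, and its key.
CUSTOMER = {
    "entity": "Customer",
    "attributes": {"customer_id": "integer", "name": "string", "email": "string"},
    "key": "customer_id",
}

# Assumed mapping from conceptual types to SQL column types.
SQL_TYPES = {"integer": "INTEGER", "string": "VARCHAR(255)"}


def to_json_schema(model):
    """Generate a JSON Schema document for a document store."""
    return {
        "title": model["entity"],
        "type": "object",
        "properties": {
            name: {"type": type_name}
            for name, type_name in model["attributes"].items()
        },
        "required": [model["key"]],
    }


def to_sql_ddl(model):
    """Generate a CREATE TABLE statement for a relational store."""
    columns = []
    for name, type_name in model["attributes"].items():
        column = f"{name} {SQL_TYPES[type_name]}"
        if name == model["key"]:
            column += " PRIMARY KEY"
        columns.append(column)
    return f"CREATE TABLE {model['entity'].lower()} ({', '.join(columns)});"


schema = to_json_schema(CUSTOMER)
ddl = to_sql_ddl(CUSTOMER)
print(json.dumps(schema, indent=2))
print(ddl)
```

Both outputs stay consistent because they are derived from the same conceptual definition instead of being maintained by hand.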

Data architects are critical in assisting with business intelligence and machine learning due to the need for knowledge integration. However, challenges arise in assessments due to the lack of clear examples or descriptions of deliverables. The job of assessing is vast, with over 180 different deliverables, and more support and examples are needed to facilitate understanding of the deliverables. Small descriptions and examples can help those unfamiliar with the subject grasp the concepts.

If you would like to join the discussion, please visit our community platform, the Data Professional Expedition.

Additionally, if you would like to be a guest speaker on a future webinar, kindly contact Debbie (social@modelwaresystems.com).

Don’t forget to join our LinkedIn and Meetup data communities so that you don’t miss out!